This page presents an excerpt from the chapter Sound Event Detection in Everyday Environments, taken from my PhD thesis titled Computational Audio Content Analysis in Everyday Environments.

Sound Event Detection in Everyday Environments

Detection of sound events is required to gain an understanding of the content of audio recordings from everyday environments. As discussed in the previous chapters, sound events encountered in everyday environments often overlap with other sounds in time and frequency. Therefore, polyphonic sound event detection is essential for well-performing audio content analysis in everyday environments.

This chapter goes through the work published in [Mesaros2010, Heittola2010, Heittola2011, Heittola2013a, Heittola2013b, Mesaros2016, Mesaros2018]. These publications deal with various aspects of polyphonic detection systems: forming audio datasets, evaluating detection performance, training acoustic models from mixture signals, detecting overlapping sounds, using contextual information, and organizing evaluation campaigns related to sound event detection.

Problem Definition

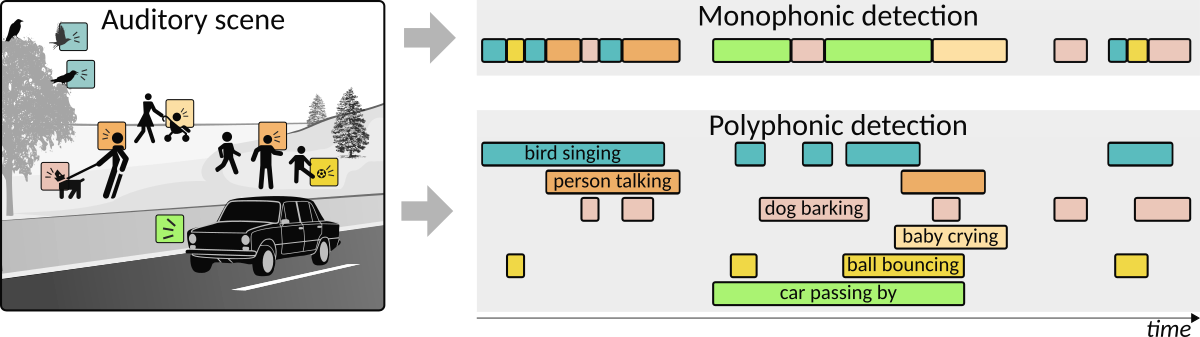

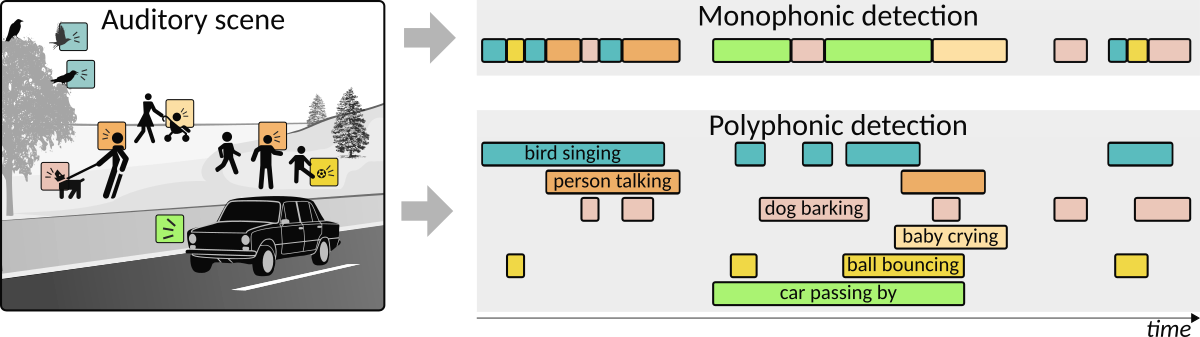

Sound event detection aims to simultaneously estimate what is happening and when it is happening. In other words, the aim is to automatically find the start and end times of a sound event and to associate a textual class label with it. The detection can output either only the most prominent sound event at each time (monophonic detection) or also the other simultaneously active events (polyphonic detection). Examples of both types of detection are shown in Figure 4.1. Monophonic detection captures only a fragmented view of the auditory scene: long sound events may be split into several shorter events, and quieter events in the background may be masked by louder events and not detected at all. Sound events detected with a monophonic detection scheme may be sufficient for certain applications; however, general content analysis often requires polyphonic detection.

Figure 4.1

Illustration of how monophonic and polyphonic sound event detection capture the events in the auditory scene.

The input to the detection system consists of acoustic features \(\boldsymbol{x}_{t}\) extracted from the input signal in each time frame \(t\). The aim is to learn an acoustic model that estimates the presence of predefined sound event classes, \(\boldsymbol{y}_{t}\), in each time frame. The model is learned from learning examples: audio recordings along with annotated sound event activities. The presence probability of the sound event classes in a time frame is given by the posterior probability \(p(\boldsymbol{y}_{t}|\boldsymbol{x}_{t})\). These probabilities are converted into binary class activities per frame (the event roll), and sound event onset and offset timestamps are obtained from runs of consecutive active frames. The output of the system is usually formatted as a list of detected events (the event list), containing a class label with onset and offset timestamps for each event.
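The conversion from frame-wise posteriors to an event roll and then to an event list can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the thesis: the fixed threshold of 0.5, the frame hop, and the function names are assumptions, and practical systems may add further post-processing.

```python
def posteriors_to_event_roll(posteriors, threshold=0.5):
    """Binarize frame-wise class presence probabilities into an event roll.

    posteriors: one list of per-class probabilities p(y_t | x_t) per frame t.
    Returns one list of 0/1 class activities per frame (assumed fixed threshold).
    """
    return [[1 if p >= threshold else 0 for p in frame] for frame in posteriors]


def event_roll_to_event_list(roll, class_labels, hop_seconds):
    """Turn runs of consecutive active frames into (label, onset, offset) events.

    Frame t is assumed to span [t * hop_seconds, (t + 1) * hop_seconds).
    """
    events = []
    n_frames = len(roll)
    for k, label in enumerate(class_labels):
        onset_frame = None
        # Iterate one step past the last frame so a run reaching the end is closed.
        for t in range(n_frames + 1):
            active = t < n_frames and roll[t][k] == 1
            if active and onset_frame is None:
                onset_frame = t  # a run of active frames starts here
            elif not active and onset_frame is not None:
                # The run covered frames onset_frame .. t - 1.
                events.append((label, onset_frame * hop_seconds, t * hop_seconds))
                onset_frame = None
    # Order the event list by onset time, then by label.
    return sorted(events, key=lambda event: (event[1], event[0]))


posteriors = [[0.9, 0.1], [0.8, 0.7], [0.2, 0.9], [0.1, 0.6], [0.7, 0.2]]
roll = posteriors_to_event_roll(posteriors)
events = event_roll_to_event_list(roll, ["speech", "car"], hop_seconds=0.5)
print(events)  # [('speech', 0.0, 1.0), ('car', 0.5, 2.0), ('speech', 2.0, 2.5)]
```

In this toy example, the two "speech" runs become two separate events, while "car" overlaps both of them, which is exactly the situation a monophonic output could not represent.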

Challenges

The main challenges faced in the development of a robust polyphonic sound event detection (SED) system are related to the characteristics of everyday environments and everyday sounds. When the main part of the work included in this thesis was done, additional challenges were related to dataset quality, the number of examples in the datasets, and the lack of established benchmark datasets and evaluation protocols to support SED system development.

Simultaneously occurring sound events in the auditory scene produce a mixture signal, and the challenge for the SED system is to detect individual sound events from this signal. The detection should focus only on certain sound event classes while being robust against interfering sounds. As the number of sound event classes that can realistically be used in an SED system is much lower than the actual number of sound sources in most natural auditory scenes, some of the overlapping sounds will always be unknown to the SED system and can be considered interfering sounds. In a real use case, the position of the sound-producing source in relation to the microphone cannot be controlled. This leads to varying loudness levels of the sound events, which, together with overlapping sounds, results in challenging signal-to-noise ratios in the captured audio recordings. The variability in the acoustic properties of the environments (e.g. room acoustics or reverberation) further contributes to the diversity of the audio material.

Sound instances assigned the same sound event label often have large intra-class variability. This is due to variability in the sound-producing mechanisms of the sound events, and it should be taken into account when learning the acoustic models to produce a well-performing SED system. Ideally, a large set of learning examples is needed to fully cover the variability of the sounds in model learning; in practice, however, collecting such a dataset is impossible for most use cases. Thus, robust acoustic modeling of sound events with a limited number of learning examples is one of the major challenges. Sound events occurring in natural everyday environments are connected through the context in which they appear; however, the temporal sequence of these events seldom follows any strict structure. This unstructured nature of the audio presents an extra challenge for SED system design compared to speech recognition or music content retrieval systems, where the analysis can be steered with the structural constraints of the target signal.

The development of a robust SED system depends on the dataset quality and the number of examples per sound event class available in the dataset. Collecting data is relatively easy; however, manual annotation is a subjective process, which poses a challenge for both learning and evaluating the system. In the annotation stage, the annotator listens to the recordings, manually indicates the onset and offset timestamps of the sound events, and selects an appropriate textual label to describe each sound event. As the amplitude envelopes of sound events are often quite smooth, clear change points are hard to determine, and this temporal ambiguity leads to some degree of subjectivity in the onset and offset annotations. Furthermore, the selection of the textual label involves the listener’s own prior experiences, and if free label selection is allowed, sounds will be labeled inconsistently across annotators. Even the same annotator might label similar sounds differently depending on the context in which the sound event occurred. These subjective aspects of the annotation process produce noisy reference data, which has to be taken into account in the development and evaluation of SED systems.

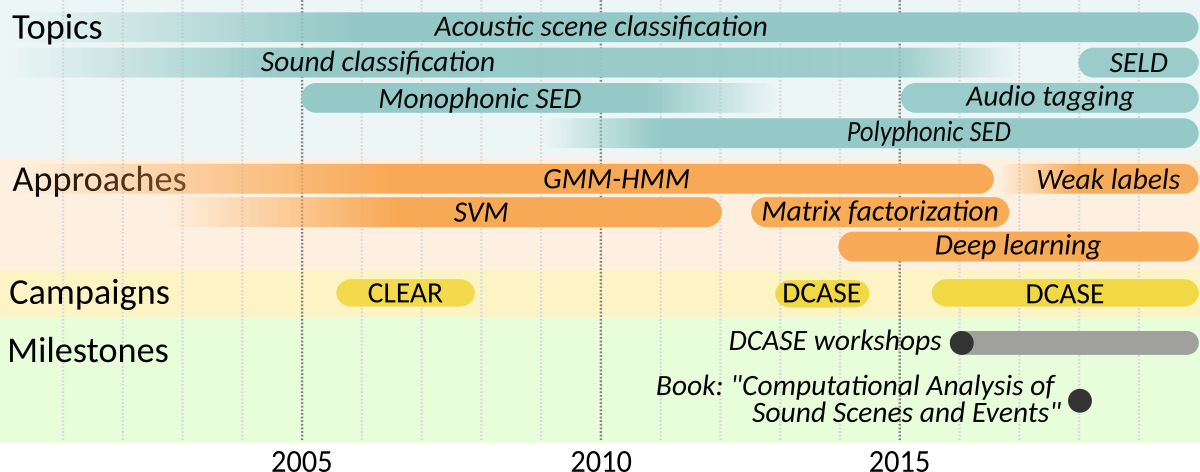

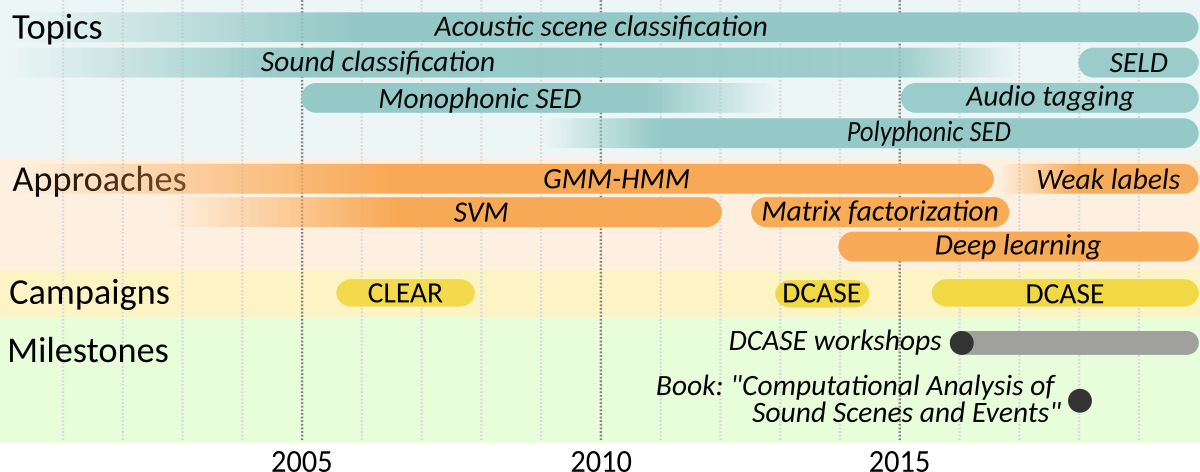

Research on audio content analysis in everyday environments has increased steadily over the last ten years. Work in this field can be divided into five major research topics: acoustic scene classification (ASC), sound event classification, audio tagging, sound event detection, and joint sound event localization and detection (SELD). The research history of this field, together with the works included in this thesis, is illustrated on the timeline in Figure 4.2. This section discusses related work, including research tasks, approaches, evaluation campaigns, and milestones.

Figure 4.2

Research timeline in the field of audio content analysis in everyday environments indicating major trends in research topics and approaches and highlighting evaluation campaigns and major milestones.

Research Field

Audio content analysis in everyday environments has existed as a separate, identifiable research field only for the last 10-15 years. Preliminary research work falls mostly under Computational Auditory Scene Analysis (CASA), where computational methods mimicking the human auditory system are used to derive properties of individual sounds in mixture signals and to group them into sound events [Ellis1996, Szabo2016]. The early research in the field was inspired predominantly by speech recognition and music information retrieval, and the approaches were based on traditional supervised machine learning methods such as Gaussian mixture models (GMM) [Kumar2013], hidden Markov models (HMM) [Zhou2007], and support vector machines (SVM) [Zhuang2010]. Contemporary work is based on deep learning [Cakir2017], and the approaches are strongly influenced by general machine learning developments across major research domains such as speech recognition, computer vision, and natural language processing. Whereas early works used strongly labeled datasets of limited size for training and evaluation, contemporary works employ substantially larger datasets, possibly containing weakly labeled data.

Evaluation campaigns have had a substantial role in the growth of the research field in recent years: they have standardized evaluation protocols, established evaluation metrics, published open benchmark datasets, and provided an easily accessible platform for researchers from neighboring research fields. The first evaluation campaign to include tasks related to the analysis of everyday environments was the Classification of Events, Activities, and Relationships (CLEAR) Evaluation, organized in 2006 and 2007 [Stiefelhagen2006]. The campaign presented monophonic sound event detection and sound event classification tasks in a meeting room environment using a multi-microphone setup [Temko2006a, Stiefelhagen2007]. A community-driven international challenge, Detection and Classification of Acoustic Scenes and Events (DCASE), was first organized in 2013 [Stowell2015] and has been organized annually since 2016 [Mesaros2018]. Each year, the challenge attracts 200-400 system submissions from 60-130 international research teams. New topics are introduced at each edition to spark new research and to foster ongoing research in the field. Acoustic scene classification and sound event detection have been core topics in every challenge edition, and different research questions related to these topics have been addressed by varying the task setups. The DCASE challenge uses public datasets, and these datasets have gained extensive popularity outside the evaluation campaigns as well.

The annual DCASE Workshop, organized since 2016, has had an instrumental role in the growth of the research field by providing a focused, peer-reviewed publication platform for the community [DCASE2016Workshop, DCASE2017Workshop, DCASE2018Workshop, DCASE2019Workshop]. The first, and currently the only, book to cover the research field broadly is “Computational Analysis of Sound Scenes and Events”, edited by T. Virtanen et al. [Virtanen2018]. The book can be considered one of the milestones in the field, as it focuses exclusively on content analysis of environmental audio and comprehensively covers the related research topics while describing the current state-of-the-art methods.

Everyday Sound Recognition

Works related to the recognition of everyday sounds can be roughly categorized into two groups: those aiming to recognize acoustic scenes, and those aiming to recognize individual sounds or sound events.

Acoustic scene classification is a task where a textual label identifying the environment is assigned to an audio segment [Barchiesi2015]. In addition to ASC, the task is referred to in the literature as computational auditory scene recognition [Peltonen2002] or audio-based context recognition [Eronen2006]. The main application of ASC is context awareness: the ability of a device to determine the context around it and to adjust its operation mode accordingly. The task can be set up either as a closed-set classification task, where all scene classes are assumed to be known in advance, or as an open-set classification task, where unknown scene classes may also be encountered while the system is running [Mesaros2019a]. Early approaches to the task were based on traditional supervised machine learning methods such as GMM [Peltonen2002] and HMM [Eronen2006] using spectral features such as MFCCs. DCASE2013 introduced ASC as an evaluation campaign task [Stowell2015]. A clear trend among the best-performing submissions was the use of the temporal information of the acoustic features within medium-length time segments (400 ms - 4 s) as features [Roma2013, Rakotomamonjy2015, Geiger2013]. Deep learning approaches surfaced in the DCASE2016 challenge, where almost half of the submissions used FNNs, CNNs, or RNNs [Mesaros2018]. However, due to the limited datasets at the time, classical learning methods such as SVM and matrix factorization methods such as NMF performed respectably against deep learning methods. With the larger datasets introduced in later DCASE challenges, deep learning methods have outperformed the classical approaches by a clear margin. State-of-the-art datasets contain recordings captured simultaneously with a few types of recording devices in a large selection of locations.
Current state-of-the-art systems usually use convolutional neural networks as the acoustic model to take advantage of the 2D structure of the time-frequency representation of the audio input [Abesser2020]. Furthermore, these systems tend to use a multitude of data augmentation techniques to diversify the learning examples and to produce a more robust acoustic model capable of dealing with different recording conditions, devices, and locations. Current state-of-the-art systems can surpass human recognition accuracy in cases where the test subject is not familiar with the specific environment (a so-called non-expert listener) [Mesaros2017a].

The sound classification and tagging task aims to assign one or more textual labels to an audio segment, describing the sound-producing events active within the segment. When only a single label can be assigned to an audio segment, the task is usually referred to as sound event classification or environmental sound classification. The task is closely related to sound event detection, as tagging or classification of short time segments can easily be used to produce detection output. For example, sound event tagging and classification can be applied to fixed time segments in audio-based monitoring systems to produce activity information of specific sound events [Bello2019]. Early works on this topic focused on a small selection of sound classes related to a single environment such as a kitchen [Kraft2005], bathroom [Chen2005], office [Tran2011], or meeting room [Temko2006b], whereas more recent works have expanded both the selection of classes and the environments used in development [Piczak2015, Hershey2017]. Techniques for the task follow the same main trends as for acoustic scene classification. The early works are based on classical machine learning approaches such as SVM and HMM, while more recent works are based on deep learning methods such as CNN and CRNN. Some recent works have moved away from traditional handcrafted features such as MFCCs or mel-band energies by applying feature learning techniques [Salamon2015] or an end-to-end classification scheme [Tokozume2017]. Similarly to ASC, data augmentation techniques are widely used in state-of-the-art systems [Salamon2017].

Sound Event Detection

Sound event detection is a task where sound events are temporally located and classified in an audio signal at the same time: for each detected sound event instance, an onset and an offset are determined and a textual label describing the sound is assigned. Works related to sound event detection can be roughly categorized, based on the complexity of the system output, into monophonic and polyphonic sound event detection approaches. The main applications of sound event detection include audio-based monitoring, multimedia access, and human activity analysis. These applications are closely related to the general audio content analysis applications discussed earlier in the Introduction. Event detection can be applied in monitoring and surveillance systems that require detailed information about event onset and offset timestamps in addition to event labels [Crocco2016]. Example use cases for monitoring are the detection of specific events such as glass breaking [Mesaros2019b], gunshots [Clavel2005], screams [Valenzise2007], or footsteps [Atrey2006]. In content-based analysis of multimedia, sound event detection can be used to automatically generate keywords [Jansen2017, Takahashi2018] for large repositories, further enabling content-based indexing and search functionality. In human activity analysis, SED can be used to detect individual sound events associated with a certain activity [Chahuara2016]. Research on sound event detection also supports and complements research in many neighboring fields, such as bioacoustics, where it is used to assess biodiversity [Gasc2013], and robotics, where it facilitates human-robot interaction [Do2016].

Early approaches to sound event detection were based on traditional pattern recognition techniques like GMM [Vacher2004, Zhuang2009], HMM [Zhou2007, Heittola2008], and SVM [Temko2007, Temko2009]. These works usually focused on cases where sound events occurred mostly as a sequence of individual sounds with silence or background noise between them, and on use cases with a relatively small number of classes. Many of these works are related to early evaluation campaigns such as CLEAR 2006 and 2007 [Temko2006, Stiefelhagen2008] and DCASE2013 [Stowell2015]. Starting about a decade ago, the specific tasks tackled in SED became more diverse and complex: the number of event classes has increased [Mesaros2010], the detection of overlapping sound events has been studied using synthetic [Lafay2017] and real data [Mesaros2018], and imbalanced data has been studied in the task of rare sound event detection [Mesaros2019b].

More recent works are based on deep learning methods such as CNN [Kong2019] and CRNN [Cakir2017], and, similarly to recent sound recognition approaches, data augmentation techniques [Parascandolo2016] are often used to increase data diversity. Commonly, these works use handcrafted acoustic features such as mel-band energies, while some work has been done to automatically learn better representations from the spectrogram [Salamon2015] or directly from the raw audio signal [Cakir2018]. As an alternative to data augmentation, transfer learning techniques are used to cope with the lack of sufficient data. In these techniques, a neural network based model is trained to solve a pretext task using a large amount of data, and the outputs of the pretrained model are then used as new features, embeddings, for the actual target task [Cramer2019, Jung2019]. The deep learning-based approaches have highlighted the need for large open-access datasets. The first sound event datasets were efforts of individual research groups [Mesaros2016, Mesaros2017b] and contained manually annotated event onsets and offsets. As datasets grew larger, such detailed annotations became impractical to produce. Most recent datasets contain only recording-level annotations of sound event activity (weak annotations), which makes it easier to produce annotated data in larger volumes. Instead of using expert annotators, the annotation effort has been further decreased by using crowd-sourced non-expert annotators [Fonseca2019], possibly by automatically tagging segments and having the tags verified by annotators [Gemmeke2017]. Data collected this way can be considered noisy, because some event labels may be incorrect or missing, and weak, because it does not contain onset and offset timestamps for the sound events. Learning from such data requires special techniques to compensate for the unreliability of the event labels [Fonseca2019], and weakly-supervised learning approaches [Kumar2016, Xu2018] to be able to train detection systems.

Audio Datasets

The work included in this thesis is based on three datasets: Sound Effects 2009, TUT-SED 2009, and TUT-SED 2016. All of these datasets were collected and annotated for sound event detection research in the Audio Research Group at Tampere University. The information about these datasets is summarised in Table 4.1.

Table 4.1 Information about the datasets used in this thesis.

| Dataset | Used in | Event instances | Event classes | Scene classes | Files | Length | Notes |
|---|---|---|---|---|---|---|---|
| Sound Effects 2009 | [Mesaros2010] | 1359 | 61 | 9 | 1359 | 9h 24min | Isolated sounds, proprietary dataset |
| TUT-SED 2009 | [Mesaros2010, Heittola2010, Heittola2011, Heittola2013a, Heittola2013b] | 10040 | 61 | 10 | 103 | 18h 53min | Continuous recordings, proprietary dataset |
| TUT-SED 2016, Development | [Mesaros2016, Mesaros2018] | 954 | 18 | 2 | 22 | 1h 18min | Continuous recordings, open dataset |
| TUT-SED 2016, Evaluation | [Mesaros2018] | 511 | 18 | 2 | 10 | 35min | Continuous recordings, open dataset |

Event instances, event classes, and scene classes describe the metadata; files and length describe the audio data.

Sound Effects 2009

For the work in [Mesaros2010], a collection of isolated sounds was gathered from a commercial online sound effects sample database (Sound Ideas samples through StockMusic.com). The samples were originally captured for commercial audio-visual productions in a close-microphone setup with minimal background ambiance. Samples were collected from nine general contextual classes: crowd, hallway, household, human, nature, office, outdoors, shop, and vehicles. In total, the dataset comprises 1359 samples belonging to 61 distinct classes.

TUT-SED 2009

The TUT-SED 2009 dataset was the first sound event dataset containing real-life continuous recordings captured in a large number of common everyday environments. The dataset was manually annotated with strong labels, and it was used in the majority of the works included in this thesis [Mesaros2010, Heittola2010, Heittola2011, Heittola2013a, Heittola2013b]. The dataset is a proprietary data collection and cannot be shared outside the University of Tampere. The data was originally collected as part of an industrial project where public release was never the aim; as a result, permission for public data release was not requested from the persons present in the recorded scenes. The aim of the data collection was to obtain a representative collection of audio scenes, and recordings were collected from ten acoustic scenes. Typical office work environments were represented by the office and hallway scenes. The street, inside a moving car, and inside a moving bus scenes represented typical urban transportation scenarios, whereas the grocery shop and restaurant scenes represented typical public space scenarios. Leisure-time scenarios were represented by the beach, basketball game audience, and track and field event audience scenes.

For each scene type, a single location with multiple recording positions (8-14 positions) was selected, as the aim of the data collection was to see how well material from a tightly focused set of acoustic scenes could be modeled. Each recording was 10-30 minutes long to capture a representative set of events in the scene. In total, the dataset consists of 103 recordings totaling almost 19 hours. The audio recordings were captured using a binaural recording setup, where the person recording wears in-ear microphones (Soundman OKM II Classic/Studio A3) in his/her ears. The recordings were stored on a portable digital recorder (Roland Edirol R-09) using a 44.1 kHz sampling rate and 24-bit resolution.

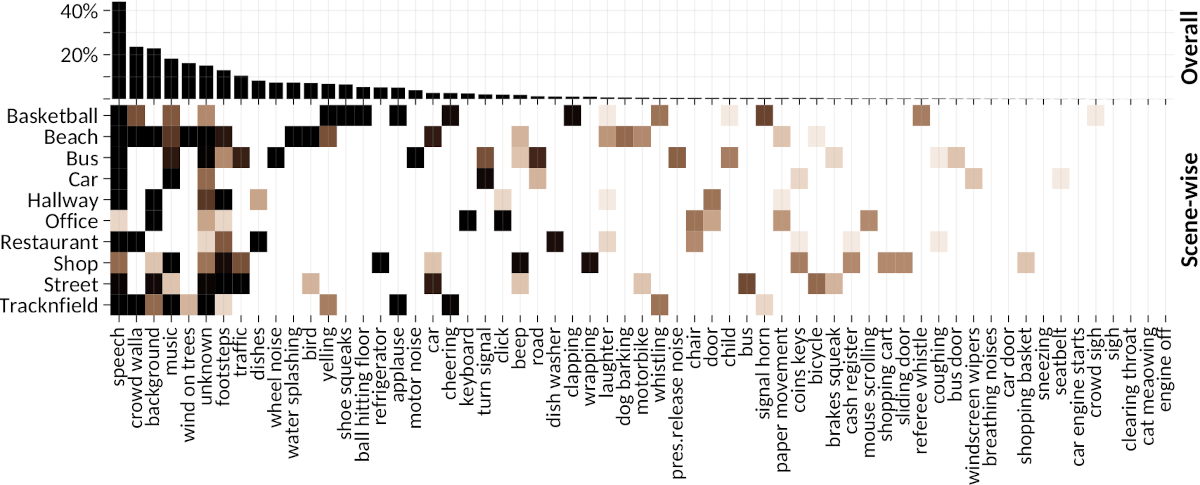

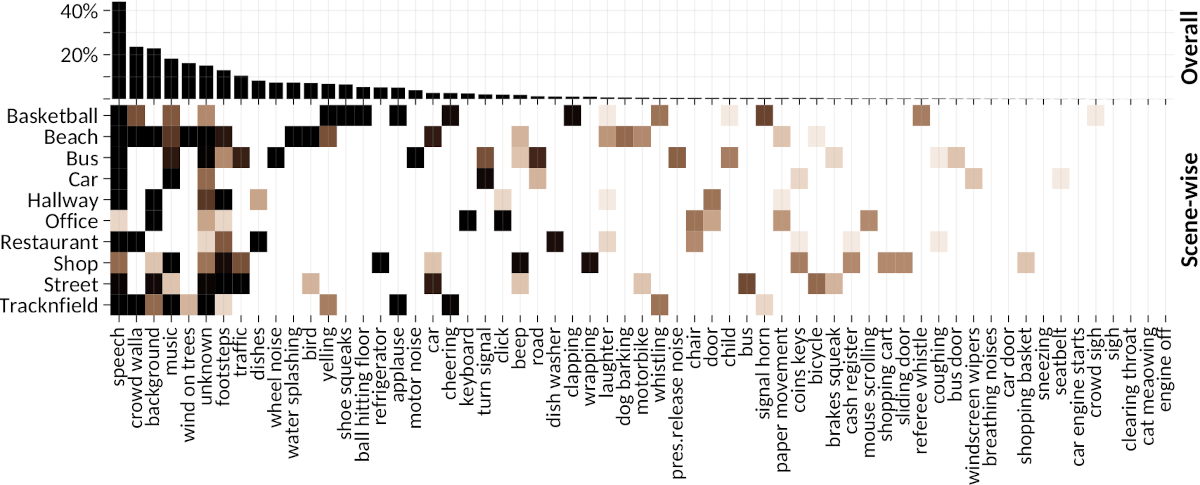

All the recordings were manually annotated by indicating the onset and offset timestamps of events and assigning a descriptive textual label to each sound event. The annotations were mostly done by the same person who made the recordings, to make them as detailed as possible: the annotator had some prior knowledge of the auditory scene, which helped to identify the sound sources. A low-quality video was recorded alongside the audio to support the annotation of complex scenes with a large variety of sound sources (e.g. street environments) by helping the annotator recall the scene. Due to the complexity of the material and the annotation task, the annotator first made a list of the active events in a recording and then annotated the temporal activity of these events within the recording. The event labels for the list were freely chosen instead of using a predefined set of global labels. This resulted in a large set of labels, which were manually grouped into 61 distinct event classes after the whole dataset was annotated. In the grouping process, labels describing the same or very similar sound events were pooled under the same event class, for example, “cheer” and “cheering”, and “barcode reader beep” and “card reader beep”. Only event classes containing at least 10 examples were taken into account, while rarer events were collected into a single class labeled “unknown”. On average, 2.7 sound events were simultaneously active in the recordings. Figure 4.3 illustrates the relative amount of event activity per class for the whole dataset as well as per scene. Each scene class has 14 to 23 active event classes, and many event classes appear in multiple scenes (e.g. speech), while some event classes are highly scene-specific (e.g. referee whistle in basketball games). Overall, the activity of the event classes is not well balanced, as expected for natural everyday environments: for example, “speech” events cover 43.9% of the recorded time in the dataset.
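The two-stage label grouping described above can be sketched as follows. The actual grouping in the thesis was done manually; the synonym table, the choice of which label names each pooled class, and the function name are assumptions for illustration, while the threshold of 10 examples and the "unknown" class come from the text.

```python
from collections import Counter

# Hypothetical synonym table built from the examples in the text. Which label
# names the pooled class is an illustrative assumption.
SYNONYMS = {
    "cheering": "cheer",
    "card reader beep": "barcode reader beep",
}


def group_event_labels(raw_labels, synonyms, min_count=10, unknown_label="unknown"):
    """Map freely chosen annotation labels to a fixed set of event classes.

    Step 1: pool labels describing the same or very similar sounds.
    Step 2: keep classes with at least `min_count` instances and collect
    rarer classes into a single `unknown_label` class.
    Returns one class label per annotated instance, in input order.
    """
    pooled = [synonyms.get(label, label) for label in raw_labels]
    counts = Counter(pooled)
    return [label if counts[label] >= min_count else unknown_label for label in pooled]


# Hypothetical raw annotations: 6 + 5 cheers pool into one class of 11 instances,
# while the 3 "dog barking" instances fall below the threshold.
raw = ["cheer"] * 6 + ["cheering"] * 5 + ["dog barking"] * 3
classes = group_event_labels(raw, SYNONYMS)
print(Counter(classes))
```

Pooling before thresholding matters here: neither "cheer" (6) nor "cheering" (5) alone reaches 10 examples, but the pooled class does.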

Figure 4.3

Event activity statistics for the TUT-SED 2009 dataset. Event activity is presented as the percentage of active event time relative to the overall duration. The upper panel shows overall event activity, while the lower panel shows scene-wise event activity.
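The activity percentages shown in Figure 4.3 relate active event time to the overall duration. A minimal sketch of such a computation is given below. It assumes that overlapping instances of the same class are merged before measuring active time, which the caption does not specify, and the function name is hypothetical.

```python
from collections import defaultdict


def activity_percentages(events, total_duration):
    """Percentage of the total duration during which each class is active.

    events: iterable of (label, onset, offset) in seconds.
    Overlapping instances of the same class are merged first (an assumption),
    so simultaneous instances of one class are not counted twice.
    """
    by_class = defaultdict(list)
    for label, onset, offset in events:
        by_class[label].append((onset, offset))
    result = {}
    for label, intervals in by_class.items():
        intervals.sort()
        active = 0.0
        current_start, current_end = intervals[0]
        for start, end in intervals[1:]:
            if start <= current_end:  # overlaps or touches the current run
                current_end = max(current_end, end)
            else:  # gap: close the current run and start a new one
                active += current_end - current_start
                current_start, current_end = start, end
        active += current_end - current_start
        result[label] = 100.0 * active / total_duration
    return result


# Hypothetical 10-second annotation: two overlapping "speech" instances, one "car".
print(activity_percentages([("speech", 0, 4), ("speech", 2, 6), ("car", 5, 7)], 10))
```

Because different classes are measured independently, the percentages can sum to more than 100% in polyphonic material.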

TUT-SED 2016

The creation of the TUT Sound Events 2016 dataset (TUT-SED 2016) was motivated by the lack of an open dataset with high acoustic variability [Mesaros2016]. The data collection was carried out in 2015-2016 under the Everysound project funded by the European Research Council, and the recording locations were in Finland. To ensure high acoustic variability in the captured audio, each recording was made in a different location: different streets, different homes. Compared to the TUT-SED 2009 dataset, the TUT-SED 2016 dataset has two scene classes (indoor home environments and outdoor residential areas) and larger acoustic variability within the scene classes. The recording setup was similar to that of TUT-SED 2009: a binaural in-ear microphone setup with the same microphone model and the same digital recorder with the same format settings (44.1 kHz and 24-bit). The recording duration was set to 3-5 minutes, as this was considered the most likely length that someone would record in a real use case. The person recording was required to keep body and head movement to a minimum during the recording to enable the possible use of the spatial information present in binaural recordings. Furthermore, the person was instructed to keep the amount of his/her own speech to a minimum to avoid near-field speech.

The sound events in the recordings were manually annotated with onset and offset timestamps and a freely chosen event label. A noun-verb pair was used as the event label during annotation (e.g. “people; talking” and “car; passing”): nouns characterize the sound source, and verbs characterize the sound production mechanism. Recordings and annotations were made by two research assistants, each annotating the material he/she recorded, and they were instructed to annotate all audible sound events in the scene. In the post-processing stage, the recordings were annotated for microphone failures and interference noises caused by mobile phones, and this information was stored as extra metadata. The sound event classes used in the published dataset were selected based on their frequency in the raw annotations and the number of different recordings they appeared in. Event labels that were semantically similar given the context were mapped together to ensure distinct classes. For example, “car engine; running” and “engine; running” were mapped together, and various impact sounds such as “banging” and “clacking” were grouped together under “object impact”. This resulted in a total of 18 sound classes, each having a sufficient number of examples for learning acoustic models.

The dataset was used in the DCASE 2016 Challenge task on sound event detection in real-life audio [Mesaros2018], and the data was released as two datasets: a development dataset and an evaluation dataset. The development dataset was bundled with a 4-fold cross-validation setup, while the evaluation dataset was originally released without reference annotations and later updated with the reference annotations after the evaluation campaign.
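The fold definitions themselves are distributed with the development dataset; the sketch below only illustrates how a k-fold setup of this kind is typically used. It assumes a hypothetical mapping from recording identifiers to fold numbers, with each whole recording assigned to exactly one fold, so every recording is used for testing once and never appears in training and testing within the same fold.

```python
def cross_validation_splits(fold_of_recording, n_folds=4):
    """Yield (fold, train_recordings, test_recordings) for k-fold cross-validation.

    fold_of_recording: hypothetical dict mapping recording id -> fold in 1..n_folds.
    Each fold is tested once, with the remaining folds used for training.
    """
    for fold in range(1, n_folds + 1):
        test = sorted(r for r, f in fold_of_recording.items() if f == fold)
        train = sorted(r for r, f in fold_of_recording.items() if f != fold)
        yield fold, train, test


# Hypothetical fold assignment for five recordings.
folds = {"a": 1, "b": 2, "c": 3, "d": 4, "e": 1}
for fold, train, test in cross_validation_splits(folds):
    print(fold, train, test)
```

Results are then typically accumulated over the four test folds before computing the final metric.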

During the data collection campaign, a large number of recordings from 10 scene types was collected, and only a small subset was published in the TUT-SED 2016 dataset [TUTSED2016DEV, TUTSED2016EVAL]. Later, more material was annotated in a similar fashion and released as the TUT-SED 2017 dataset [TUTSED2017DEV, TUTSED2017EVAL] for the DCASE 2017 challenge [Mesaros2019b]. This dataset contained recordings from a single scene class (street) and had a relatively small number of target sound event classes (6). The rest of the material was released without sound event annotations as datasets for acoustic scene classification tasks: TUT Acoustic Scenes 2016 (TUT-ASC 2016) [TUTASC2016DEV, TUTASC2016EVAL] for the DCASE 2016 challenge [Mesaros2016] and TUT Acoustic Scenes 2017 (TUT-ASC 2017) [TUTASC2017DEV, TUTASC2017EVAL] for the DCASE 2017 challenge [Mesaros2019b].

Evaluation

The quantitative evaluation of SED system performance is done by comparing the system output with a reference available for the test data. For the datasets used in this thesis, the reference was created by manually annotating the audio material (see the annotation procedures described in the Audio Datasets section) and storing the annotations as a list of sound event instances, each with an associated textual label and temporal information (onset and offset timestamps). The evaluation takes into account both the label and the temporal information. With monophonic annotations and monophonic SED systems, the evaluation is straightforward, as the system output at a given time is correct if the predicted event class coincides with the reference class. With polyphonic annotations and polyphonic SED systems, however, the reference can contain multiple active sound events at a given time, and there can be multiple correctly and erroneously detected events at the same time instance. All these cases must be accounted for in the metric. The evaluation metrics for polyphonic SED can be categorized into segment-based and event-based metrics depending on how the temporal information is handled in the evaluation. This section is based on the work published in [Heittola2013a, Heittola2011, Mesaros2016b], and it addresses the research question of how to evaluate sound event detection systems with polyphonic output.

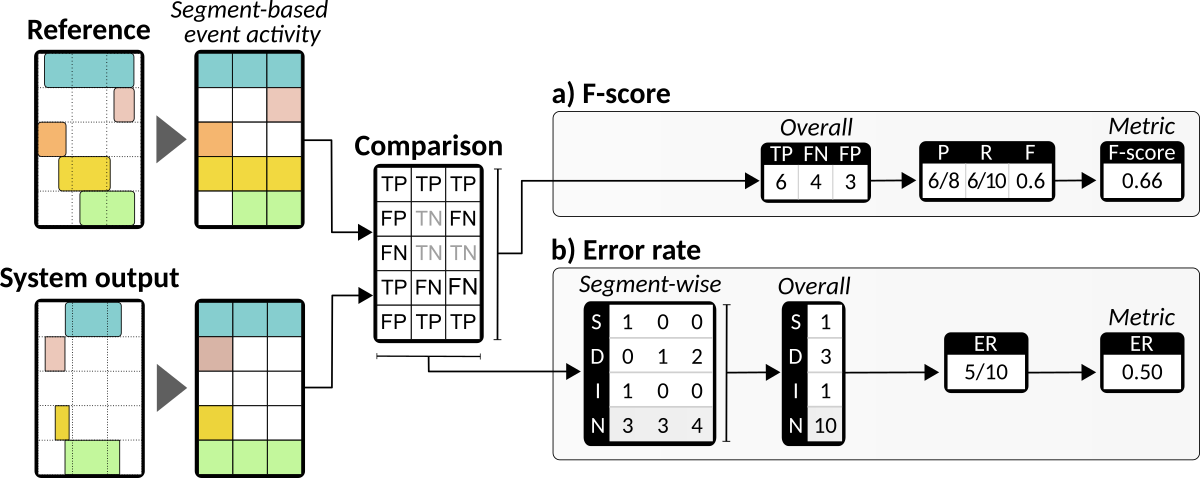

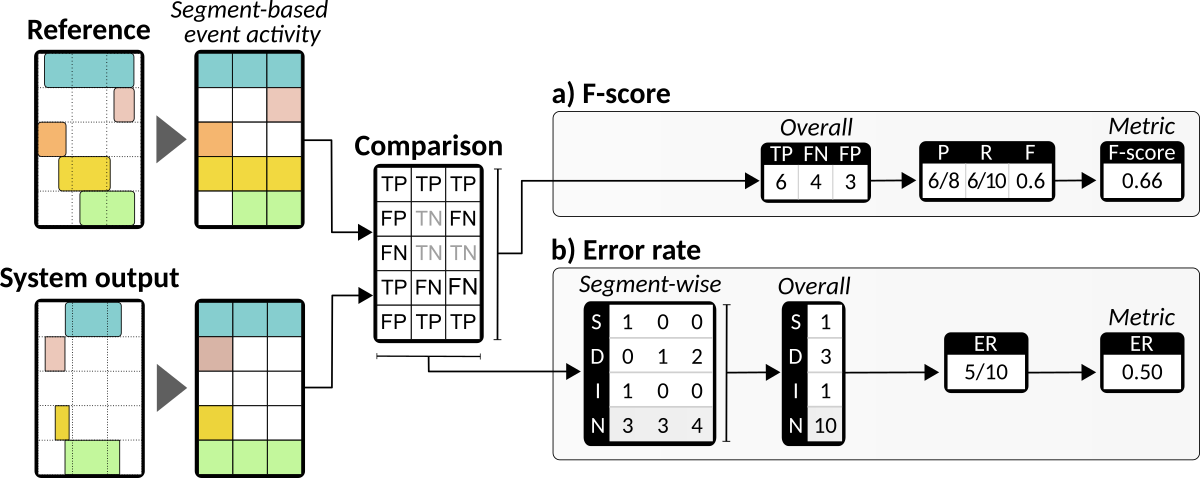

Segment-Based Metrics

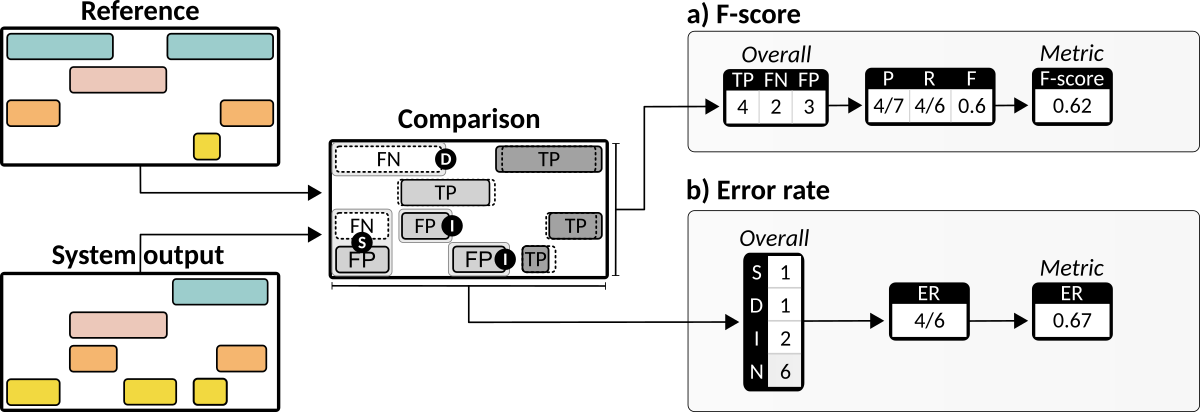

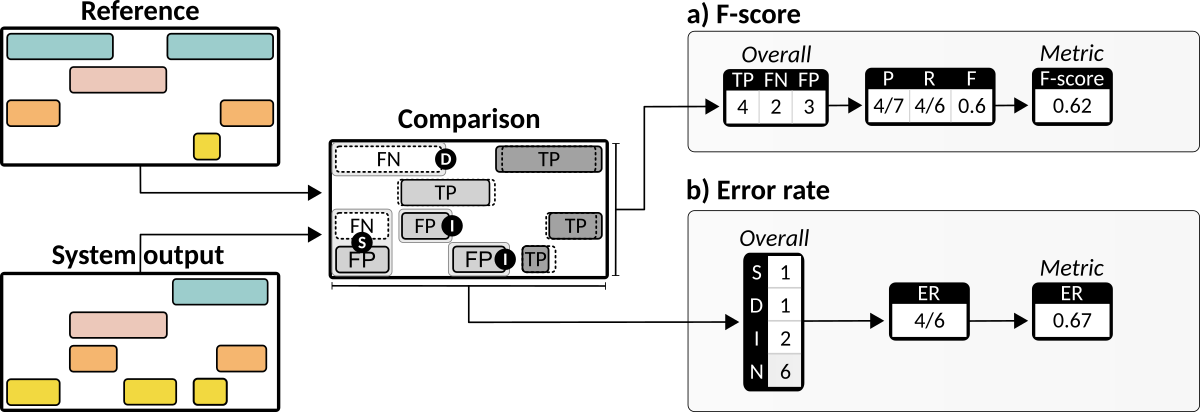

The first segment-based metric for polyphonic sound event detection, called the block-wise F-score, was introduced and used in . In [Mesaros2016a], this metric was formalized as the segment-based F-score, and the segment-based error rate (ER) was introduced to complement it. These metrics have since become standard metrics in the research field, and they have been used as ranking criteria in many DCASE challenge tasks. In this thesis, the segment-based F-score is used as the performance measure in , and the segment-based ER is used as the metric in . In segment-based evaluation, the intermediate statistics for the metric are calculated on a fixed time grid, often consisting of one-second segments. An example of the metric calculation is shown in Figure 4.4.

Figure 4.4

Calculation of two segment-based metrics: F-score and error rate. Comparisons are made at the level of fixed time segments, where both the reference annotations and the system output are rounded to the same time resolution. Binary event activities in each segment are then compared, and intermediate statistics are calculated.

The sound event activity is compared between the reference annotation and the system output in fixed one-second segments. An event is considered correctly detected if both the reference and the output indicate event activity; this case is referred to as a true positive. If the system output indicates an event to be active within the segment but the reference annotation indicates it to be inactive, the output is considered a false positive within that segment. Conversely, if the reference indicates the event to be active within the segment and the system output indicates inactivity for the same event class, the output is considered a false negative. The total counts of true positives, false positives, and false negatives are denoted \(TP\), \(FP\), and \(FN\).
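The per-segment comparison described above can be sketched in Python as follows. This is a minimal illustration, not the implementation used in the thesis: it assumes events are stored as hypothetical `(onset, offset, label)` tuples in seconds, and it assumes the rounding convention that an event is active in every segment it overlaps (onset rounded down, offset rounded up). The function names `event_roll` and `segment_counts` are our own.

```python
import math
from typing import List, Set, Tuple

Event = Tuple[float, float, str]  # (onset in seconds, offset in seconds, label)


def event_roll(events: List[Event], n_segments: int,
               seg_len: float = 1.0) -> List[Set[str]]:
    """Set of active class labels in each fixed-length segment.

    Assumption: an event is active in every segment it overlaps, i.e. the
    onset is rounded down and the offset rounded up to the segment grid.
    """
    roll: List[Set[str]] = [set() for _ in range(n_segments)]
    for onset, offset, label in events:
        first = math.floor(onset / seg_len)
        last = math.ceil(offset / seg_len)  # exclusive upper bound
        for k in range(max(first, 0), min(last, n_segments)):
            roll[k].add(label)
    return roll


def segment_counts(ref_roll: List[Set[str]],
                   est_roll: List[Set[str]]) -> List[Tuple[int, int, int]]:
    """Per-segment (TP, FP, FN) from binary class activities."""
    counts = []
    for ref, est in zip(ref_roll, est_roll):
        tp = len(ref & est)  # active in both reference and output
        fp = len(est - ref)  # active in output only
        fn = len(ref - est)  # active in reference only
        counts.append((tp, fp, fn))
    return counts


ref = [(0.0, 2.5, "car"), (1.2, 1.8, "speech")]
est = [(0.3, 1.9, "car"), (1.0, 3.0, "bird")]
counts = segment_counts(event_roll(ref, 3), event_roll(est, 3))
print(counts)  # [(1, 0, 0), (1, 1, 1), (0, 1, 1)]
```

Summing the tuples over segments gives the totals \(TP\), \(FP\), and \(FN\) used in the equations that follow.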

F-score

The segment-based F-score is calculated by first accumulating the intermediate statistics over the evaluated segments for each class and then summing them over all classes to obtain overall intermediate statistics (instance-based metric, micro-averaging). The precision \(P\) and recall \(R\) are calculated from the overall statistics as

$$ \label{eq-segment-based-precision-and-recall}

P = \frac{{TP}}{{TP}+{FP}}\,\,,\quad R = \frac{{TP}}{{TP}+{FN}} \tag{4.1} $$

and the F-score:

$$ \label{eq-segment-based-fscore}

{{F}}=\frac{2\cdot{P}\cdot{R}}{{P}+{R}}=\frac{2\cdot{TP}}{2\cdot{TP}+{FP}+{FN}} \tag{4.2} $$

The calculation process is illustrated in panel (a) of Figure 4.4.
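As a worked illustration of Equations 4.1 and 4.2 with micro-averaging, the sketch below sums hypothetical class-wise counts into overall counts before computing precision, recall, and F-score. Reporting an undefined ratio (zero denominator) as 0.0 is our own assumption, not part of the definition.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (TP, FP, FN)


def precision_recall_f(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F-score (Eqs. 4.1 and 4.2).

    Assumption: an undefined ratio (zero denominator) is reported as 0.0.
    """
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    denom = 2 * tp + fp + fn
    f = 2 * tp / denom if denom else 0.0
    return p, r, f


def micro_f(class_counts: Dict[str, Counts]) -> Tuple[float, float, float]:
    """Sum class-wise counts into overall counts, then score (micro-average)."""
    tp = sum(c[0] for c in class_counts.values())
    fp = sum(c[1] for c in class_counts.values())
    fn = sum(c[2] for c in class_counts.values())
    return precision_recall_f(tp, fp, fn)


# Hypothetical counts accumulated over all evaluated segments.
class_counts = {"car": (4, 1, 1), "speech": (2, 1, 3)}
p, r, f = micro_f(class_counts)
print(round(p, 3), round(r, 3), round(f, 3))  # 0.75 0.6 0.667
```

Here \(TP=6\), \(FP=2\), \(FN=4\), so both forms of Equation 4.2 give \(12/18 \approx 0.667\).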

The F-score is a widely known and easily understood metric, and for this reason it is often the preferred metric for SED evaluation. With micro-averaging, however, the magnitude of the F-score is largely determined by the number of true positives, which is dominated by the system performance on the large classes. When classes are imbalanced, it may be preferable to use class-based averaging (macro-averaging) as the overall performance measure: the F-score is calculated for each class from the class-wise intermediate statistics, and the class-wise F-scores are then averaged into a single value. However, this requires all classes to be present in the test material, to avoid classes with undefined recall (\(TP + FN = 0\)). This calls for extra attention when designing experiments in a train/test setting, especially when using recordings from uncontrolled everyday environments. In this thesis, the segment-based F-score is used with two different segment lengths, denoted \(F_{seg,1sec}\) for 1-second segments and \(F_{seg,30sec}\) for 30-second segments.
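The difference between the two averaging schemes can be illustrated with a small sketch on hypothetical counts: one frequent, well-detected class and one rare, poorly detected class. Raising an error for a class with \(TP + FN = 0\) is our own choice for making the undefined-recall case explicit.

```python
from typing import Dict, Tuple

Counts = Tuple[int, int, int]  # (TP, FP, FN) per class


def micro_f(class_counts: Dict[str, Counts]) -> float:
    """F-score from counts summed over classes (instance-based)."""
    tp = sum(c[0] for c in class_counts.values())
    fp = sum(c[1] for c in class_counts.values())
    fn = sum(c[2] for c in class_counts.values())
    return 2 * tp / (2 * tp + fp + fn)


def macro_f(class_counts: Dict[str, Counts]) -> float:
    """Mean of class-wise F-scores (class-based)."""
    scores = []
    for label, (tp, fp, fn) in class_counts.items():
        if tp + fn == 0:
            # Class absent from the reference: recall is undefined.
            raise ValueError(f"class {label!r} has no reference activity")
        scores.append(2 * tp / (2 * tp + fp + fn))
    return sum(scores) / len(scores)


# Hypothetical counts: one frequent class and one rare, poorly detected class.
counts = {"traffic": (90, 10, 10), "dog": (1, 5, 9)}
print(round(micro_f(counts), 4))  # 0.8426
print(round(macro_f(counts), 4))  # 0.5125
```

The micro-average stays high because it is dominated by the frequent class, whereas the macro-average exposes the poor performance on the rare class (class-wise F-scores 0.9 and 0.125).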

Error Rate

Error rate (ER) measures the number of errors in terms of substitutions (\(S\)), insertions (\(I\)), and deletions (\(D\)), which are calculated segment by segment. True positives, false positives, and false negatives are first counted in each segment, and substitutions, insertions, and deletions are then derived from these counts. In a segment \(k\), the number of substitution errors \(S\left ( k \right )\) is defined as the number of reference events for which the system output an event with an incorrect label. Such a case produces one false positive and one false negative in the segment; substitution errors are therefore calculated by pairing false positives with false negatives, without designating which erroneous event substitutes which. Once the substitution errors in a segment are counted, the remaining false positives are counted as insertion errors \(I\left ( k \right )\) and the remaining false negatives as deletion errors \(D\left ( k \right )\). Insertion errors are thus attributed to segments with incorrect event activity in the system output, and deletion errors to segments with event activity in the reference but not in the system output. This can be formulated as follows:

$$ \begin{aligned}

S\left ( k \right ) &= \min \left ( FN\left ( k \right ), FP\left ( k\right ) \right ) \\

D\left ( k \right ) &= \max \left ( 0, FN\left ( k\right ) - FP\left ( k\right )\right ) \\

I\left ( k \right ) &= \max \left ( 0, FP\left ( k\right ) - FN\left ( k\right )\right ) \label{eq-segment-based-er-intermediate}\end{aligned} \tag{4.3} $$
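Equation 4.3 translates directly into a few lines of Python. In the sketch below, the function name `segment_errors` and the example segment are hypothetical; the point is that every false negative ends up as either a substitution or a deletion, and every false positive as either a substitution or an insertion.

```python
from typing import Tuple


def segment_errors(fn_k: int, fp_k: int) -> Tuple[int, int, int]:
    """Substitutions, deletions and insertions in one segment (Eq. 4.3)."""
    s = min(fn_k, fp_k)      # pair FNs with FPs without deciding which replaces which
    d = max(0, fn_k - fp_k)  # unpaired false negatives
    i = max(0, fp_k - fn_k)  # unpaired false positives
    return s, d, i


# Hypothetical segment: reference {car, speech}, system output {bird}.
ref_k, est_k = {"car", "speech"}, {"bird"}
fn_k, fp_k = len(ref_k - est_k), len(est_k - ref_k)
print(segment_errors(fn_k, fp_k))  # (1, 1, 0)
```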

The error rate is then calculated by summing the segment-wise counts of \(S\), \(D\), and \(I\) over all \(K\) evaluated segments and normalizing by the total number of active reference events, where \(N(k)\) is the number of active reference events in segment \(k\) [Poliner2006]:

$$ \label{eq-segment-based-er}

ER=\frac{\sum_{k=1}^K{S(k)}+\sum_{k=1}^K{D(k)}+\sum_{k=1}^K{I(k)}}{\sum_{k=1}^K{N(k)}} \tag{4.4} $$

The metric calculation is illustrated in panel (b) of Figure 4.4.

The total error rate is commonly used to evaluate system performance in speech recognition and speaker diarization, and its parallel use in SED makes the metric more approachable for many researchers. On the other hand, the error rate can be difficult to interpret, as the value is a score rather than a percentage, and it can exceed 1 when the total number of errors exceeds the number of reference events. An error rate of exactly 1.0 is also trivial to achieve with a system that outputs no active events, since every reference event is then counted as a deletion. Therefore, additional metrics, such as the segment-based F-score, should be used together with ER to obtain a more comprehensive performance estimate for the system.
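The following sketch computes the segment-based ER of Equation 4.4 from per-segment sets of active labels and reproduces the two properties discussed above: a silent system scores exactly 1.0, and a system that detects every reference event but adds many extra events can score above 1. The segment contents are hypothetical, and the function name `error_rate` is our own.

```python
from typing import List, Set


def error_rate(ref_roll: List[Set[str]], est_roll: List[Set[str]]) -> float:
    """Segment-based ER (Eqs. 4.3 and 4.4) from per-segment active label sets."""
    errors = 0
    n_ref = 0
    for ref, est in zip(ref_roll, est_roll):
        fn = len(ref - est)
        fp = len(est - ref)
        s = min(fn, fp)
        d = max(0, fn - fp)
        i = max(0, fp - fn)
        errors += s + d + i
        n_ref += len(ref)  # N(k): active reference events in segment k
    if n_ref == 0:
        raise ValueError("no active reference events: ER is undefined")
    return errors / n_ref


ref = [{"car"}, {"car", "speech"}, {"car"}]
good = [{"car"}, {"car", "bird"}, {"bird"}]
silent = [set(), set(), set()]              # system that outputs nothing
noisy = [r | {"bird", "dog"} for r in ref]  # detects everything, plus 2 extras
print(error_rate(ref, good))    # 0.5
print(error_rate(ref, silent))  # 1.0
print(error_rate(ref, noisy))   # 1.5
```

The "noisy" system has no substitutions or deletions at all, yet its six insertions over four reference events push the error rate to 1.5, which is why ER is best read alongside the F-score.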

Event-Based Metrics

The event-based F-score and error rate are used as metrics in [Mesaros2016]. In these metrics, the system output and the reference annotation are compared event by event: intermediate statistics (true positives, false positives, and false negatives) are counted based on event instances. An event in the system output is regarded as correctly detected (true positive) if it overlaps in time with an event in the reference annotation that has the same label, and its onset and offset meet specified conditions. An event in the system output without a corresponding reference event under the onset and offset conditions is regarded as a false positive, whereas an event in the reference annotation without a corresponding event in the system output is regarded as a false negative.

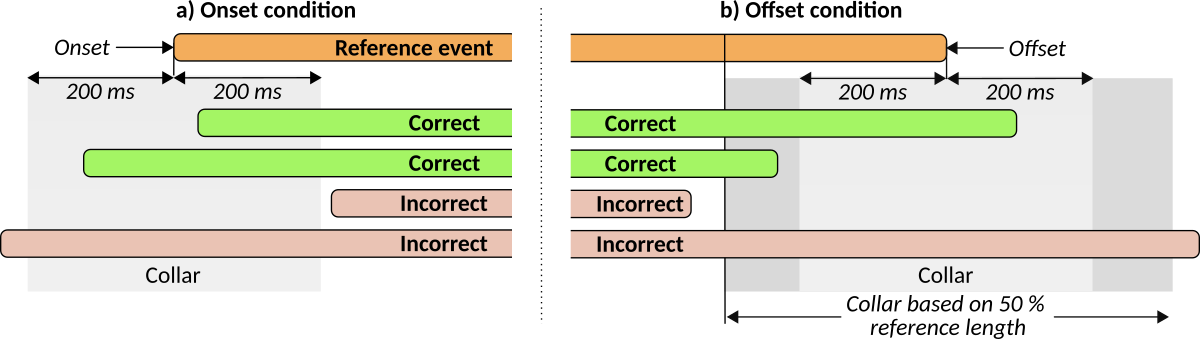

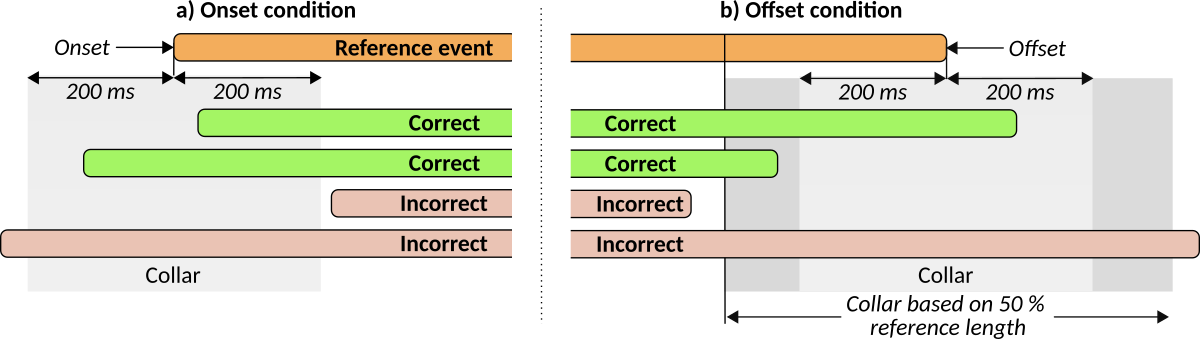

For a true positive, the positions of event onsets and offsets are compared using a temporal collar, which allows some tolerance and sets the desired evaluation resolution. Manually created reference annotations have some level of subjectivity in the temporal positions of onsets and offsets (see the discussion of annotations), and the temporal tolerance can be used to alleviate the effect of this subjectivity in the evaluation. In [Mesaros2016], a collar of 200 ms was used, while a more permissive collar of 500 ms was used, for example, in the DCASE challenge task for rare sound event detection [Mesaros2019b]. The offset condition is more permissive than the onset condition, as the exact offset timestamp is often less important than the onset in SED applications. To cover the differences between short and long events, the collar for the offset condition adapts to the event length: it is the maximum of the fixed 200 ms collar and 50% of the reference event duration. Evaluation of event instances based on these conditions is shown in Figure 4.5.

Figure 4.5

A reference event and system output events with the same event label, compared using the onset condition and the offset condition.

The evaluation can be based solely on the onset condition or on both the onset and offset conditions, depending on which aspects of system performance need to be evaluated. In , both conditions are used together.
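The onset and offset conditions can be sketched as simple predicates. This is a minimal illustration, assuming events are hypothetical `(onset, offset, label)` tuples in seconds and using the 200 ms collar and 50% offset rule described above; the function names and the `use_offset` switch are our own.

```python
from typing import Tuple

Event = Tuple[float, float, str]  # (onset s, offset s, label)


def onset_ok(ref: Event, est: Event, t_collar: float = 0.2) -> bool:
    """Onset condition: onsets within a fixed collar (200 ms by default)."""
    return abs(ref[0] - est[0]) <= t_collar


def offset_ok(ref: Event, est: Event, t_collar: float = 0.2,
              percentage: float = 0.5) -> bool:
    """Offset condition: collar is max(t_collar, percentage * reference duration)."""
    collar = max(t_collar, percentage * (ref[1] - ref[0]))
    return abs(ref[1] - est[1]) <= collar


def is_true_positive(ref: Event, est: Event, use_offset: bool = True) -> bool:
    """Same label and onset condition; optionally also the offset condition."""
    if ref[2] != est[2] or not onset_ok(ref, est):
        return False
    return offset_ok(ref, est) if use_offset else True


long_ref = (1.0, 5.0, "speech")  # offset collar = max(0.2, 2.0) = 2.0 s
short_ref = (1.0, 1.2, "door")   # offset collar = max(0.2, 0.1) = 0.2 s
print(is_true_positive(long_ref, (1.1, 6.5, "speech")))                  # True
print(is_true_positive(short_ref, (1.1, 1.5, "door")))                   # False
print(is_true_positive(short_ref, (1.1, 1.5, "door"), use_offset=False))  # True
```

The same 300 ms offset error would be accepted for a long event but rejected for a short one, which is the intended effect of the length-dependent offset collar.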

The event-based F-score is calculated in the same way as the segment-based F-score: the event-based intermediate statistics (\(TP\), \(FP\), and \(FN\)) are counted and summed to obtain overall counts, and precision, recall, and F-score are calculated using Equations 4.1 and 4.2. As with the segment-based F-score, the event-based F-score can be calculated from total counts (instance-based, micro-average) or from class-wise performance (class-based, macro-average). The metric calculation is illustrated in Figure 4.6 panel (a).

The event-based error rate is defined with respect to the number of reference sound event instances. Substitutions are defined differently than in the segment-based error rate: events with the correct temporal position but an incorrect class label are counted as substitutions, whereas insertions and deletions are assigned to the events in the system output and in the reference, respectively, that are not accounted for as correct or substituted. The overall metric is calculated from these error counts in the same way as the segment-based metric in Equation 4.4, using total counts instead of segment-wise sums. The metric calculation is illustrated in Figure 4.6 panel (b).
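A minimal sketch of the event-based counts is given below. It assumes a simple greedy one-to-one assignment: a first pass pairs same-label events that satisfy the onset and offset conditions (true positives), and a second pass pairs the leftover events that satisfy the temporal conditions regardless of label (substitutions). The greedy order is our own simplification; evaluation toolkits may use a different or optimal assignment, so results can differ in edge cases. All names and data are hypothetical.

```python
from typing import List, Tuple

Event = Tuple[float, float, str]  # (onset s, offset s, label)


def temporal_match(ref: Event, est: Event, t_collar: float = 0.2,
                   percentage: float = 0.5) -> bool:
    """Onset and offset conditions, ignoring the label."""
    off_collar = max(t_collar, percentage * (ref[1] - ref[0]))
    return (abs(ref[0] - est[0]) <= t_collar
            and abs(ref[1] - est[1]) <= off_collar)


def _greedy_pairs(ref_events, est_events, ref_left, est_left, require_label):
    """Greedily pair remaining events one-to-one; removes paired indices."""
    n = 0
    for i in list(ref_left):
        for j in est_left:
            if require_label and ref_events[i][2] != est_events[j][2]:
                continue
            if temporal_match(ref_events[i], est_events[j]):
                ref_left.remove(i)
                est_left.remove(j)
                n += 1
                break  # stop iterating est_left right after modifying it
    return n


def event_based_scores(ref_events: List[Event], est_events: List[Event]):
    """Event-based F-score and ER plus counts (TP, FP, FN, S, D, I)."""
    if not ref_events:
        raise ValueError("no reference events: ER is undefined")
    ref_left = list(range(len(ref_events)))
    est_left = list(range(len(est_events)))
    tp = _greedy_pairs(ref_events, est_events, ref_left, est_left, True)
    fp, fn = len(est_events) - tp, len(ref_events) - tp
    f = 2 * tp / (2 * tp + fp + fn)
    # Leftover pairs with correct timing but a different label: substitutions.
    s = _greedy_pairs(ref_events, est_events, ref_left, est_left, False)
    d, i = len(ref_left), len(est_left)
    er = (s + d + i) / len(ref_events)
    return f, er, (tp, fp, fn, s, d, i)


ref = [(0.5, 2.0, "car"), (3.0, 3.4, "door"), (5.0, 9.0, "speech")]
est = [(0.6, 2.3, "car"), (3.05, 3.5, "dog"), (5.1, 7.5, "speech"),
       (10.0, 11.0, "bird")]
f, er, c = event_based_scores(ref, est)
print(c)                           # (2, 2, 1, 1, 0, 1)
print(round(f, 3), round(er, 3))   # 0.571 0.667
```

The "dog" output sits at the right time for the "door" reference, so it counts as one substitution rather than one insertion plus one deletion.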

Figure 4.6

Calculation of two event-based metrics: F-score and error rate. The F-score is calculated from the overall intermediate statistics. The error rate counts the total number of errors of each type (substitutions, insertions, and deletions).

In comparison to the segment-based metrics, the event-based metrics usually give lower performance values, because matching onsets and offsets is generally harder than matching the overall activity of an event. The event-based metrics measure the ability of the system to detect the correct event in the right temporal position, acting as a measure of onset/offset detection capability. Event-based metrics are therefore the recommended choice for applications where detecting the onsets and offsets of sounds is essential.

Legacy Metrics

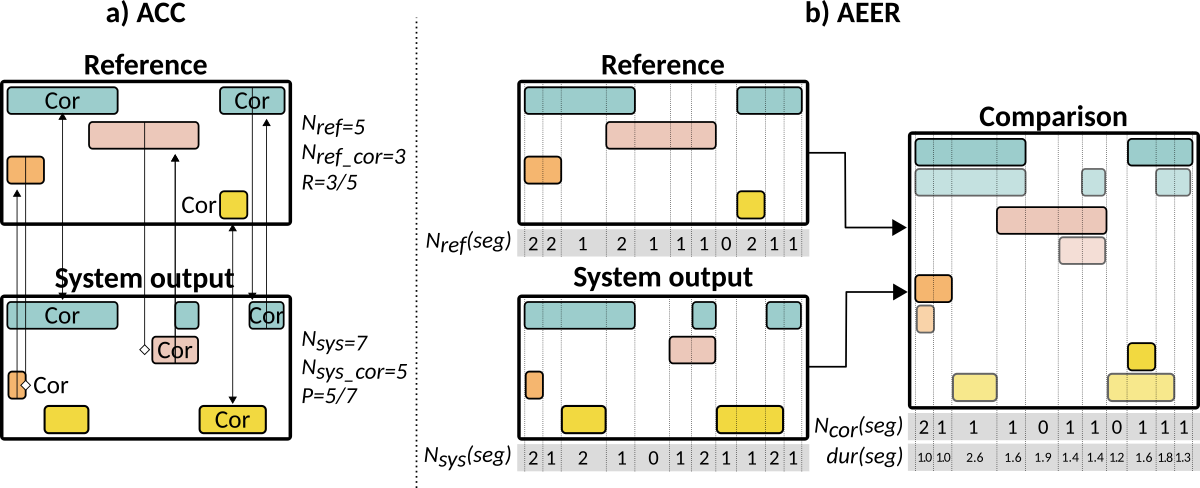

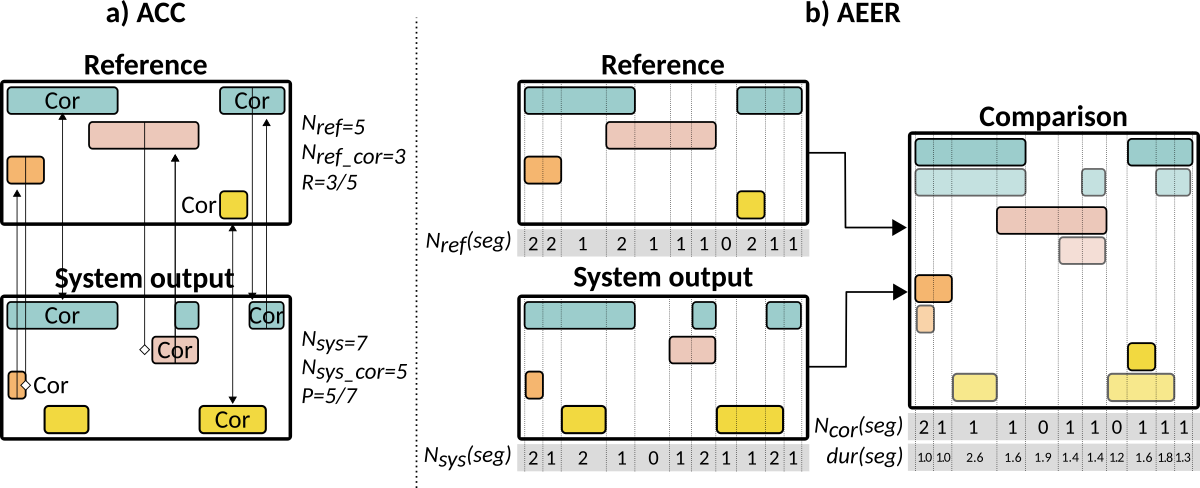

Earlier works included in this thesis, , used metrics defined for the CLEAR 2006 and CLEAR 2007 evaluation campaigns (Andrey Temko et al. 2009). These metrics were evaluated only for known non-speech events, and therefore “speech” and “unknown” events were excluded from the calculations. The first metric originating from the CLEAR campaign was defined as a balanced F-score and denoted by ACC. In the evaluation, an outputted sound event was considered correctly detected if its temporal center lay between the timestamps of a reference event of the same event class, or if there existed at least one reference event of the same class whose temporal center lay between the timestamps of the outputted event. Conversely, a reference event was considered correctly detected if there was at least one outputted event of the same class whose temporal center lay between the timestamps of the reference event, or if the temporal center of the reference event lay between the timestamps of at least one outputted event of the same class. The calculation process of the intermediate statistics for the metric is illustrated in Figure 4.7 panel a. The metric was defined as

$$ ACC = \frac{2\cdot P\cdot R}{P+R} \label{eq-f-score} \tag{4.5} $$

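with the precision \(P\) and the recall \(R\) defined as

$$ P = \frac{N_{sys\_cor}}{N_{sys}}\,\,,\quad R = \frac{N_{ref\_cor}}{N_{ref}} \label{eq-CLEAR-precision-and-recall} \tag{4.6} $$

where \(N_{sys\_cor}\) is the number of correctly detected events in the system output, \(N_{sys}\) the total number of events in the system output, \(N_{ref\_cor}\) the number of correctly detected reference events, and \(N_{ref}\) the total number of reference events.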

Figure 4.7

Calculation of intermediate statistics for two legacy metrics: ACC and AEER.

The second metric originating from the CLEAR campaign took the temporal resolution of the outputted sound events into account, using a metric adapted from the speaker diarization task. This metric, the acoustic event error rate (AEER), is expressed as a percentage of time [Temko2009a]. It computes intermediate statistics in adjacent time segments delimited by the onsets and offsets of both the reference and the system output events. In each segment (\(seg\)), the numbers of reference and system output events (\(N_{ref}\) and \(N_{sys}\)) are counted, along with the number of correctly outputted events \(N_{cor}\). The intermediate statistics calculation for AEER is illustrated in Figure 4.7 panel b. The overall AEER score is calculated as the fraction of time that is not attributed correctly to a sound event:

$$ AEER = \frac{\sum\limits_{seg}\left \{ \text{dur}(seg)\cdot\left(\max(N_{\text{ref}},N_{\text{sys}})-N_{\text{cor}}\right)\right \}}{\sum\limits_{seg}\left \{ \text{dur}(seg)\cdot N_{\text{ref}}\right \}}\label{ch4:eq:AEER} \tag{4.7} $$

where \(\text{dur}(seg)\) is the duration of the segment.
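A minimal sketch of Equation 4.7 is shown below. The segments are delimited by every onset and offset in the reference and system output, so event activity is constant within each segment. As a simplifying assumption of ours, \(N_{cor}\) is taken to be the number of system output events in the segment whose class matches an active reference event (a multiset intersection of labels); the event data are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Event = Tuple[float, float, str]  # (onset s, offset s, label)


def aeer(ref_events: List[Event], est_events: List[Event]) -> float:
    """AEER (Eq. 4.7) over segments delimited by all onsets and offsets."""
    events = list(ref_events) + list(est_events)
    bounds = sorted({t for ev in events for t in (ev[0], ev[1])})
    num = den = 0.0
    for start, end in zip(bounds[:-1], bounds[1:]):
        mid = 0.5 * (start + end)  # activity is constant inside a segment
        ref = Counter(ev[2] for ev in ref_events if ev[0] <= mid < ev[1])
        hyp = Counter(ev[2] for ev in est_events if ev[0] <= mid < ev[1])
        n_ref, n_sys = sum(ref.values()), sum(hyp.values())
        n_cor = sum((ref & hyp).values())  # assumed: class-matched events
        dur = end - start
        num += dur * (max(n_ref, n_sys) - n_cor)
        den += dur * n_ref
    if den == 0:
        raise ValueError("no reference events: AEER is undefined")
    return num / den


ref = [(0.0, 4.0, "car"), (2.0, 3.0, "door")]
est = [(1.0, 4.0, "car"), (2.0, 3.0, "dog")]
print(f"{100 * aeer(ref, est):.1f} %")  # 40.0 %
```

Here one second is missed at the start of "car" and one second of "door" is mislabelled as "dog", giving 2 erroneous event-seconds out of 5 reference event-seconds. Note that the segment boundaries depend on the system output, which is the property criticized below.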

The ACC metric can be seen as an event-based F-score where the correctness of the events is defined using the centers of events instead of their onsets and offsets. Similarly, the AEER metric can be seen as a segment-based metric calculated in segments of varying size. As a result, these metrics measure different aspects of performance at different time scales. This is problematic: neither of them gives a sufficient performance measure alone, yet using them together is not advisable because of their different definitions. These shortcomings are alleviated by the new segment-based and event-based metrics (F-score and ER) defined in [Mesaros2016b]. These metrics are defined using the same temporal resolution, and although each of them individually still provides only an incomplete view of system performance, they can easily be used together to obtain a more complete view. Moreover, evaluation with AEER is based on non-constant segment lengths determined by the combination of reference events and system output events, so the evaluation segments differ from system to system. The error rate defined in [Mesaros2016b] uses a uniform segment length and simple rules to determine the correctness of the system output in each segment, making the metric easier to understand.