This page presents a slightly revised version of chapter "Computational Audio Content Analysis", from my PhD thesis "Computational Audio Content Analysis in Everyday Environments".

Toni Heittola. Computational Audio Content Analysis in Everyday Environments. PhD thesis, Tampere University, June 2021.

Computational Audio Content Analysis in Everyday Environments

Abstract

Our everyday environments are full of sounds that have a vital role in providing us information and allowing us to understand what is happening around us. Humans have formed strong associations between physical events in their environment and the sounds that these events produce. Such associations are described using textual labels, sound events, and they allow us to understand, recognize, and interpret the concepts behind sounds. Examples of such sound events are dog barking, person shouting or car passing by. This thesis deals with computational methods for audio content analysis of everyday environments. Along with the increased usage of digital audio in our everyday life, automatic audio content analysis has become a more and more pursued ability. Content analysis enables an in-depth understanding of what was happening in the environment when the audio was captured, and this further facilitates applications that can accurately react to the events in the environment. The methods proposed in this thesis focus on sound event detection, the task of recognizing and temporally locating sound events within an audio signal, and include aspects related to development of methods dealing with a large set of sound classes, detection of multiple sounds, and evaluation of such methods. The work presented in this thesis focuses on developing methods that allow the detection of multiple overlapping sound events and robust acoustic model training based on mixture audio containing overlapping sounds. Starting with an HMM-based approach for prominent sound event detection, the work advanced by extending it into polyphonic detection using multiple Viterbi iterations or sound source separation. These polyphonic sound event detection systems were based on a collection of generative classifiers to produce multiple labels for the same time instance, which doubled or in some cases tripled the detection performance. 
As an alternative approach, polyphonic detection was implemented using class-wise activity detectors in which the activity of each event class was detected independently and class-wise event sequences were merged to produce the polyphonic system output. The polyphonic detection increased applicability of the methods in everyday environments substantially. For evaluation of methods, the work proposed a new metric for polyphonic sound event detection which takes into account the polyphony. The new metric, a segment-based F-score, provides rigorous definitions for the correct and erroneous detections, besides being more suitable for comparing polyphonic annotation and polyphonic system output than the previously used metrics and has since become one of the standard metrics in the research field. Part of this thesis includes studying sound events as a constituent part of the acoustic scene based on contextual information provided by their co-occurrence. This information was used for both sound event detection and acoustic scene classification. In sound event detection, context information was used to identify the acoustic scene in order to narrow down the selection of possible sound event classes based on this information, which allowed use of context-dependent acoustic models and event priors. This approach provided moderate yet consistent performance increase across all tested acoustic scene types, and enabled the detection system to be easily expanded to new scenes. In acoustic scene classification, the scenes were identified based on the distinctive and scene-specific sound events detected, with performance comparable to traditional approaches, while the fusion of these two approaches showed a significant further increase in the performance. The thesis also includes significant contribution to the development of tools for open research in the field, such as standardized evaluation protocols, and release of open datasets, benchmark systems, and open-source tools.

Computational Audio Content Analysis

Natural sounds present in environmental audio have diverse acoustic characteristics due to a wide range of possible sound-producing mechanisms, and thus it is common that sounds categorized semantically into the same group have largely varying acoustic characteristics. Natural sounds such as animal vocalizations or footsteps have larger diversity than electronically produced sounds such as alarms and sirens. For general audio content analysis where a wide range of natural sounds is targeted, this poses major difficulty when developing the analysis system.

In a well-defined analysis case where the target sound category has a low level of variation in its acoustic characteristics, one can manually develop a sound detector based on distinguishing characteristics such as sound activity in a specific frequency range (e.g. detecting fire alarms). However, in most practical use cases the analysis system targets a larger set of sounds with wider variations in their acoustic characteristics, which makes manual system development impractical. Computational analysis in this case calls for an extensive set of parameters, acoustic features, to be calculated from the audio signal, and the use of automatic methods such as machine learning [Bishop2006, Murphy2012, Du2013, Goodfellow2016] to learn to differentiate the sound categories based on the calculated parameters. Most of the computational analysis systems presented in the literature use a supervised learning approach where manually labeled sound examples are used to teach the machine learning algorithm to differentiate unknown sounds into target sound categories. The system developer defines sound categories beforehand and collects a sufficient amount of labeled examples from each target sound category to develop and evaluate the system.

Labeling a sufficient amount of examples for supervised learning can sometimes be a laborious process. Active learning approaches can be used to minimize the amount of manual labeling work by letting the learning algorithm select examples for labeling. In this iterative process, the learner selects the best candidates for manual labeling, and these manually labeled examples are then used to improve the learner [Zhao2017, Zhao2018, Zhao2020]. To avoid manual labeling altogether, one can use techniques such as unsupervised learning [Du2013, p. 17] and semi-supervised learning [Du2013, p. 18]. In unsupervised learning, groups of similar examples within the data are discovered and used as training examples for supervised learning. In semi-supervised learning, a small set of manually labeled examples is used to identify similar examples from a larger dataset with unlabeled examples, essentially increasing the amount of usable training material for supervised learning [Zhang2012, Diment2013]. This text concentrates on the supervised machine learning approach and how this approach can be applied to computational audio content analysis.

Content Analysis Systems

In principle, content analysis systems categorize the input audio into predefined sound categories, target sound classes. In the case of multiple target sound classes, the analysis systems can be divided into two groups: systems able to recognize only one sound class at a time and systems able to recognize multiple sound classes at the same time. In the literature these are referred to as multi-class single-label and multi-class multi-label approaches. The number of target sound classes in the analysis systems can vary widely based on the application area, from systems concentrating only on two classes (target sound class versus all the other sounds) to systems recognizing tens of classes. Often the number of classes is limited by the available development data, achievable accuracy, and possible computational requirements.

Classification Versus Detection

If the analysis system outputs information about the temporal activity of the target sounds, the system is said to perform detection. If the analysis system only indicates whether the target sound is present within the analyzed signal, the system is said to perform classification or tagging, depending on whether the system outputs one or multiple classes at the same time. Temporal information contains timestamps for when the sound instance starts and when it ends. In the literature, these timestamps are often referred to as onset and offset times.

Applications

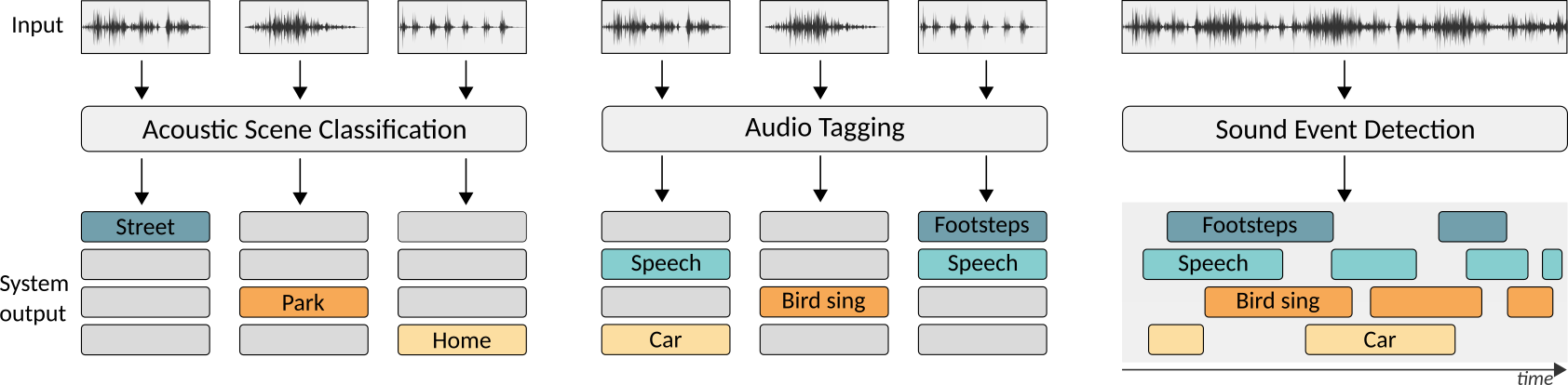

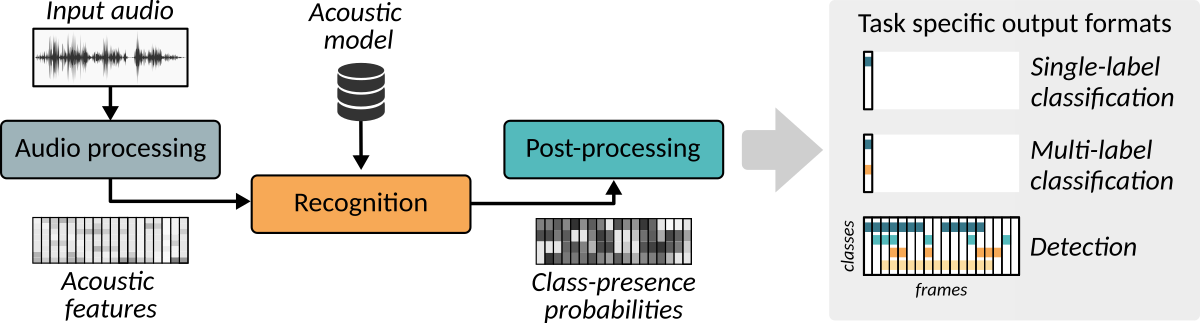

From the application perspective, acoustic scene classification (ASC) is commonly seen as multi-class single-label classification and audio tagging (AT) as multi-class multi-label classification. In sound event detection (SED) applications, multi-class single-label classification is often referred to as monophonic sound event detection and multi-class multi-label classification as polyphonic sound event detection. These application types are illustrated in Figure 3.1.

Figure 3.1 System input and output characteristics for three analysis systems: acoustic scene classification, audio tagging, and sound event detection.
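The difference between the three output types can be made concrete with a small data-structure sketch. The class name `SoundEvent`, the helper `is_polyphonic`, and the example labels and times below are illustrative assumptions, not definitions taken from the thesis.

```python
from dataclasses import dataclass


@dataclass
class SoundEvent:
    label: str
    onset: float   # start time in seconds
    offset: float  # end time in seconds


# Acoustic scene classification: a single label for the whole signal.
scene_label = "busy street"

# Audio tagging: a set of labels for the whole signal, no timing.
tags = {"car passing by", "dog barking"}

# Sound event detection: labels with onset and offset times.
events = [
    SoundEvent("car passing by", 0.5, 4.2),
    SoundEvent("dog barking", 3.0, 3.8),
]


def is_polyphonic(events):
    """Return True if any two events overlap in time."""
    latest_offset = float("-inf")
    for event in sorted(events, key=lambda e: e.onset):
        if event.onset < latest_offset:
            return True
        latest_offset = max(latest_offset, event.offset)
    return False
```

A monophonic detector would only ever produce event lists for which `is_polyphonic` returns `False`, whereas a polyphonic detector may output overlapping events such as the two above.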

Processing Blocks

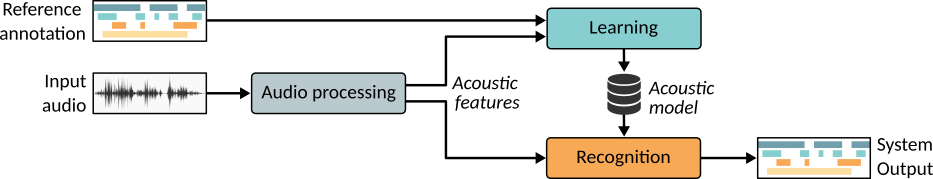

The processing blocks of a typical content analysis system are presented in Figure 3.2.

Figure 3.2 The basic structure of an audio content analysis system.

Input

The input to the system is an audio signal which is captured with a microphone in real-time or read from a stored audio recording.

Audio Processing

The audio processing block performs pre-processing and acoustic feature extraction. Pre-processing is used to enhance characteristics of the audio signal which are essential for robust content analysis or separate target sounds from the background. In the acoustic feature extraction, the signal is represented in a compact form by extracting information sufficient for classifying or detecting target sounds. This usually makes the subsequent data modeling in the learning stage easier with the limited amount of development examples available. Furthermore, the compact feature representation makes the data modeling computationally cheaper.

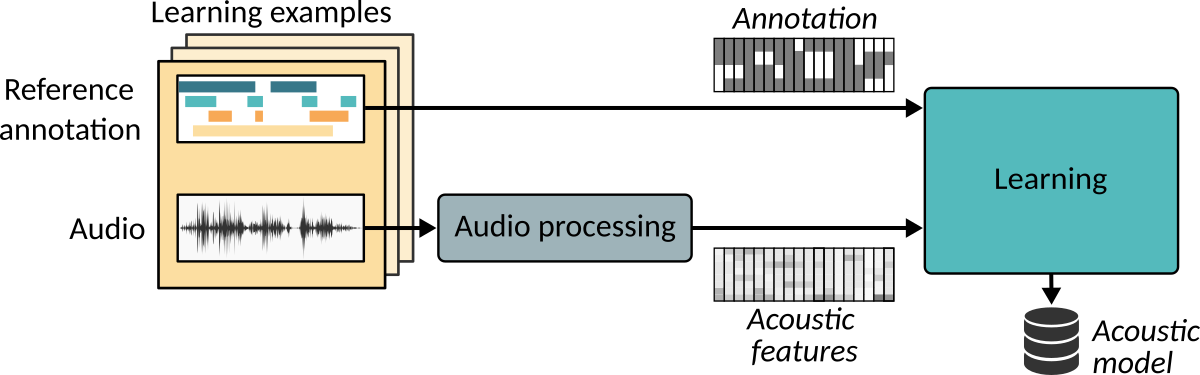

Reference Annotations

During the system training stage, the acoustic features extracted in the audio processing block are used along with reference annotations. For the sound classification task, the reference annotations contain only information about the presence of target sound classes in each learning example, whereas for the sound event detection task onsets and offsets of these sounds may also be available.

Learning

In the learning block, machine learning techniques are used to automatically learn the mapping between acoustic features and class labels defined in the reference annotations. In literature, the learned mapping is referred to as an acoustic model.

Recognition

In the recognition block, the previously learned acoustic models are used to predict class labels for new and previously unseen input audio signal. Depending on the application type, the system is doing either classification, tagging, or detection.

In the following sections, the data acquisition for the system development, and the techniques used in the processing blocks are described in detail. These sections are partly based on the introductory book chapter [Heittola2018] about machine learning approaches for analysis of sound scenes and events published in [Virtanen2018].

Data Acquisition

Audio data and annotations describing the content of this audio data form together an audio dataset suitable for the development of the content analysis system. Data has a critical role in the development of systems based on machine learning techniques, as the performance level of such systems is strongly dependent on the quality and quantity of the data available during the development. Machine learning techniques used in the analysis rely on labeled data to learn parameters of the acoustic models and to evaluate the performance of these acoustic models for the given analysis problem. Acquiring suitable training and testing data is generally the most time-consuming stage of system development.

The target application defines the type of data needed for system development. Generally the aim is to collect acoustic material in conditions which are as close as possible to the envisioned use case of the analysis system. The collected material should contain a selection of representative examples of all sound classes targeted in the system. For example, good material for developing a robust dog barking detector for home surveillance applications should be recorded in various home-related environments (indoor and outdoor, varying room sizes, etc.), with a wide selection of dogs of different breeds (from small to large) barking in as natural a setting as possible and with varying dynamics.

Audio

Most sound sources present in everyday environments have internal variations in their sound-producing mechanism which can be perceived as differences in the produced sound. This leads to high intra-class variability which has to be taken into account when collecting the audio material. The audio examples should be selected to provide good coverage and variability in sufficient quantity [Mesaros2018, p. 149]. Coverage ensures that the material contains examples from all sound classes relevant to the target application, whereas variability ensures that for each class there are examples captured in variable conditions with various sound-producing instances. A sufficient quantity of examples fulfilling the coverage and variability criteria enables the machine learning techniques to learn robust acoustic models that generalize, i.e., perform well on sound examples that were not encountered during learning [Goodfellow2016, p. 107]. More specifically, audio material for the development of an acoustic scene classification system should contain recordings from many locations belonging to the same scene classes, whereas material for the development of a sound event detection system should contain multiple sound instances from the same sound event class, recorded in variable conditions.

Variability

The variable conditions are characterized by the properties of the acoustic environment (e.g. size and shape of the acoustic space, type of reflective surfaces), the capturing microphone and device, the relative placement of the sound source and the microphone, and the interfering noise sources present. In realistic usage scenarios, not all condition variations can be taken into account explicitly in the data collection. If the collected material represents only a subset of the possible conditions, this can cause a mismatch between the material used to develop the system and the material encountered in the real usage stage, which eventually leads to poor performance. Hence in the data collection it is advisable to make an extra effort to minimize this data mismatch by capturing as representative a set of data as possible, under all identified conditions. When material captured under variable conditions is used in the learning stage, the system is said to use a multi-condition training approach [Li2016, p. 116]. Collected audio data can be diversified for multi-condition training by artificially applying different impulse responses to it in the training stage [Zhao2014], since many of the variable conditions are reflected in the impulse response, which characterizes the overall acoustic characteristics of the captured audio signal [Li2016, p. 206]. This approach requires obtaining recordings of the target source with as few external effects as possible and then convolving the audio signals with measured impulse responses from various real acoustic environments. Room impulse responses can also be generated with room simulation techniques [Zolzer2008, p. 191].
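The impulse response based diversification described above amounts to a convolution, which can be sketched as follows. This is a minimal illustration that assumes NumPy is available; the function name `apply_impulse_response`, the peak normalization, and the toy impulse response values are assumptions made for the example, and a real setup would load measured or simulated room impulse responses instead.

```python
import numpy as np


def apply_impulse_response(dry, impulse_response):
    """Convolve a dry recording with an impulse response, keeping the input length."""
    wet = np.convolve(dry, impulse_response, mode="full")[: len(dry)]
    peak = np.max(np.abs(wet))
    # Peak-normalize so that different impulse responses give comparable levels.
    return wet / peak if peak > 0 else wet


rng = np.random.default_rng(0)
dry = rng.standard_normal(1000)  # stand-in for a clean target recording

# Toy impulse response: direct path plus two decaying reflections (made-up values).
impulse_response = np.zeros(200)
impulse_response[[0, 50, 120]] = [1.0, 0.5, 0.25]

wet = apply_impulse_response(dry, impulse_response)
```

Repeating this with several impulse responses yields multiple acoustic variants of the same source recording for multi-condition training.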

Interfering Noise Sources

Interfering noise sources can be handled similarly to acoustic conditions. In usage cases where potential noise sources are known and stationary, the data can be easily collected under similar conditions. However, in cases where the types of noise sources vary or the position of the noise source relative to the capturing microphone varies, data collection under all matched conditions is impractical. Depending on the level of variability, one can still be successful by collecting material as diversely as possible and using a multi-condition training approach. Another feasible approach is to obtain recordings of target sound sources without any interfering noise and recordings of the noise sources alone, and to simulate noisy signals by artificially mixing these at various signal-to-noise ratios (SNR) [Mesaros2019a]. The artificial mixing approach can potentially produce larger quantities of relevant training material than a direct recording approach. On the other hand, the diversity of the available recordings influences and limits the variability of the artificially produced material.
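Mixing at a chosen SNR can be sketched as below. The sketch assumes NumPy, equal-length mono signals, and an SNR defined as the ratio of average signal powers in decibels; the function name `mix_at_snr` and the random test signals are hypothetical and only serve the illustration.

```python
import numpy as np


def mix_at_snr(target, noise, snr_db):
    """Scale the noise so that the target-to-noise power ratio equals snr_db, then add."""
    target = np.asarray(target, dtype=float)
    noise = np.asarray(noise, dtype=float)
    target_power = np.mean(target ** 2)
    noise_power = np.mean(noise ** 2)
    # Solve target_power / (gain**2 * noise_power) = 10 ** (snr_db / 10) for gain.
    gain = np.sqrt(target_power / (noise_power * 10.0 ** (snr_db / 10.0)))
    return target + gain * noise, gain


rng = np.random.default_rng(0)
target = rng.standard_normal(16000)       # stand-in for a clean target sound
noise = 0.3 * rng.standard_normal(16000)  # stand-in for a noise-only recording

mixture, gain = mix_at_snr(target, noise, snr_db=6.0)
```

Calling the function with a range of `snr_db` values produces a set of simulated noisy signals from a single pair of clean and noise-only recordings.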

Annotations

Supervised machine learning approaches require labeled sound examples, i.e., audio data with reference annotations. In the annotation procedure, portions of the acoustic signal containing target sound categories are indicated and stored in some machine-readable format. Manual annotation is done through audition, having persons carefully listening to the audio and indicating the activity of each class; because of this, the manual annotation process is one of the most time-consuming stages of the system development. Before the data collection process, the sound categories are selected based on the target application, and the textual labels assigned to these categories are defined to guide the selection of recording locations and situations. Selected textual labels should be representative and non-ambiguous, i.e., a label should allow understanding the sound properties based on the label alone in an explicit way [Mesaros2018, p. 152].

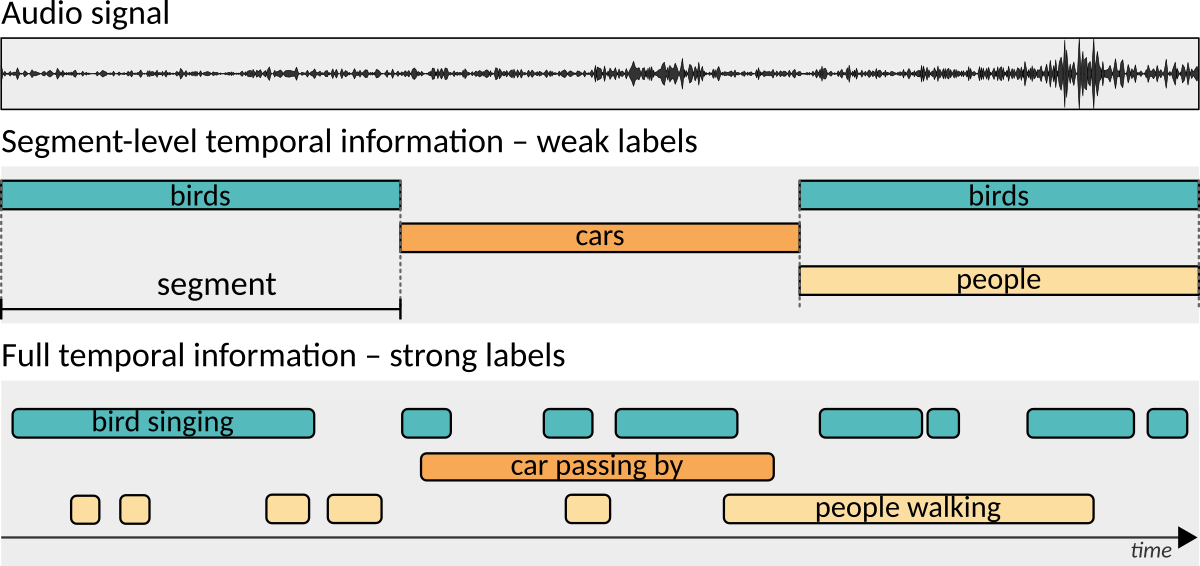

Audio content can be annotated at a fixed temporal grid, by annotating sound activity inside equal-sized and non-overlapping segments [Foster2015], or with detailed temporal information, by annotating the exact start and end times for the sound activity [Mesaros2010, Heittola2013a, Mesaros2016]. The annotations are strong when they have start and end times for the sound activity and weak when the temporal information is approximated at a coarse level (up to signal length). The most complex form of annotation for environmental audio is polyphonic sound event annotation, where multiple, overlapping sound events are annotated with strong labels [Mesaros2016]. The different annotation types are illustrated in Figure 3.3.

Figure 3.3 Annotation with segment-level temporal information and with detailed temporal information.
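The relation between the annotation types can be illustrated by deriving segment-level and weak annotations from strong ones. The following is a minimal sketch in which the tuple layout `(label, onset, offset)`, the function name `events_to_segments`, and the one-second segment length are assumptions chosen for the example.

```python
import math


def events_to_segments(events, class_labels, duration, segment_length=1.0):
    """Return activity[segment][class], set to 1 if the class is active in the segment."""
    n_segments = math.ceil(duration / segment_length)
    activity = [[0] * len(class_labels) for _ in range(n_segments)]
    for label, onset, offset in events:
        column = class_labels.index(label)
        first = int(math.floor(onset / segment_length))
        last = min(n_segments, int(math.ceil(offset / segment_length)))
        for segment in range(first, last):
            activity[segment][column] = 1
    return activity


# Strong annotations: (label, onset in seconds, offset in seconds), overlapping in time.
events = [("car", 0.5, 2.2), ("dog", 1.8, 2.0)]
class_labels = ["car", "dog"]

grid = events_to_segments(events, class_labels, duration=4.0)

# Weak annotation: a class is present if it is active in any segment.
weak = [max(column) for column in zip(*grid)]
```

Here `grid` marks the car as active in the first three one-second segments and the dog in the second segment only, while `weak` retains just the presence of both classes in the recording.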

Depending on the content analysis application type, reference annotations have different requirements as listed in Table 3.1.

| Analysis type | Annotation unit: size | Annotation unit: overlap | Temporal information: type | Temporal information: typical resolution |
|---|---|---|---|---|
| Classification (ASC) | fixed | no | weak | ≥ 1 s |
| Tagging (AT) | fixed | yes | weak | ≥ 1 s |
| Detection (SED) | varying | yes | strong | ≤ 1 s |

Acoustic Scenes

Audio material for acoustic scene classification is often captured in a fixed position to ensure that the scene category stays the same throughout the recording [Mesaros2016, Mesaros2018b]. This simplifies the annotation process, as the category labels can be assigned to full signals or to very long time segments within them. Category labels should be clearly defined to minimize the subjectivity of the label selection in the annotation process. Examples of scene labels are busy street, office, and traveling by bus.

Sound events

Labeling sound events is a highly subjective process where the perception and personal life experience of the annotator have an important role [Gygi2007]. Subjectivity can be controlled to some extent by defining the textual labels for the sound categories before the annotation process and forcing the label selection among these pre-defined labels. This is advisable in applications where the number of target sound categories is low and they are well-defined. In research where the aim is to recognize all sounds in the acoustic scene, or the target application is not defined before the data collection, the labels cannot be defined before actually annotating the audio material. In these cases, the most advisable approach is to allow free label selection during the annotation, i.e., each sound instance is annotated with a descriptive and possibly new label, based on the annotator's opinion; labels describing the same sound category can then be manually grouped after all material is annotated [Mesaros2010, Heittola2013a, Mesaros2016, Fonseca2018]. The label post-processing stage is essential to make the material usable for supervised machine learning, as freely selected labels often contain typos, different wording (people talking versus people speaking), or synonyms (car versus automobile) for sounds clearly belonging to the same category.
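The label post-processing step can be sketched as a lookup from free-form labels to the manually chosen category labels. The mapping below is a hypothetical, hand-made example built from the label pairs mentioned above; in practice the grouping is decided by a person after reviewing all annotated labels.

```python
# Hypothetical manual grouping: free-form label -> category label.
LABEL_MAP = {
    "people speaking": "people talking",
    "automobile": "car",
}


def normalize_label(raw_label, mapping):
    """Lower-case the label, collapse whitespace, and apply the manual grouping."""
    cleaned = " ".join(raw_label.lower().split())
    return mapping.get(cleaned, cleaned)


raw_labels = ["People  speaking", "automobile", "car", "people talking "]
normalized = [normalize_label(label, LABEL_MAP) for label in raw_labels]
```

Typos are not handled by this sketch; they would need either additional entries in the mapping or a manual review pass.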

Temporal Segmentation Process

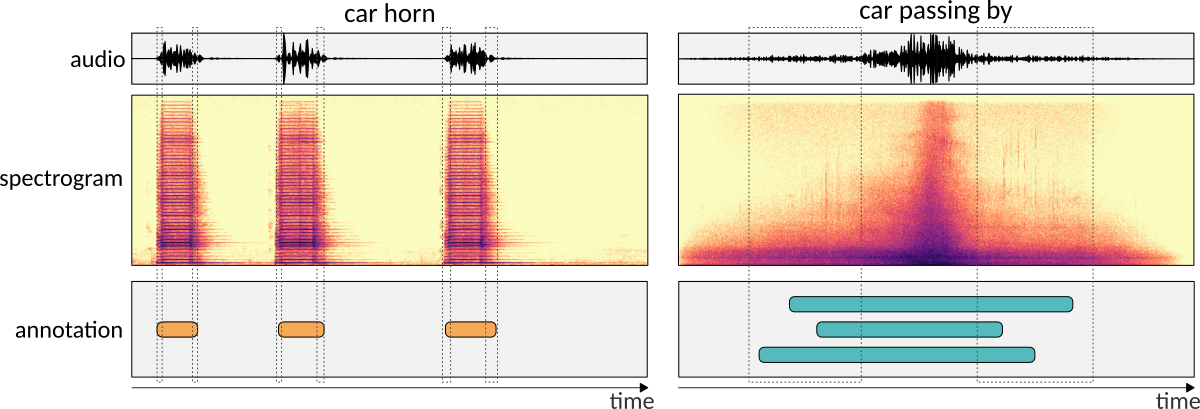

Annotating sound events with full temporal information requires marking the time instance when the sound event is first perceived, and the time instance when the sound event is no longer perceived. This temporal segmentation process introduces a varying level of subjectivity to the annotations, depending on the sound event type [Mesaros2018, p. 157]. For sound events which have a rapidly increasing and decreasing amplitude envelope, such as a car horn, onsets and offsets can be pinpointed reliably with acceptable time resolution (e.g. 100 ms). On the other hand, for sound events which have a slowly increasing and decreasing amplitude envelope, onsets and offsets can sometimes be very difficult to pinpoint reliably, especially when interfering background noise is present in the acoustic signal. Two such examples are illustrated in Figure 3.4.

Figure 3.4 Annotating onset and offset of different sounds: boundaries of the sound event are not always obvious.

Datasets

Once the audio material is packed together with annotations, it forms an audio dataset usable for the content analysis development. It is useful if the dataset has additional metadata describing the recording equipment (e.g. microphone model, capturing device), recording time and location (e.g. address, GPS coordinates), and properties of the acoustic environment during recording (e.g. weather conditions, room size when indoor). This metadata has an important role in the creation of the cross-validation setup for the development when one creates balanced training, testing, and validation sets with respect to various properties of the data. For example, one should take extra care not to include recordings from the same exact location into training and testing sets, as this will potentially lead to over-optimistic performance estimates. Another example of the usage of the metadata is the creation of a cross-validation setup such that all sets contain a representative selection of recordings from different weather conditions.
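A location-aware split of the kind described above can be sketched as follows. The sketch assumes that each recording carries a location identifier in its metadata; the function name `split_by_location`, the file names, and the test fraction are hypothetical choices for the example.

```python
import random
from collections import defaultdict


def split_by_location(recordings, test_fraction=0.3, seed=0):
    """Split (filename, location) pairs so that no location appears in both sets."""
    by_location = defaultdict(list)
    for filename, location in recordings:
        by_location[location].append(filename)

    locations = sorted(by_location)
    random.Random(seed).shuffle(locations)
    n_test = max(1, round(len(locations) * test_fraction))
    test_locations = set(locations[:n_test])

    train = [f for loc in locations if loc not in test_locations for f in by_location[loc]]
    test = [f for loc in locations if loc in test_locations for f in by_location[loc]]
    return train, test


# Hypothetical dataset: two recordings from each of five locations.
recordings = [(f"loc{i}_rec{j}.wav", f"loc{i}") for i in range(5) for j in range(2)]
train_files, test_files = split_by_location(recordings)
```

Balancing the sets with respect to further properties, such as weather conditions, would require stratifying the location list by those properties before the split.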

For published datasets, it is good to follow a consistent file naming convention for a clear correspondence between audio recordings, related annotations, and metadata, to use easily accessible machine-readable file formats, and to include a cross-validation setup. A cross-validation setup supports a direct comparison of studies using the dataset, which is important for its usability and for reproducible science. More information about reference datasets for sound event detection can be found in Section 4.2.

Audio Processing

In the audio processing stage of the content analysis system (see Figure 3.2), the audio signal is prepared and processed for the subsequent machine learning stage. The audio processing stage consists of pre-processing and acoustic feature extraction. In pre-processing, the audio signal is processed to reduce the effects of interfering noises or to emphasize the target sounds. In acoustic feature extraction, the audio signal is transformed into a compact representation suitable for machine learning algorithms.

Pre-processing

The aim of pre-processing is to enhance the characteristics of the audio signal that are essential for robust content analysis. The requirements for this processing block depend on the characteristics of the acoustic environment and the target sound categories, as well as the type of acoustic feature extraction and machine learning methods used. Pre-processing is generally applied to the audio signal before acoustic feature extraction, and prior knowledge about the usage environment and the distinctive characteristics of the target sound categories is utilized when designing or selecting the pre-processing algorithm. For example, if stationary noise is present in the operation environment, noise suppression techniques can be used to reduce the interference of noise to the analysis [Schroder2013].

Everyday environments usually have multiple overlapping sound events active at the same time. The recognition of overlapping sounds can be addressed at different stages of the analysis system: at the signal pre-processing stage by using sound source separation [Heittola2013b], at the acoustic modeling level by modeling all sound combinations [Butko2011a, Temko2009], or at the detection level by using multiple iterative detection passes [Heittola2013a]. Recently introduced approaches based on deep neural networks use large amounts of data to learn and recognize sounds at the acoustic model level, regardless of the interference introduced by the overlapping sounds [Cakir2017].

Sound Source Separation

Audio captured in our everyday environments consists of sounds produced by various sound sources having distinctive structure in time and frequency. Sound sources can be considered to correspond to sound events in the acoustic scene, sometimes multiple sound sources belonging to the same event. The aim of sound source separation is ideally to decompose a given audio signal, mixture signal, with multiple simultaneous active sound sources into individual sound sources.

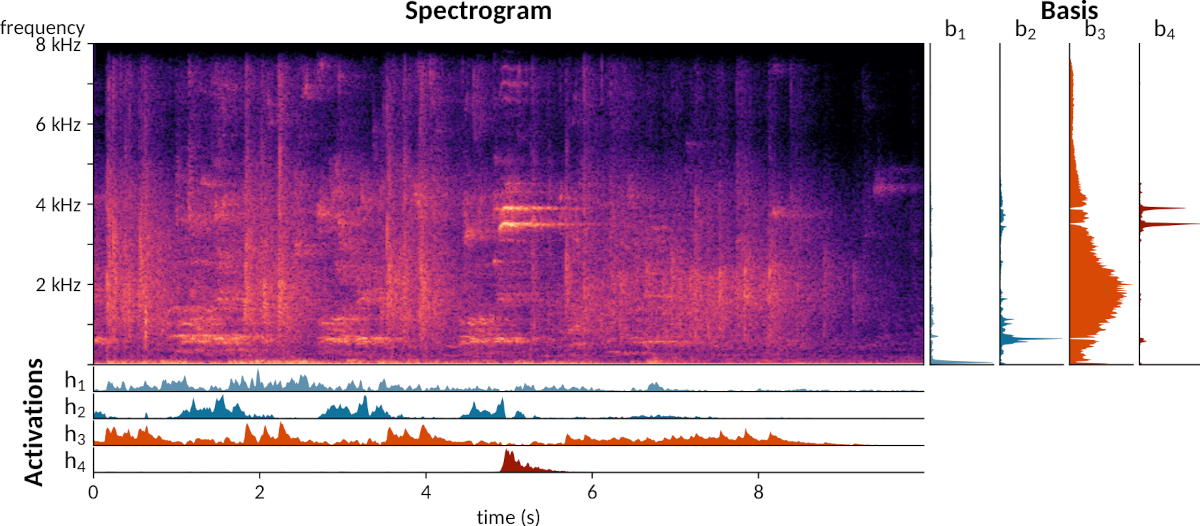

Non-Negative Matrix Factorization

One commonly used method for sound source separation is based on non-negative matrix factorization (NMF) [Virtanen2007]. NMF models the structure of the sound by representing the spectra of the mixture signal as a sum of components, each having a fixed magnitude spectrum and a time-varying gain. The assumption in NMF-based source separation is that each sound source has a characteristic spectral structure that differs from other sources present in the mixture signal, and ideally each source can be modeled using a distinct set of fixed magnitude spectra. In the signal model, the magnitude spectrum vector \(\boldsymbol{x}_{t}\) in frame \(t\) is defined as a linear combination of basis spectra \(\boldsymbol{b}_{k}\) (fixed spectrum) and corresponding \(h_{k,t}\) activation coefficients (gain). This can be expressed as:

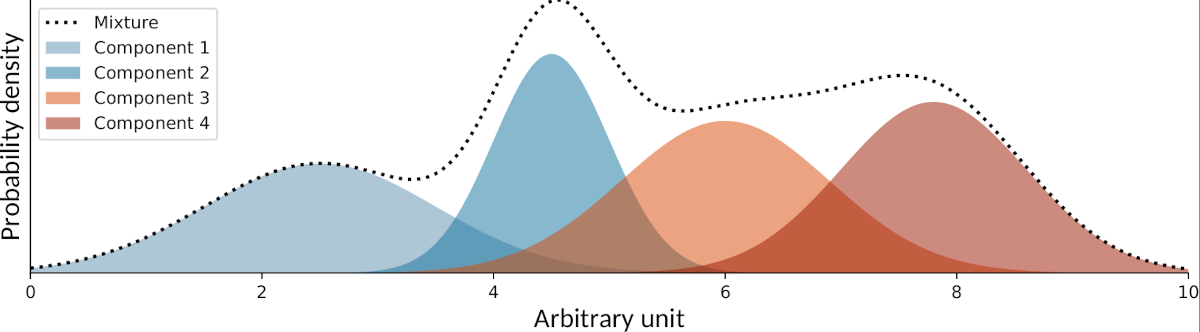

$$ \boldsymbol{x}_{t}\approx\sum_{k=1}^{K}\boldsymbol{b}_{k}h_{k,t} \tag{3.1} $$

where \(K\) is the number of components, and \(h_{k,t}\) is the activation coefficient of the \(k\)th basis spectrum in frame \(t\). One basis spectrum and its activation coefficients are referred to as a component. Often NMF is used in an unsupervised manner without any prior knowledge of which components represent the given sound source. Ideally, sound sources in the mixture signal become represented as a sum of one or more components; however, it is possible that the resulting components contain parts from multiple sound sources. This is considered learning-free sound separation, and it gives good separation performance in cases where the characteristics of the sound sources are distinctive. Figure 3.5 shows the spectrogram of an audio signal captured during a basketball game and the results of factorization into four components. In this example, the first component captures the squeaking sounds of basketball players’ shoes and residual audience sounds, the second component captures shouting from the audience, the third component captures applause, and the fourth component captures the whistle sound of the basketball referee.

Figure 3.5 Sound source separation with NMF into four components applied to a recording captured in a basketball game.
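The factorization in Equation 3.1 can be sketched with a few lines of NumPy. Note the assumptions: this sketch uses the standard multiplicative update rules for the Euclidean distance, which is a simplification and not necessarily the cost function used in [Virtanen2007], and the function name `nmf`, the iteration count, and the synthetic input matrix are chosen only for illustration.

```python
import numpy as np


def nmf(X, n_components, n_iter=200, seed=0, eps=1e-12):
    """Factorize a non-negative matrix X (frequency x frames) as B @ H.

    Columns of B are basis spectra b_k, rows of H are activations h_{k,t}.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_frames = X.shape
    B = rng.random((n_freq, n_components)) + eps
    H = rng.random((n_components, n_frames)) + eps
    for _ in range(n_iter):
        # Multiplicative updates keep B and H non-negative.
        H *= (B.T @ X) / (B.T @ B @ H + eps)
        B *= (X @ H.T) / (B @ H @ H.T + eps)
    return B, H


# Synthetic magnitude spectrogram built from two known components.
rng = np.random.default_rng(1)
X = rng.random((6, 2)) @ rng.random((2, 20))

B, H = nmf(X, n_components=2)
relative_error = np.linalg.norm(X - B @ H) / np.linalg.norm(X)
```

For real audio, `X` would be the magnitude spectrogram of the mixture signal and the number of components would be chosen according to the complexity of the scene.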

The component-wise audio streams can be reconstructed by generating a time-frequency mask from the basis spectra and activation coefficients, and filtering the mixture signal with it. The time-frequency soft mask \(\boldsymbol{w}_{j,t}\) for component \(j\) in frame \(t\) is defined as

$$ \boldsymbol{w}_{j,t}=\frac{\boldsymbol{b}_{j}h_{j,t}}{\sum_{k=1}^{K}\boldsymbol{b}_{k}h_{k,t}}, \tag{3.2} $$

where the division is performed element-wise over frequency. The mask can be considered as a time-varying Wiener filter which separates the signal into a stream containing approximately homogeneous spectral content that differs significantly from the other streams. The output streams do not represent individual sound sources but rather combinations of the sources present in the original mixture signal.
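The soft mask of Equation 3.2 can be computed for all components at once, as in the following sketch. It assumes NumPy and a factorization given as a basis matrix `B` (frequency x components) and an activation matrix `H` (components x frames); the function name `soft_masks` and the random example matrices are illustrative assumptions.

```python
import numpy as np


def soft_masks(B, H, eps=1e-12):
    """Return masks of shape (components, frequency, frames) that sum to one over components."""
    # components[k, f, t] = B[f, k] * H[k, t], i.e. b_k h_{k,t} for every frame.
    components = B.T[:, :, None] * H[:, None, :]
    return components / (components.sum(axis=0, keepdims=True) + eps)


rng = np.random.default_rng(0)
B = rng.random((6, 3)) + 0.1   # stand-in basis spectra
H = rng.random((3, 20)) + 0.1  # stand-in activations
X = B @ H                      # mixture magnitude spectrogram

masks = soft_masks(B, H)
streams = masks * X            # component-wise magnitude spectrograms
```

To obtain audio, each masked magnitude spectrogram would be combined with the phase of the mixture and inverted back to the time domain, a step that is omitted here.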

Acoustic feature extraction

The main purpose of feature extraction is to transform the acoustic signal into a compact numerical representation of the content in a way that is relevant to machine learning and maximizes the recognition performance of the sound analysis. Important information for the content analysis of audio signals is mainly contained in the relative distribution of energy in frequency. For this reason, regularly used acoustic features in audio content analysis are based on the time-frequency representation of the signal. The discrete Fourier transform (DFT) is the most commonly used transformation for audio signals. It represents the signal using sinusoidal base functions, each being defined by magnitude and phase [Oppenheim1999]. Other transformations used for audio signals include constant-Q transform (CQT) [Brown1991] and discrete wavelet transform (DWT) [Tzanetakis2001].

Processing stages

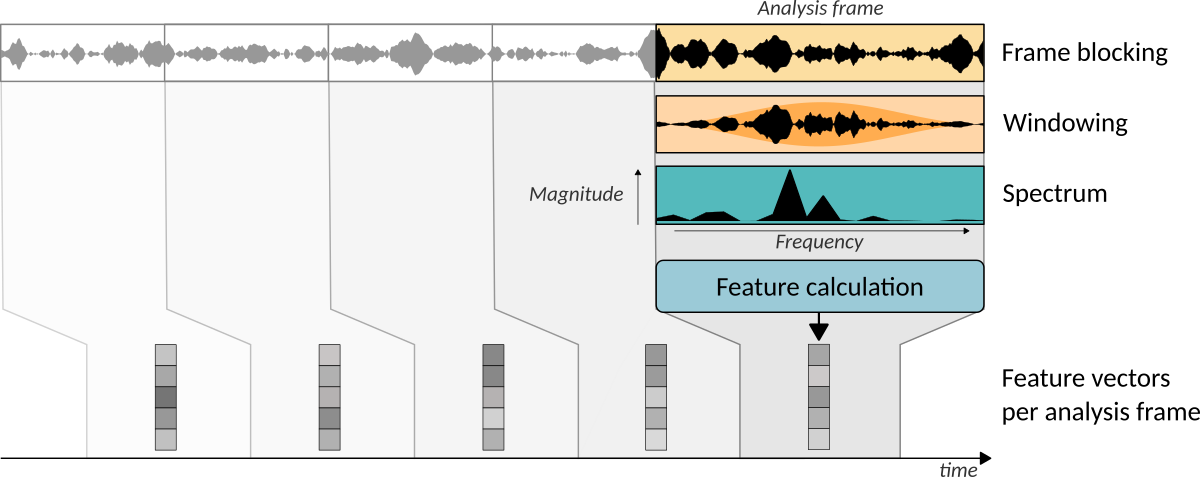

Audio signals can generally be assumed to be non-stationary because of their rapidly changing signal statistics (e.g. magnitudes of the frequency components). This requires acoustic feature extraction in short time segments, analysis frames, which contain signal in a quasi-stationary state. The basic stages in acoustic feature extraction are frame blocking, windowing, spectrum calculation, and subsequent analysis, as illustrated in Figure 3.6.

Figure 3.6 The processing pipeline of acoustic feature extraction.

In the frame blocking stage the audio signal is split into fixed-length analysis frames, which are shifted with a fixed time step (feature hop length). When using the Fourier transform, the length of the analysis frame is related to frequency resolution: longer frames give better frequency resolution than shorter frames, but at the same time the temporal resolution of the analysis is lower with longer frames. Usually for environmental audio analysis, the frame length is set between 20 and 100 ms with 25–50% overlap between frames. Sound event detection systems use shorter analysis frames (e.g. 20 ms) than acoustic scene classification systems, as the spectral characteristics of sound events change more rapidly than the general characteristics of acoustic scenes, and good time resolution is required for detecting event onsets and offsets accurately. In order to avoid abrupt changes at the frame boundaries causing distortions in the spectrum, the analysis frames are smoothed with a windowing function such as the Hamming or Hann function. The windowed analysis frames are transformed into a spectrum, forming a time-frequency representation, and acoustic features are extracted from it.
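The frame blocking, windowing, and spectrum calculation stages can be sketched as follows. The sketch assumes NumPy, a mono signal at least one frame long, and a Hann window; the function name `magnitude_spectrogram`, the 40 ms frame length, and the 50% overlap are example values within the ranges mentioned above, not settings prescribed by the text.

```python
import numpy as np


def magnitude_spectrogram(signal, sample_rate, frame_ms=40.0, overlap=0.5):
    """Return the magnitude spectrogram with shape (frequency bins, frames)."""
    frame_length = int(round(sample_rate * frame_ms / 1000.0))
    hop_length = int(round(frame_length * (1.0 - overlap)))
    n_frames = 1 + (len(signal) - frame_length) // hop_length

    # Frame blocking: fixed-length frames shifted by a fixed hop.
    frames = np.stack([
        signal[i * hop_length : i * hop_length + frame_length]
        for i in range(n_frames)
    ])
    # Windowing smooths the frame boundaries before the transform.
    window = np.hanning(frame_length)
    # Spectrum calculation: DFT of each windowed frame, magnitudes only.
    return np.abs(np.fft.rfft(frames * window, axis=1)).T


sample_rate = 8000
t = np.arange(sample_rate) / sample_rate
signal = np.sin(2 * np.pi * 1000.0 * t)  # one second of a 1 kHz sinusoid

S = magnitude_spectrogram(signal, sample_rate)
```

With these settings each frame holds 320 samples, so the frequency resolution is 25 Hz per bin and the 1 kHz sinusoid peaks at bin 40 in every frame. Halving the frame length would double the time resolution at the cost of 50 Hz bins.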

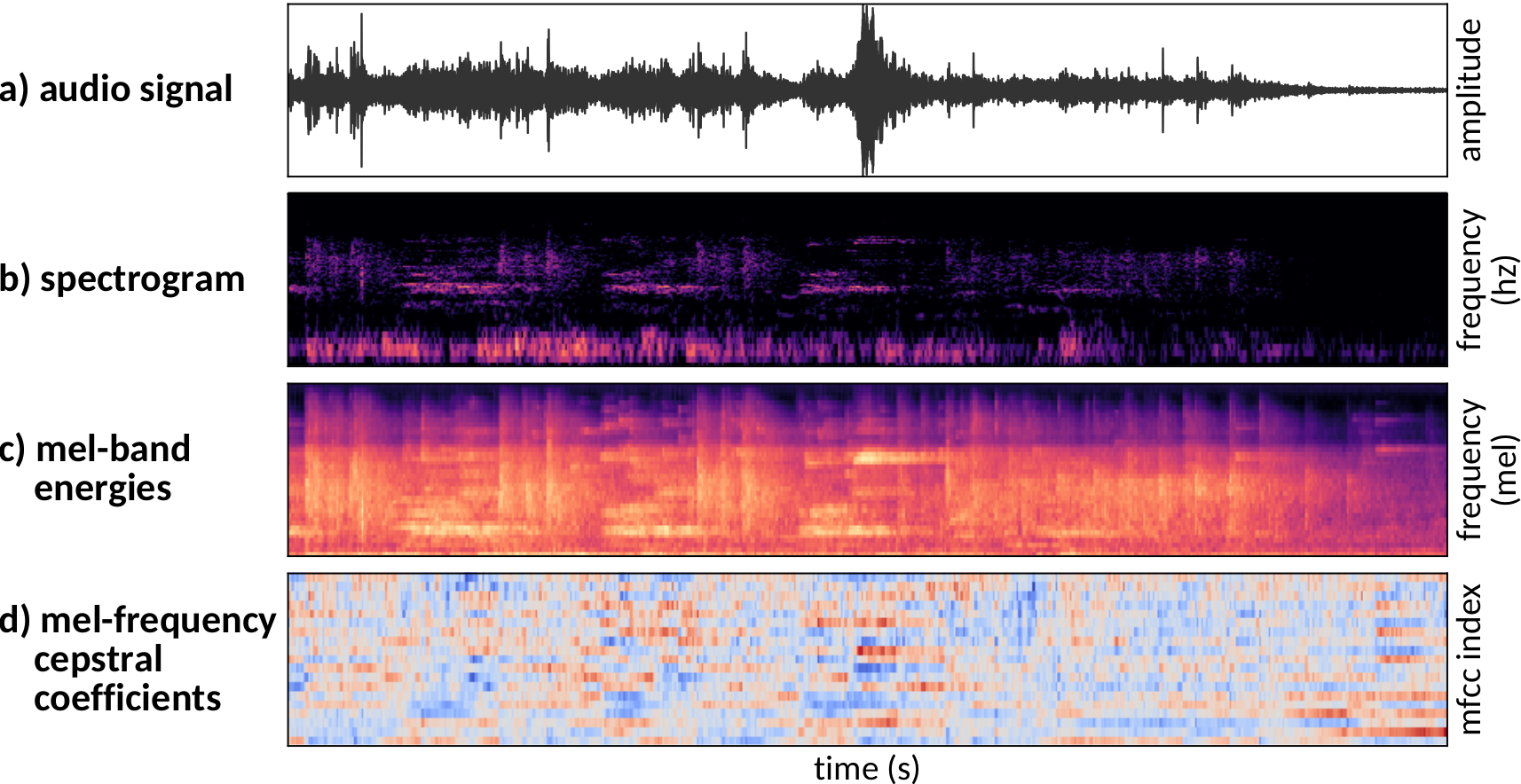

Until recently, the most common approach to developing acoustic features has been to carefully engineer them from the time-frequency representation, using expert knowledge about acoustics, sound perception, sound classes, and their differences. These types of features are often called hand-crafted features. Recently, automatic feature learning techniques have also been used with increased dataset sizes [Salamon2015, Cakir2018]. These techniques produce high-level feature representations given the data and specified task, and have shown impressive performance compared to hand-crafted features. The main advantage of feature learning over feature engineering is that no specific knowledge about the target task is required. This thesis only discusses hand-crafted acoustic features, specifically the most common hand-crafted features extracted from the spectrum: mel-band energies and decorrelated mel-band energies called mel-frequency cepstral coefficients (MFCCs) [Davis1980]. These features are illustrated in Figure 3.7 for an audio example.

Figure 3.7 Acoustic feature representations.

Mel-band energies

Mel-band energies are a perceptually motivated representation based on mel-scaled frequency bands. The aim of the mel scale is to mimic the non-linearity of human auditory perception by having narrower bands at lower frequencies than at higher frequencies. The scale was created through listening experiments in which listeners heard two alternating sinusoids and adjusted one of them until its perceived pitch was half that of the other [Stevens1937]. The resulting mel scale is approximately linear up to 1 kHz, after which it is approximately logarithmic. The relation between mel and linear frequency can be approximated as [OShaughnessy2000, p. 128]

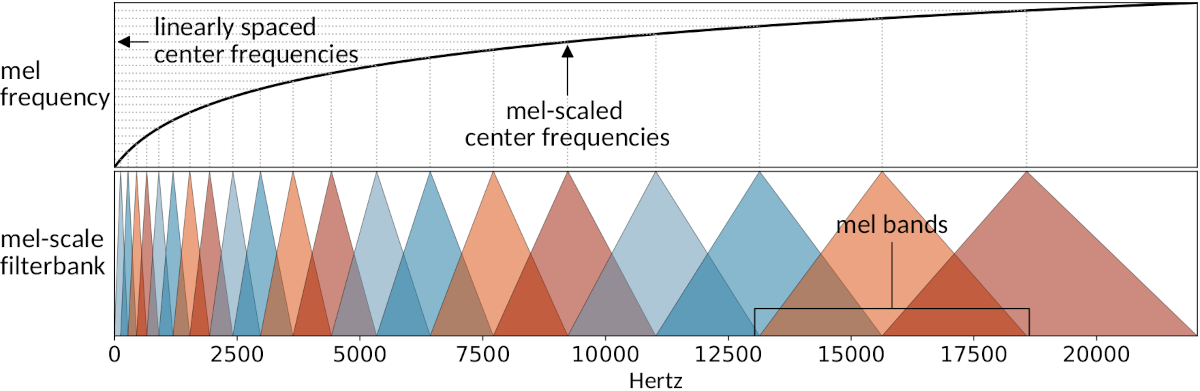

$$ F_{mel}=2595\log_{10}\left(1+\frac{F_{Hz}}{700}\right). \tag{3.3} $$

A mel-scale filterbank consists of overlapping band-pass filters (typically 20, 40, 64, or 128 filters in total) having triangular frequency response and with filters’ center frequencies linearly spaced on the mel scale. Figure 3.8 illustrates the process of constructing such a filterbank; the relation between center frequencies of the filters in hertz and mels is shown in the top panel, and the triangular filters are shown in the bottom panel. Many alternative filterbank implementations have been proposed in the literature throughout the years, mostly varying in how the nonlinear pitch perception of humans is approximated in the filterbank design [Davis1980, Slaney1998, Young2006].

Figure 3.8 Mel-scaling (top panel) and mel-scale filterbank with 20 triangular filters (bottom panel).
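As a rough sketch of the construction described above, the following code implements Eq. 3.3, its inverse, and a bank of triangular filters whose edges are linearly spaced in mels. The function names, the unit peak height of each triangle, and the example parameters (20 filters, a 1024-point DFT, 16 kHz sampling rate) are assumptions of this illustration. As noted above, published filterbank designs differ in such details.

```python
import math


def hz_to_mel(f_hz):
    """Eq. 3.3: convert frequency in hertz to mels."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)


def mel_to_hz(f_mel):
    """Inverse of Eq. 3.3."""
    return 700.0 * (10.0 ** (f_mel / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, sample_rate):
    """Triangular filters with center frequencies linearly spaced in mels.

    Returns n_filters rows, each with n_fft // 2 + 1 weights (one per DFT bin).
    """
    n_bins = n_fft // 2 + 1
    mel_max = hz_to_mel(sample_rate / 2.0)
    # n_filters + 2 points: lower edge, the filter centers, upper edge.
    edges_hz = [mel_to_hz(mel_max * i / (n_filters + 1))
                for i in range(n_filters + 2)]
    bin_hz = [k * sample_rate / n_fft for k in range(n_bins)]
    bank = []
    for m in range(1, n_filters + 1):
        lower, center, upper = edges_hz[m - 1], edges_hz[m], edges_hz[m + 1]
        row = []
        for f in bin_hz:
            rising = (f - lower) / (center - lower)
            falling = (upper - f) / (upper - center)
            # The triangle is the smaller of the two slopes, clipped at zero.
            row.append(max(0.0, min(rising, falling)))
        bank.append(row)
    return bank


bank = mel_filterbank(n_filters=20, n_fft=1024, sample_rate=16000)
```

Because the edges are equally spaced in mels, the filters become progressively wider in hertz towards high frequencies, which matches the bottom panel of Figure 3.8.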

The mel-scale filterbank is applied on the spectrum (either magnitude or power spectrum) to obtain energy per mel-band. The resulting representation is called a mel spectrogram. Following humans’ logarithmic perception of sound loudness, the dynamic range of the energy values per band is compressed by taking the logarithm of these values. The resulting features are called log mel energies in the literature. This acoustic representation retains the coarse shape of the spectrum, while the fine structure related to the harmonic structure of the signal is smoothed out. This is beneficial because the identity of everyday sounds is not determined based on the exact perceived pitch, and some of the sources do not even have any harmonic structure. The process is shown in Figure 3.7; panel b shows the spectrogram of the signal, and panel c shows corresponding mel-band energies.
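Applying the filterbank and compressing the dynamic range is a weighted sum followed by a logarithm. The sketch below uses a hypothetical two-band filterbank over five spectral bins. The small floor `eps` is an assumption added here to avoid taking the logarithm of zero and is not part of the description above.

```python
import math


def log_mel_energies(power_spectrum, filterbank, eps=1e-10):
    """Apply a filterbank to one spectrum frame and compress with a logarithm.

    eps is a small floor (an assumption of this sketch) that avoids log(0).
    """
    energies = []
    for weights in filterbank:
        band_energy = sum(w * p for w, p in zip(weights, power_spectrum))
        energies.append(math.log(band_energy + eps))
    return energies


# Hypothetical toy data: 5 spectral bins and 2 overlapping triangular bands.
toy_filterbank = [
    [0.0, 1.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, 0.5, 1.0, 0.0],
]
toy_power_spectrum = [0.0, 4.0, 2.0, 1.0, 0.0]
features = log_mel_energies(toy_power_spectrum, toy_filterbank)
```

Here the band energies are 5 and 2 before the logarithm. The middle bin contributes to both bands because neighboring filters overlap.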

The mel-scale filterbank was originally designed for speech analysis, to be used as a processing block for MFCC features. Later, as MFCCs were shown to perform robustly in more general sound classification tasks such as speaker recognition [Reynolds1995], music genre recognition [Tzanetakis2002], and musical instrument classification [Heittola2009], they also gained popularity as a standard feature for acoustic scene classification and sound event detection tasks. MFCCs are calculated by decorrelating the outputs of the mel-scale filterbank with a linear transform, the discrete cosine transform (DCT). The decorrelation allows efficient data modeling in Gaussian mixture models and hidden Markov models by enabling the usage of a diagonal covariance matrix in Gaussian distributions. Along with the emergence of deep learning based approaches, the decorrelation step has been dropped because modern deep learning techniques can efficiently take advantage of correlated information in the data during the learning process. Currently, log mel energies are the most commonly used acoustic features in deep learning based approaches for the content analysis of environmental audio [Cakir2017, Mesaros2018b, Mesaros2019a].

Mel-frequency cepstral coefficients

Mel-frequency cepstral coefficients (MFCCs) represent the output of the mel-scale filterbank in the cepstral domain. MFCCs are obtained from the previously described log mel energies by computing the type-II discrete cosine transform (DCT). An example of MFCCs is shown in Figure 3.7, panel c.

The role of this added processing step is two-fold. Firstly, the DCT is used to decorrelate feature values, since the filterbank outputs are heavily correlated due to neighboring filters overlapping in frequency. Decorrelated values enable usage of a diagonal covariance matrix in Gaussian distribution based acoustic models. Secondly, by keeping only the first few values of the DCT (coefficients), the spectral representation is smoothed and the dimension of the feature vector is reduced. The first coefficients contain information about the overall shape of the spectrum, while higher coefficients contain information about the fine structure of the spectrum. The number of coefficients retained for analysis typically varies between 12 and 20 depending on the target application requirements: in speech recognition, 8-12 coefficients are sufficient to represent the coarse shape of the spectrum; in musical instrument recognition, a higher number of coefficients (e.g. 20) is usually used to capture fine details of the spectrum [Heittola2009]; in environmental audio, usually 16-20 coefficients are used. The first MFCC (zeroth coefficient) is related to signal energy (log energy), and depending on the application this information is either retained or omitted from the feature vector. Signal energy is closely related to acoustic scene class, e.g. a park is quieter than a street with cars, and because of this, the signal energy information is retained in acoustic scene classification applications. Sound events can be regarded as having the same source regardless of loudness, and thus the zeroth coefficient is often omitted from the feature vector in sound event detection applications.
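The DCT step can be illustrated with a direct implementation of the unnormalized type-II DCT. The function names and the `keep_zeroth` flag are hypothetical. Toolkits differ in how they scale the DCT output, so the absolute values here should not be compared with those of any particular library.

```python
import math


def dct_type2(values, n_coefficients):
    """Unnormalized type-II DCT, keeping the first n_coefficients outputs."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n))
                for i, v in enumerate(values))
            for k in range(n_coefficients)]


def mfcc_from_log_mel(log_mel, n_coefficients=20, keep_zeroth=True):
    """MFCCs from one frame of log mel energies.

    keep_zeroth controls whether the energy-related zeroth coefficient stays.
    """
    coefficients = dct_type2(log_mel, n_coefficients)
    return coefficients if keep_zeroth else coefficients[1:]


# A perfectly flat log mel spectrum has no spectral shape: only the zeroth
# coefficient (proportional to the overall level) is non-zero.
flat_log_mel = [1.0] * 40
mfcc = mfcc_from_log_mel(flat_log_mel, n_coefficients=20)
mfcc_without_energy = mfcc_from_log_mel(flat_log_mel, n_coefficients=20,
                                        keep_zeroth=False)
```

The flat input shows why the zeroth coefficient carries the level information: scaling the signal changes only that coefficient, which is the reason it can be dropped when loudness should not affect the decision.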

Dynamic features

Audio is a time-variant signal, and one of the main characteristics for sound identification is its dynamic change over time. However, many acoustic features, such as mel energies and MFCCs, estimate only the instantaneous spectral shape. The temporal evolution of the acoustic features can be dealt with at the feature level by adding dynamic features to the final feature vector, or by modeling the temporal aspect in the acoustic modeling stage (e.g. using recurrent layers in neural networks) [Cakir2017].

To incorporate the temporal evolution of the features into the acoustic feature vector, one can use estimates of the local time derivatives of the features by approximating with a first-order orthogonal polynomial fit [Furui1981] as

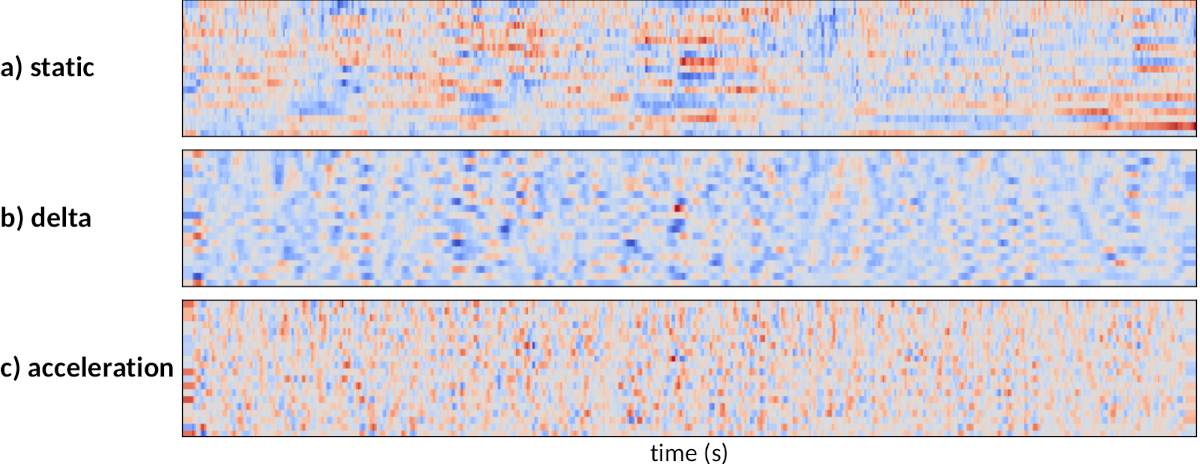

$$ \Delta c\left(i,u\right)=\frac{\sum_{k=-K}^{K}k\cdot c\left(i,u+k\right)}{\sum_{k=-K}^{K}k^{2}} \tag{3.4} $$

where \(c\left(i,u\right)\) denotes the \(i^{th}\) feature value in a time frame \(u\) [Rabiner1993, p. 116–117]. Computation is performed over \((2K+1)\) frames, and \(K\) is typically set to either three or four. The resulting dynamic features are commonly referred to as delta features, as opposed to the static features. In addition to the delta features, the second derivatives can be computed by applying the same polynomial fit to the already computed delta features, resulting in a parabolic fit. These features are referred to as delta-delta or acceleration features. Delta and acceleration features are used together with static features to integrate the dynamic aspect of the spectrum into the feature set. An example of the static and dynamic features is shown in Figure 3.9.

Figure 3.9 Static and dynamic feature representations.
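Eq. 3.4 translates almost directly into code. In the sketch below, frames that would fall outside the sequence are replaced by the nearest edge frame. This padding choice is an assumption of the example, since the equation itself does not define behavior at the boundaries.

```python
def delta_features(static, K=3):
    """Eq. 3.4 applied to a sequence of feature vectors (frames x features).

    Frames beyond the sequence edges are replaced by the nearest edge frame,
    which is an assumption of this sketch.
    """
    n_frames = len(static)
    n_features = len(static[0])
    denominator = sum(k * k for k in range(-K, K + 1))
    deltas = []
    for u in range(n_frames):
        row = []
        for i in range(n_features):
            numerator = 0.0
            for k in range(-K, K + 1):
                index = min(max(u + k, 0), n_frames - 1)  # edge padding
                numerator += k * static[index][i]
            row.append(numerator / denominator)
        deltas.append(row)
    return deltas


# A feature that grows by 2 per frame has a constant slope of 2.
ramp = [[2.0 * u] for u in range(20)]
delta = delta_features(ramp, K=3)
# Acceleration features: the same fit applied to the delta features.
delta_delta = delta_features(delta, K=3)
```

Away from the edges, the delta of the ramp equals its slope and the delta-delta is zero, as expected for a linear trajectory.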

Another method to incorporate temporal information into the feature vector is to concatenate consecutive frames within a window into a single vector, a supervector [Gencoglu2014, Cakir2015a, Mesaros2019a]. The idea is to provide contextual information by stacking together \(N_{win}\) frames before and after the current frame. The length of the newly constructed feature vector is defined as \((2\times N_{win} + 1) \times N_{feat}\), where \(N_{feat}\) denotes the length of the original feature vector. This method is often used together with fully connected feed-forward neural networks.
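A minimal sketch of this context windowing is given below. The function name is hypothetical, and repeating the first and last frame at the sequence boundaries is an assumption made so that every frame gets a supervector of the same length.

```python
def stack_context(features, n_win):
    """Concatenate n_win frames before and after each frame into a supervector."""
    n_frames = len(features)
    stacked = []
    for t in range(n_frames):
        supervector = []
        for offset in range(-n_win, n_win + 1):
            index = min(max(t + offset, 0), n_frames - 1)  # edge frames repeat
            supervector.extend(features[index])
        stacked.append(supervector)
    return stacked


# Toy input: 5 frames with N_feat = 2 values each.
features = [[t, 10 * t] for t in range(5)]
stacked = stack_context(features, n_win=2)
```

With \(N_{win}=2\) and \(N_{feat}=2\), each supervector has \((2\times 2+1)\times 2 = 10\) values.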

Other features

In addition to the previously discussed features, numerous other features derived from the time-frequency representation of a signal have been proposed for computational audio content analysis.

Low-level features describing specific aspects of the spectral shape of a signal are traditionally used as part of a larger feature set together with MFCCs as they are not powerful enough to be used alone. Common low-level features that describe spectral shape include signal energy, spectral envelope, spectral moments (e.g. spectral centroid and flatness), spectral slope, spectral roll-off, spectral flux, and spectral irregularity [Eronen2006, Geiger2013].

Spectral descriptors adapted from image processing have shown competitive performance compared with traditional features, especially in the case of acoustic scene classification. These descriptors treat the spectrogram as an image and use techniques adapted from computer vision to characterize the shape, texture, and evolution of the content in it. To detect different shapes in the spectrogram, one can use histogram of oriented gradients (HOG) features [Rakotomamonjy2015], and to characterize textures in the spectrogram, one can use local binary pattern (LBP) features [Kobayashi2014, Battaglino2015]. Subband power distribution (SPD) transforms the spectrogram into a representation characterizing the spectral power distribution over time at frequency subbands [Dennis2013].

Automatic representation and feature learning techniques have recently become increasingly popular for acoustic scene classification and sound event detection, facilitated by the availability of larger high-quality datasets. Sounds occurring in everyday environments have substantial diversity, and this results in a widely varying set of time-frequency structures; however, only a subset of the information in the spectrogram is relevant for actual classification. The aim of feature learning is to learn a representation that reflects this relevant information, and it is accomplished using different techniques, including deep learning [Hershey2017]. Features created through this process are commonly referred to as embeddings. The input for the feature learning network can be some established time-frequency representation or even the raw acoustic waveform [Kim2019].

Supervised Learning and Recognition

Machine learning techniques used in audio content analysis rely on data to learn the parameters of the acoustic models. In a supervised learning approach, manually labeled sound examples are used to teach a classifier the mapping between the extracted acoustic features and given sound categories. The learned acoustic model is then used to assign category labels to acoustic features of the test data. The difficulty of the classification task depends on the inter-class and intra-class variability of sound categories. When classifying or detecting everyday sounds, the learning algorithm has to cope with overlapping sounds and take into account the temporal structure of sounds. Depending on the target task, the classification can be formulated as a multi-class single-label or a multi-class multi-label problem. In the multi-class single-label problem, only one category out of many possible categories is active at a given time instance, whereas in the multi-class multi-label problem multiple categories can be active simultaneously at a given time instance.

Learning

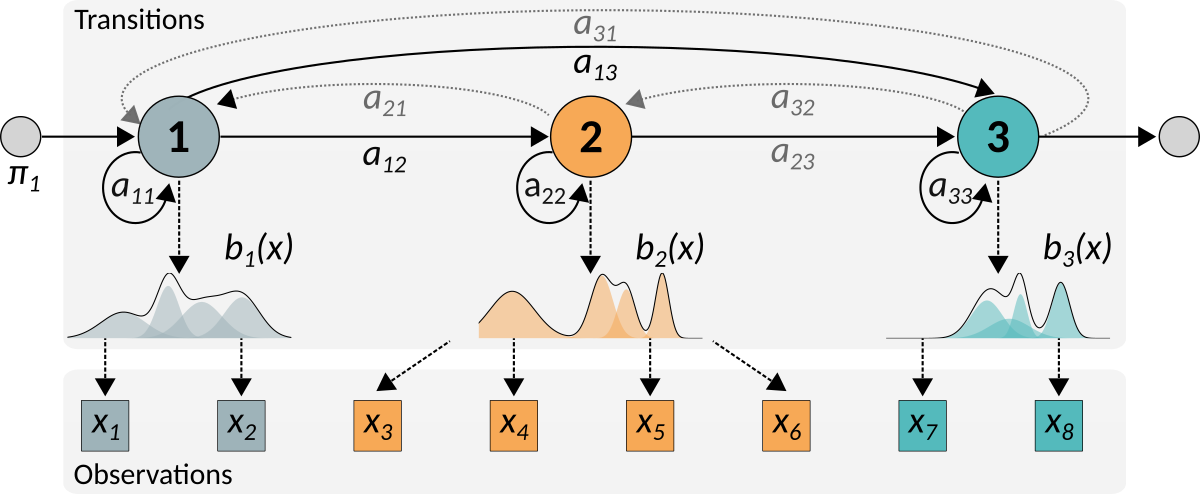

In the learning stage, the aim is to learn an optimal model to classify sound examples into one of the predefined sound categories in a given feature space. The learning examples are pairs of inputs to the system, acoustic features \(\mathbf{x}_t\) extracted in time instances \(t=1,2,\ldots,T\), and desired target outputs \(\mathbf{y}_t\) for those particular inputs. The target output contains the information about the sound class assigned to the input data, one of possible \(C\) classes, \(c_i, i=1,2,\ldots,C\). An overview of the learning process is illustrated in Figure 3.10.

Figure 3.10 Overview of the supervised learning process for audio content analysis. The system implements a multi-class multi-label classification approach for sound event detection task.

The acoustic model partitions the acoustic feature space with a decision rule into regions with an assigned class label, and one class label can have multiple disjoint regions associated with it. Recognition is done based on these regions: the observed acoustic features are classified based on which region they fall into. The boundary between regions is called the decision boundary, and generally most of the misclassifications happen near this boundary. Model learning is guided by the error, or loss, between the target outputs and the outputs estimated for the training examples, and the model parameters are updated through optimization techniques to decrease this error.

The basic assumption behind the learning process is that the test examples will be similar to the training examples, i.e., test examples come from the same distribution as training examples. The model should be able to robustly estimate the correct output in this situation based on the learned decision rules. In practice, it is very hard to collect a representative set of training examples to cover all potential variability of test data. The generalization to unseen examples is the main property of a good classifier, and failing in this is caused by model overfitting or underfitting. Everyday sounds mostly appear in noisy multi-source situations and have large intra-class variability that leads to high variations in acoustic characteristics (see Section Data Acquisition). Therefore, it is challenging to achieve good generalization based on a limited set of training examples. When overfitting, the model has learned peculiarities of the training examples rather than general acoustic characteristics which would generalize well to unseen test examples. This can be caused by a limited set of training examples, or by having a too expressive model, e.g. too many parameters given the training material size. When underfitting, the model is not complex enough or has not been trained sufficiently to be able to robustly classify unseen test examples.

Generally, supervised learning approaches can be categorized into two main types: generative and discriminative. In generative learning, the underlying class-conditional probability density is estimated explicitly based on learning examples. For each sound class the joint distribution \(p(x,y)\) is modeled separately, and Bayes’ rule is used to find the most probable class from which the input was generated by finding the maximum posterior probability \(p(y|x)\). Commonly used classifiers for audio content analysis that follow the generative learning approach include Gaussian mixture models (GMM) and hidden Markov models (HMM). In discriminative learning, the modeling concentrates on the boundaries between classes instead of the classes themselves. Data examples are used directly for defining the decision boundaries and finding a direct mapping between inputs to the system and the target outputs [Ng2001]. Examples of discriminative learning approaches used for audio content analysis include decision trees, support vector machines (SVM), and neural networks. The work in this thesis concentrates on approaches based on generative learning. Recently, the discriminative learning approaches have gained popularity in audio content analysis, primarily because of advancements in deep learning and neural networks and the increased size of available datasets for learning [Cakir2017, Mesaros2019a].

Recognition

Once the acoustic model is learned, it can be used for classifying sounds into predefined sound classes. An overview of the recognition process is illustrated in Figure 3.11. In the audio processing stage, the acoustic features are extracted for the test audio. The acoustic features are fed as input to the recognition stage, which uses the learned acoustic models to get class-presence information (probabilities or likelihoods depending on the method) for each input feature frame. In the post-processing stage, the class-presence probabilities are converted into class activity, a binary indication of a class being present or not within the current analysis frame. Depending on the target recognition task, the output format of the post-processing will be different. Classification methods can be used for detection by doing classification in short time segments (e.g. one second). The detection task aims to produce classification results at a sufficiently high time resolution so that they can be processed into onset and offset timestamps describing sound event activity.

Figure 3.11 Overview of the recognition process for audio content analysis.

Classification

In sound classification, the class-presence information of multiple short analysis frames from the audio segment to be classified is combined into a single classification output. In a single-label classification task, a single class label is assigned to an item, whereas in multi-label classification, multiple category labels are assigned to a single item.

Frame-level class-presence probabilities can be processed into a single-label classification output either by taking classification decisions at frame-level and combining decisions (hard voting) or by combining probabilities and making the decision at segment-level (soft voting). In the first approach, classification decisions are made at the frame-level by selecting the class with the highest probability, and majority voting is performed over these frame-level estimates to get the class with the highest number of occurrences [Mesaros2019a]. In the latter approach, the class-presence probabilities are combined either by summing or by averaging, and the class with the highest combined probability is selected as the classification result [Mesaros2018c]. This approach often results in a higher classification accuracy than hard voting at frame-level, because it gives more weight to more confident estimates.
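The difference between the two voting schemes shows up when a few frames are very confident and the rest are nearly undecided. The sketch below uses hypothetical frame-level probabilities for two classes. The function names are illustrative and not taken from any toolkit.

```python
from collections import Counter


def hard_vote(frame_probabilities):
    """Majority vote over frame-level decisions."""
    decisions = [max(range(len(p)), key=lambda c: p[c])
                 for p in frame_probabilities]
    return Counter(decisions).most_common(1)[0][0]


def soft_vote(frame_probabilities):
    """Average the probabilities over frames, then pick the best class."""
    n_frames = len(frame_probabilities)
    n_classes = len(frame_probabilities[0])
    means = [sum(p[c] for p in frame_probabilities) / n_frames
             for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: means[c])


# Two weakly confident frames favor class 0, one very confident frame favors class 1.
probabilities = [
    [0.55, 0.45],
    [0.55, 0.45],
    [0.05, 0.95],
]
hard_result = hard_vote(probabilities)
soft_result = soft_vote(probabilities)
```

Hard voting selects class 0 because it wins two of the three frames, while soft voting selects class 1 because the single confident frame outweighs the two uncertain ones.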

In multi-label classification, the number of active classes is usually unknown, and one cannot do classification just by selecting the class with the highest probability. The single-label classification scheme can be extended into a multi-label classification either by modifying how the system output is interpreted into activity or by adding extra classes to capture cases when target sound classes are not active. In the first approach, class presence probabilities from frames are summed or averaged similarly to the soft voting scheme, and a probability threshold is used to select the active classes. Probability thresholding to get activity estimates is called binarization and it is a common procedure with neural networks [Cakir2017, Mesaros2019a]. In the second approach, the non-activity of the class is explicitly modeled by introducing extra classes. One extra class, universal background model (UBM), can be added to represent the case when no target sound is active [Heittola2013a]. Another way to model non-activity with extra classes is to create class-wise classifiers such that each of these classifiers is able to recognize only the activity of that particular sound class [Cakir2015b]. Binary classifiers are trained with two classes: a positive class, when the specified sound is active, and a negative class when the specified sound is not active.
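The first approach, averaging followed by probability thresholding, can be sketched as follows. The threshold of 0.5 and the example probabilities are assumptions for illustration. In practice the threshold is a tunable parameter.

```python
def active_classes(frame_probabilities, class_labels, threshold=0.5):
    """Average class probabilities over frames and keep classes above threshold."""
    n_frames = len(frame_probabilities)
    active = []
    for c, label in enumerate(class_labels):
        mean_probability = sum(p[c] for p in frame_probabilities) / n_frames
        if mean_probability > threshold:
            active.append(label)
    return active


labels = ["dog barking", "car passing by", "person shouting"]
# Hypothetical frame-level probabilities for a two-frame segment.
probabilities = [
    [0.9, 0.7, 0.1],
    [0.8, 0.6, 0.2],
]
active = active_classes(probabilities, labels)
```

Unlike picking the single most probable class, thresholding can return any number of labels for the segment, including none.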

Detection

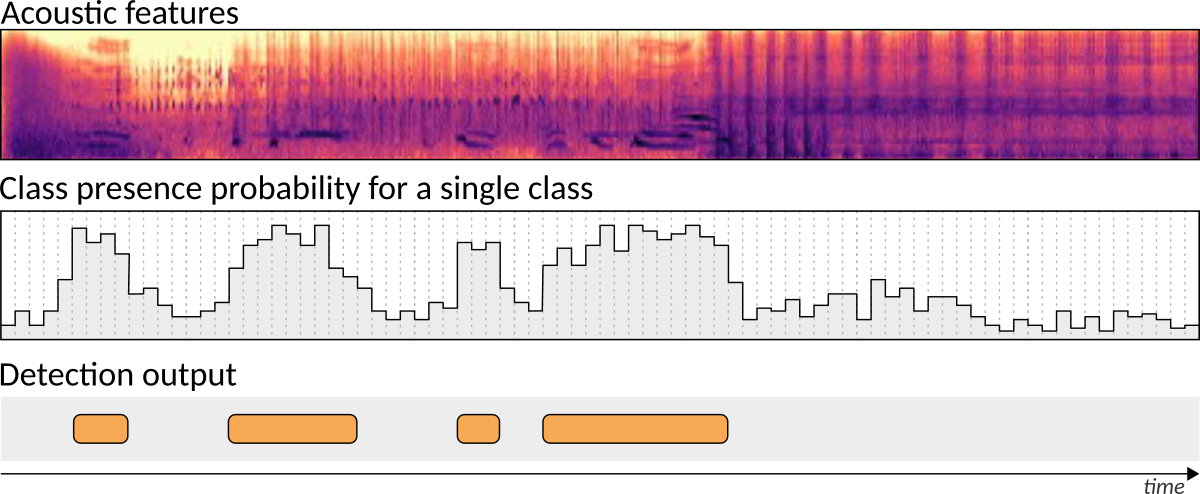

In sound event detection, class presence probabilities of consecutive analysis frames are converted into sound activity information, onset and offset timestamps of the active sound events. The process is a multi-class multi-label classification task at frame-level, and the aim is to process the classification output into estimates of sound event activity. The process is illustrated in Figure 3.12.

Figure 3.12 Converting class presence probability (middle panel) into sound class activity estimation with onset and offset timestamps (bottom panel).

Generally, sound events are much longer than the analysis window used (typical length 20-100 ms), making classification outputs of consecutive analysis frames correlated. Furthermore, everyday sounds have a characteristic temporal structure which is important for the recognition, and this structure spans several analysis frames. The temporal structure is taken into account in the detection stage by using temporal post-processing applied to the frame-wise class presence probabilities or the binary class presence estimates. The classification at frame level produces noisy results because feature distributions of sound classes overlap each other and short frames do not have enough information for robust classification. Median filtering using a sliding window can be used to filter out this classification noise and to smooth the results from consecutive frames [Mesaros2018c, Mesaros2019a]. In this process, the median of binary sound activity estimates within the processing window (e.g. one second worth of analysis frames) is output, and the window is shifted one frame forward. The temporal structure of a sound can be addressed in the acoustic model as well by incorporating contextual information in the classification, e.g. using recurrent layers in neural networks [Cakir2017], which makes post-processing in such approaches less important.
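The median filtering and the conversion of frame-wise activity into onset and offset timestamps can be sketched for a single sound class as follows. The three-frame window, the zero padding at the sequence edges, and the 20 ms hop are assumptions chosen to keep the example small.

```python
from statistics import median


def median_filter(activity, window_length):
    """Sliding-window median of a binary activity sequence (odd window_length)."""
    half = window_length // 2
    padded = [0] * half + list(activity) + [0] * half  # zero padding at the edges
    return [int(median(padded[i:i + window_length]))
            for i in range(len(activity))]


def activity_to_events(activity, hop_seconds):
    """Convert frame-wise binary activity into (onset, offset) pairs in seconds."""
    events = []
    onset = None
    for frame, active in enumerate(activity):
        if active and onset is None:
            onset = frame  # event starts at this frame
        elif not active and onset is not None:
            events.append((onset * hop_seconds, frame * hop_seconds))
            onset = None
    if onset is not None:  # event still active at the end of the signal
        events.append((onset * hop_seconds, len(activity) * hop_seconds))
    return events


# Noisy frame-level decisions: one spurious frame and one short gap.
raw_activity = [0, 1, 0, 0, 1, 1, 0, 1, 1, 1, 0, 0]
smoothed = median_filter(raw_activity, window_length=3)
events = activity_to_events(smoothed, hop_seconds=0.02)
```

The isolated active frame at index 1 is removed and the one-frame gap at index 6 is filled, leaving a single event that spans frames 4 to 9.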

Methods

This section introduces two classification techniques used in this thesis for audio content analysis: Gaussian mixture models and hidden Markov models. In addition, state-of-the-art classification techniques based on deep learning, and specifically deep neural networks, are briefly introduced.

Gaussian Mixture Model

The Gaussian mixture model is a probabilistic model that can be used to estimate normally distributed sub-populations within the training data in an unsupervised manner. It can be extended into a multi-class supervised classifier by fitting a separate GMM to the training data of each sound category. In the test stage, class-wise likelihoods \(p(x|y)\) are evaluated for an observation, and the class giving the maximum likelihood is selected; with equal class priors, this is also the class with the maximum posterior probability \(p(y|x)\). In sound classification, sub-populations modeled with the mixture model can be seen as variations in acoustic characteristics within a sound category. For example, in the case of a dog barking sound class, the sub-populations can be produced by small, medium, and large dogs with various types of barking as well as different portions of the barking sequence itself.

Before the recent emergence of deep learning, GMM was one of the most commonly used classification methods in sound classification tasks because of its good generalization properties. In automatic speech recognition, it has been used to model individual phonemes, while the transitions from one phoneme to another were modeled with hidden Markov models [Rabiner1993]. In speaker verification, a GMM representing all speakers, a universal background model, was adapted to model the target speaker, with the actual speaker verification done based on the likelihood ratio between the target model and UBM [Reynolds1995]. In music information retrieval, GMMs have been used to classify, for example, musical genre [Tzanetakis2002] and musical instruments [Heittola2009].

A GMM estimates the underlying probability density function (pdf) of the observations (acoustic features), and it is capable of representing arbitrarily shaped densities through a weighted mixture of \(N\) multivariate Gaussian distributions (also called normal distributions) [Pearson1893, Duda1973]. The model sums together multiple Gaussian distributions (components), and the whole model is parameterized by the mixture component weights, and the mean and variance of the individual component distributions. The model can be seen as a clustering algorithm with soft assignments, where each modeled data point could have been generated by any of the component distributions used in the model with a corresponding probability, i.e., each mixture component has some responsibility for generating a data point. The probability density for an observation \(\boldsymbol{x}\) from a class \(k\) is computed as

$$ \label{ch3:eq:GMM:mixture} p_{k}(\boldsymbol{x}) = \sum_{n=1}^{N}\omega_{n}\mathcal{N}(\boldsymbol{x} ; \boldsymbol{\mu}_{n},\boldsymbol{\Sigma}_{n}) \tag{3.5} $$

where \(\omega_{n}\) is the positive weight of the component \(n\) and the normal distribution \(\mathcal{N}(\boldsymbol{x} ; \boldsymbol{\mu}_{n},\boldsymbol{\Sigma}_{n})\) is defined by the mean vector \(\boldsymbol{\mu}_{n}\) and the covariance matrix \(\boldsymbol{\Sigma}_{n}\). Component weights \(\omega_{n}\) sum to unity. Figure 3.13 illustrates the basic principle of the mixture model in the one-variate case. The multivariate Gaussian density function is defined as

$$ \mathcal{N}(\boldsymbol{x} ; \boldsymbol{\mu},\boldsymbol{\Sigma}) = \frac{1}{\sqrt{(2\pi)^{d}|\boldsymbol{\Sigma}|}}e^{-\frac{1}{2}(\boldsymbol{x}-\boldsymbol{\mu})^{T}\boldsymbol{\Sigma}^{-1}(\boldsymbol{x}-\boldsymbol{\mu})} \tag{3.6} $$

where \(d\) corresponds to the length of the feature vector.

Figure 3.13 Example of a one-variate Gaussian mixture with four components.
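Eqs. 3.5 and 3.6 can be evaluated directly when the covariance matrices are diagonal, because the determinant is then the product of the variances and the quadratic form is a sum of scaled squared differences. The following sketch assumes this diagonal case and uses a hypothetical two-component, one-variate mixture as the example.

```python
import math


def gaussian_density(x, mean, variances):
    """Eq. 3.6 for a diagonal covariance matrix given as a list of variances."""
    d = len(x)
    determinant = math.prod(variances)
    exponent = -0.5 * sum((xi - mi) ** 2 / vi
                          for xi, mi, vi in zip(x, mean, variances))
    return math.exp(exponent) / math.sqrt((2.0 * math.pi) ** d * determinant)


def gmm_density(x, weights, means, variances):
    """Eq. 3.5: weighted sum of the component densities."""
    return sum(w * gaussian_density(x, m, v)
               for w, m, v in zip(weights, means, variances))


# Hypothetical one-variate mixture with two equally weighted components.
weights = [0.5, 0.5]
means = [[0.0], [4.0]]
variances = [[1.0], [1.0]]
density_at_zero = gmm_density([0.0], weights, means, variances)
```

Because the component weights sum to one, the mixture is itself a valid probability density, which can be checked numerically by integrating it over a wide interval.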

Model parameters \(\omega_{n}\), \(\boldsymbol{\mu}_{n}\), \(\boldsymbol{\Sigma}_{n}\) in Eq 3.5 are learned based on the training material for each class separately to allow multi-class classification. The training is implemented using the expectation-maximization (EM) algorithm where optimal model parameters are iteratively estimated [Dempster1977, McLachlan2008]. A latent variable \(\gamma\) is introduced for each data point to indicate the responsibility of each mixture component for generating a particular data point. The EM algorithm alternates between an expectation step and a maximization step: the first estimating the latent variable, the second updating the model parameters to maximize the likelihood based on the estimated latent variable. The process is often initialized by clustering the data with the k-means algorithm. The number of mixture components \(N\) can be optimized as a hyper-parameter using cross-validation, or it can be optimized during the training process as one of the model parameters using information criteria [Figueiredo1999, Heittola2014]. Depending on the level of intra-class variation and the amount of training data, the best performing \(N\) can also vary across classes.
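The alternation between the expectation and maximization steps can be illustrated with a one-variate GMM. This is a simplified sketch: the initial parameters are set by hand rather than by k-means, the number of components is fixed at two, and the small variance floor is an assumption added to keep the example numerically safe.

```python
import math


def normal_pdf(x, mean, variance):
    """One-variate Gaussian density."""
    return (math.exp(-0.5 * (x - mean) ** 2 / variance)
            / math.sqrt(2.0 * math.pi * variance))


def em_step(data, weights, means, variances):
    """One EM iteration for a one-variate GMM; returns updated parameters."""
    n_components = len(weights)
    # E-step: responsibility of each component for each data point.
    responsibilities = []
    for x in data:
        joint = [weights[n] * normal_pdf(x, means[n], variances[n])
                 for n in range(n_components)]
        total = sum(joint)
        responsibilities.append([j / total for j in joint])
    # M-step: re-estimate the parameters from the responsibilities.
    new_weights, new_means, new_variances = [], [], []
    for n in range(n_components):
        resp_sum = sum(r[n] for r in responsibilities)
        mean = sum(r[n] * x for r, x in zip(responsibilities, data)) / resp_sum
        variance = sum(r[n] * (x - mean) ** 2
                       for r, x in zip(responsibilities, data)) / resp_sum
        new_weights.append(resp_sum / len(data))
        new_means.append(mean)
        new_variances.append(max(variance, 1e-6))  # variance floor (assumption)
    return new_weights, new_means, new_variances


def log_likelihood(data, weights, means, variances):
    """Total log-likelihood of the data under the mixture."""
    return sum(math.log(sum(w * normal_pdf(x, m, v)
                            for w, m, v in zip(weights, means, variances)))
               for x in data)


# Toy data with two clearly separated sub-populations.
data = [-0.2, 0.0, 0.1, 0.3, 4.8, 5.0, 5.1, 5.3]
params = ([0.5, 0.5], [1.0, 4.0], [1.0, 1.0])
initial_ll = log_likelihood(data, *params)
for _ in range(20):
    params = em_step(data, *params)
final_ll = log_likelihood(data, *params)
```

After a few iterations the component means settle at the centers of the two sub-populations, and the log-likelihood of the training data is higher than with the initial parameters.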

The parameter optimization process is commonly simplified by using diagonal covariance matrices \(\boldsymbol{\Sigma}_{n}\) to restrict the parameter space. This simplifies the calculations, but at the same time prevents the model from capturing correlations between variables. However, the models trained on decorrelated features perform well in practice. One can use decorrelated acoustic features such as MFCC or use e.g. principal component analysis to decorrelate other acoustic features before using them with a classifier.

In the inference stage, the likelihood of an observation to be generated from each class-wise model is estimated using Eq 3.5. The predicted class is selected based on the maximum likelihood classification principle. As discussed earlier, one can use either hard voting or soft voting to get a single-label classification output for an audio segment containing many analysis frames. With GMMs the usual approach is based on the soft voting scheme, where frame-level likelihoods are accumulated class-wise over the whole segment and the predicted class is selected based on these accumulated likelihoods. The aim is to find class \(k\) which has highest likelihood \(L\) for the set of observations \(X=\boldsymbol{x_1},\boldsymbol{x_2},\cdots,\boldsymbol{x_M}\):

$$ \label{ch3:eq:GMM:classification} L(X;\lambda_{k})=\prod_{m=1}^{M}p_{k}(\boldsymbol{x_{m}}) \tag{3.7} $$

where \(\lambda_{k}\) denotes GMM for class \(k\) and \(p_{k}(\boldsymbol{x_{m}})\) is the probability density function value for observation \(\boldsymbol{x_{m}}\). The general assumption in this approach is that consecutive analysis frames are statistically independent.
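In practice, the product in Eq. 3.7 is usually evaluated as a sum of logarithms, since multiplying many small density values quickly underflows in floating-point arithmetic. The sketch below classifies a short segment with two hypothetical one-variate class models. The class names and parameter values are invented for illustration.

```python
import math


def gmm_log_density(x, weights, means, variances):
    """log p_k(x) for a one-variate GMM (Eq. 3.5 in the log domain)."""
    density = sum(w * math.exp(-0.5 * (x - m) ** 2 / v)
                  / math.sqrt(2.0 * math.pi * v)
                  for w, m, v in zip(weights, means, variances))
    return math.log(density)


def classify_segment(observations, class_models):
    """Pick the class maximizing the log of Eq. 3.7 (sum of frame log-likelihoods)."""
    scores = {label: sum(gmm_log_density(x, *model) for x in observations)
              for label, model in class_models.items()}
    return max(scores, key=scores.get), scores


# Hypothetical class models given as (weights, means, variances).
class_models = {
    "park": ([1.0], [0.0], [1.0]),
    "street": ([0.5, 0.5], [3.0, 5.0], [1.0, 1.0]),
}
observations = [2.8, 3.5, 4.9, 5.2]
predicted, scores = classify_segment(observations, class_models)
```

Taking the logarithm does not change which class has the highest score, so the decision is the same as with the product in Eq. 3.7.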

Deep learning

The state-of-the-art computational audio content analysis systems are nowadays almost without exception based on deep learning approaches, and they are showing exceptional performance over classical machine learning approaches. The work included in this thesis was carried out before the era of deep learning and does not utilize any deep learning. However, a brief introduction to deep learning is given here to provide the reader with a modern perspective.

The data processing in deep learning is inspired by information processing in the human brain during the process of acquiring new knowledge and the process of recalling stored knowledge. The main idea of the data processing in deep learning is to model complex concepts, such as sound event classes, by combining simpler and more abstract concepts learned automatically from data. The modeling is done using an artificial neural network, a deep multilayered structure, where each layer is constructed from multiple artificial neurons, and neurons between layers are connected. Deep learning is used for classification as a discriminative learning approach, and robust modelling is ensured by using a large amount of learning examples.

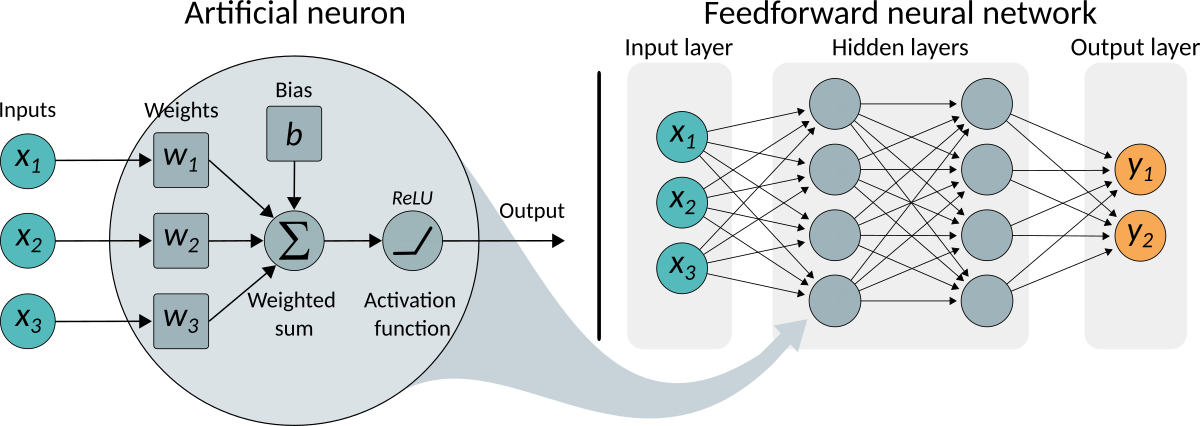

An artificial neuron is an elementary unit of artificial neural networks. A neuron has \(n\) inputs and each input has a weight parameter \(w_i\). The weighted sum of the inputs, summed with a constant bias factor \(b\), is passed through an activation function to produce the neuron’s output. By using a non-linear activation function, the neural network can model highly complex relationships in the data. A classical example of an artificial neural network is the feedforward neural network (FNN), where information between layers flows only from the input towards the output without feedback connections [Goodfellow2016]. The basic inner structure of an artificial neuron and the feedforward network structure are shown in Figure 3.15.

Figure 3.15 Overview of an artificial neuron (left panel) and the basic structure of a feedforward neural network (right panel).
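The computation performed by a single neuron, and by a small feedforward network built from such neurons, can be written out as follows. The weights, biases, and layer sizes are arbitrary values chosen for illustration, not learned parameters.

```python
import math


def relu(z):
    """Rectified linear unit."""
    return max(0.0, z)


def sigmoid(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus bias, passed through an activation."""
    return activation(sum(w * x for w, x in zip(weights, inputs)) + bias)


def dense_layer(inputs, weight_rows, biases, activation):
    """A layer is a list of neurons that all see the same inputs."""
    return [neuron(inputs, w, b, activation)
            for w, b in zip(weight_rows, biases)]


# Tiny feedforward network: 2 inputs -> 2 hidden ReLU neurons -> 1 sigmoid output.
inputs = [1.0, 2.0]
hidden = dense_layer(inputs, [[0.5, -1.0], [1.0, 1.0]], [0.0, -1.0], relu)
output = neuron(hidden, [1.0, 0.5], 0.0, sigmoid)
```

The first hidden neuron receives a negative weighted sum and is silenced by the ReLU, so only the second hidden neuron contributes to the output.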

A network consists of an input layer, an output layer, and a number of intermediate layers called hidden layers for which the training data does not provide desired outputs. During the learning process, parameters \(w_i\) and \(b\) for each neuron in the network are iteratively optimized to produce the desired target outputs (reference labels) at the output layer given the input data (acoustic features) to the network. The learning process uses the back-propagation algorithm; at each learning iteration, a loss function is calculated between the output of the network and the target output for the learning examples and this loss is fed back through the network and the network parameters are adjusted to lower the loss for the next iteration. The non-linear optimization method called gradient descent is used to update the network parameters in the opposite direction of the gradient of the loss function after a small set of learning examples (a batch). The activation function for neurons in the output layer is selected based on the classification task; for a single-label classification, softmax activation is commonly used, whereas for a multi-label classification sigmoid activation is used. The hidden layers often use rectified linear units (ReLU) [Glorot2011] as activation function.
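The output activations and the parameter update can be illustrated with a single sigmoid neuron trained with gradient descent. This sketch makes several simplifying assumptions: there are no hidden layers, so no back-propagation through layers is needed; the loss is binary cross-entropy; and the toy batch and learning rate are arbitrary.

```python
import math


def softmax(logits):
    """Softmax for single-label outputs; values sum to one."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # shift for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Sigmoid for multi-label outputs; each class is scored independently."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def cross_entropy(weights, bias, batch):
    """Mean binary cross-entropy loss over a batch of (input, target) pairs."""
    total = 0.0
    for x, target in batch:
        output = predict(weights, bias, x)
        total -= target * math.log(output) + (1 - target) * math.log(1.0 - output)
    return total / len(batch)


def gradient_step(weights, bias, batch, learning_rate):
    """One gradient descent step for a single sigmoid neuron."""
    grad_w = [0.0] * len(weights)
    grad_b = 0.0
    for x, target in batch:
        error = predict(weights, bias, x) - target  # d(loss)/d(weighted sum)
        grad_w = [g + error * xi for g, xi in zip(grad_w, x)]
        grad_b += error
    n = len(batch)
    # Move the parameters against the gradient of the loss.
    new_weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
    return new_weights, bias - learning_rate * grad_b / n


batch = [([0.0], 0), ([1.0], 0), ([2.0], 1), ([3.0], 1)]
weights, bias = [0.0], 0.0
loss_before = cross_entropy(weights, bias, batch)
weights, bias = gradient_step(weights, bias, batch, learning_rate=0.5)
loss_after = cross_entropy(weights, bias, batch)
```

A single step already lowers the loss on this batch. In a deep network the same update is applied to every layer, with back-propagation supplying the gradients for the hidden layers.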

The feedforward neural network was the first deep learning approach successfully used for audio content analysis applications, and it showed a clear improvement in performance over the established GMM- and HMM-based approaches, for example, in acoustic scene classification [Mesaros2019b] and sound event detection [Gencoglu2014, Cakir2015a, Mesaros2019b]. A short temporal context can be taken into account in FNN-based systems by using context windowing at the network input (see Section Dynamic Features). The two main problems with FNNs for audio content analysis are their sensitivity to variations in time and frequency, caused by the fixed connections between the input and the hidden layers of the network, and their inability to model the long temporal structures essential for the recognition of some sound events.
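Context windowing at the network input can be sketched as stacking each feature frame with its neighbours into one longer vector. The function name, the symmetric window, and the edge handling (repeating the first and last frame) are assumptions made for this illustration; the thesis describes its own variant in the section on dynamic features.

```python
def add_context(frames, context=2):
    """Concatenate each frame with `context` neighbours on both sides.

    Edges are padded by repeating the first or last frame (an assumption).
    """
    last = len(frames) - 1
    windowed = []
    for t in range(len(frames)):
        stacked = []
        for offset in range(-context, context + 1):
            neighbour = min(max(t + offset, 0), last)  # clamp to valid range
            stacked.extend(frames[neighbour])
        windowed.append(stacked)
    return windowed


# Four frames with two hypothetical feature values each.
features = [[1, 10], [2, 20], [3, 30], [4, 40]]
for row in add_context(features, context=1):
    print(row)
```

With `context=1` each network input covers three consecutive frames, so the input dimension grows from 2 to 6 while the number of frames stays the same.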

Convolutional neural networks (CNNs) are nowadays preferred over FNNs because they generally produce more robust models [Mesaros2018b, Mesaros2019b]. A CNN is a model that is invariant to shifts in time and frequency and is designed to take advantage of the 2D structure of the input [Lecun1998]. This is achieved through local connectivity inside the network, which allows parts of the network to specialize in different high-level features of the data during the learning process. The final classification output is produced from the learned high-level features by a few feedforward layers before the output layer. Convolutional recurrent neural networks (CRNNs) are often used for sound event detection [Cakir2017, Adavanne2017, Xu2018]. These networks resemble CNNs, but they add layers containing feedback connections that capture long temporal structures in the data.
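The shift invariance mentioned above can be illustrated with a single shared kernel followed by global max pooling. The convolution itself only moves its response along with the input; the pooling step is what makes the final value independent of position. The sketch below uses cross-correlation, as deep learning libraries commonly do. The kernel, the pattern, the toy input size, and the reading of rows as frequency and columns as time are all assumptions chosen for illustration.

```python
def conv2d_valid(image, kernel):
    """2D cross-correlation without padding; the same kernel is applied everywhere."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def global_max_pool(feature_map):
    """Keep only the strongest response, discarding where it occurred."""
    return max(max(row) for row in feature_map)


def place_pattern(pattern, top, left, height=6, width=8):
    """Put a small pattern onto an otherwise empty time-frequency grid."""
    canvas = [[0.0] * width for _ in range(height)]
    for i, row in enumerate(pattern):
        for j, value in enumerate(row):
            canvas[top + i][left + j] = value
    return canvas


pattern = [[1.0, 0.0], [0.0, 1.0]]    # hypothetical local spectro-temporal shape
kernel = [[1.0, -1.0], [-1.0, 1.0]]   # hypothetical filter matching that shape

early_low = place_pattern(pattern, top=0, left=1)
late_high = place_pattern(pattern, top=3, left=5)

response_a = global_max_pool(conv2d_valid(early_low, kernel))
response_b = global_max_pool(conv2d_valid(late_high, kernel))
print(response_a, response_b)
```

Both inputs give the same pooled response even though the pattern occurs at different time and frequency positions, whereas a fully connected first layer would need separate weights for each position.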

References

| [Adavanne2017] | Adavanne, S., P. Pertilä, and T. Virtanen. 2017. “Sound Event Detection Using Spatial Features and Convolutional Recurrent Neural Network.” In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 771–75. |

| [Battaglino2015] | Battaglino, D., L. Lepauloux, L. Pilati, and N. Evans. 2015. “Acoustic Context Recognition Using Local Binary Pattern Codebooks.” In 2015 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 1–5. |

| [Bishop2006] | Bishop, C. M. 2006. Pattern Recognition and Machine Learning. New York, USA: Springer. |

| [Brown1991] | Brown, Judith C. 1991. “Calculation of a Constant Q Spectral Transform.” The Journal of the Acoustical Society of America 89 (1): 425–34. |

| [Butko2011a] | Butko, T., C. Canton-Ferrer, C. Segura, X. Giró, C. Nadeu, J. Hernando, and J. Casas. 2011. “Two-Source Acoustic Event Detection and Localization: Online Implementation in a Smart-Room.” In 19th European Signal Processing Conference (EUSIPCO), 1317–21. |

| [Butko2011b] | Butko, Taras, Cristian Canton-Ferrer, Carlos Segura, Xavier Giró, Climent Nadeu, Javier Hernando, and Josep R. Casas. 2011. “Acoustic Event Detection Based on Feature-Level Fusion of Audio and Video Modalities.” EURASIP Journal on Advances in Signal Processing 2011 (1). |

| [Cakir2015a] | Çakır, Emre, Toni Heittola, Heikki Huttunen, and Tuomas Virtanen. 2015. “Polyphonic Sound Event Detection Using Multi Label Deep Neural Networks.” In The International Joint Conference on Neural Networks 2015 (IJCNN 2015). |

| [Cakir2015b] | Çakır, Emre, Toni Heittola, Heikki Huttunen, and Tuomas Virtanen. 2015. “Multi-Label Vs. Combined Single-Label Sound Event Detection with Deep Neural Networks.” In 23rd European Signal Processing Conference (EUSIPCO). |

| [Cakir2017] | Çakır, Emre, Giambattista Parascandolo, Toni Heittola, Heikki Huttunen, and Tuomas Virtanen. 2017. “Convolutional Recurrent Neural Networks for Polyphonic Sound Event Detection.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 25 (6): 1291–1303. |

| [Cakir2018] | Çakır, Emre, and Tuomas Virtanen. 2018. “End-to-End Polyphonic Sound Event Detection Using Convolutional Recurrent Neural Networks with Learned Time-Frequency Representation Input.” In 2018 International Joint Conference on Neural Networks (IJCNN), 1–7. |

| [Casey2001] | Casey, Michael. 2001. “General Sound Classification and Similarity in MPEG-7.” Organised Sound 6 (2): 153–64. |

| [Casey2008] | Casey, M. A., R. Veltkamp, M. Goto, M. Leman, C. Rhodes, and M. Slaney. 2008. “Content-Based Music Information Retrieval: Current Directions and Future Challenges.” Proceedings of the IEEE 96 (4): 668–96. |

| [Davis1980] | Davis, S. B., and P. Mermelstein. 1980. “Comparison of Parametric Representations for Monosyllabic Word Recognition in Continuously Spoken Sentences.” IEEE Transactions on Acoustics, Speech, and Signal Processing 28: 357–66. |

| [Dempster1977] | Dempster, A. P., N. M. Laird, and D. B. Rubin. 1977. “Maximum Likelihood from Incomplete Data via the EM Algorithm.” Journal of the Royal Statistical Society, Series B 39 (1): 1–38. |

| [Dennis2013] | Dennis, J., H. D. Tran, and E. S. Chng. 2013. “Image Feature Representation of the Subband Power Distribution for Robust Sound Event Classification.” IEEE Transactions on Audio, Speech, and Language Processing 21 (2): 367–77. |

| [Diment2013] | Diment, Aleksandr, Toni Heittola, and Tuomas Virtanen. 2013. “Semi-Supervised Learning for Musical Instrument Recognition.” In 21st European Signal Processing Conference (EUSIPCO). |

| [Du2013] | Du, Ke-Lin, and M. N. S. Swamy. 2013. Neural Networks and Statistical Learning. London, UK: Springer-Verlag London. |

| [Duda1973] | Duda, Richard O., and Peter E. Hart. 1973. Pattern Classification and Scene Analysis. New York, NY, USA: John Wiley & Sons. |

| [Eronen2006] | Eronen, A. J., V. T. Peltonen, J. T. Tuomi, A. P. Klapuri, S. Fagerlund, T. Sorsa, G. Lorho, and J. Huopaniemi. 2006. “Audio-Based Context Recognition.” IEEE Transactions on Audio, Speech, and Language Processing 14 (1): 321–29. |

| [Figueiredo1999] | Figueiredo, Mário A. T., José M. N. Leitão, and Anil K. Jain. 1999. “On Fitting Mixture Models.” In Energy Minimization Methods in Computer Vision and Pattern Recognition, edited by Edwin R. Hancock and Marcello Pelillo, 54–69. Berlin, Heidelberg: Springer Berlin Heidelberg. |

| [Fonseca2018] | Fonseca, Eduardo, Manoj Plakal, Frederic Font, Daniel P. W. Ellis, Xavier Favory, Jordi Pons, and Xavier Serra. 2018. “General-Purpose Tagging of Freesound Audio with AudioSet Labels: Task Description, Dataset, and Baseline.” In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2018 Workshop (DCASE2018), 69–73. |

| [Foster2015] | Foster, P., S. Sigtia, S. Krstulovic, J. Barker, and M. D. Plumbley. 2015. “CHiME-Home: A Dataset for Sound Source Recognition in a Domestic Environment.” In 2015 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 1–5. |

| [Furui1981] | Furui, S. 1981. “Cepstral Analysis Technique for Automatic Speaker Verification.” IEEE Transactions on Acoustics, Speech, and Signal Processing 29 (2): 254–72. |

| [Gales2008] | Gales, M. J. F., and Steve Young. 2008. “The Application of Hidden Markov Models in Speech Recognition.” Foundations and Trends in Signal Processing 1 (3): 195–304. |

| [Geiger2013] | Geiger, J. T., B. Schuller, and G. Rigoll. 2013. “Large-Scale Audio Feature Extraction and SVM for Acoustic Scene Classification.” In 2013 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 1–4. |

| [Gencoglu2014] | Gencoglu, O., T. Virtanen, and H. Huttunen. 2014. “Recognition of Acoustic Events Using Deep Neural Networks.” In 22nd European Signal Processing Conference (EUSIPCO), 506–10. |

| [Glorot2011] | Glorot, Xavier, Antoine Bordes, and Yoshua Bengio. 2011. “Deep Sparse Rectifier Neural Networks.” In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, edited by Geoffrey Gordon, David Dunson, and Miroslav Dudík, 15:315–23. Proceedings of Machine Learning Research. Fort Lauderdale, FL, USA: PMLR. |

| [Goodfellow2016] | Goodfellow, Ian, Yoshua Bengio, and Aaron Courville. 2016. Deep Learning. Cambridge, MA, USA: The MIT Press. |

| [Gygi2007] | Gygi, Brian, and Valeriy Shafiro. 2007. “Environmental Sound Research as It Stands Today.” Proceedings of Meetings on Acoustics 1 (1). |

| [Heittola2009] | Heittola, T., A. Klapuri, and T. Virtanen. 2009. “Musical Instrument Recognition in Polyphonic Audio Using Source-Filter Model for Sound Separation.” In Proceedings 10th International Society for Music Information Retrieval Conference (ISMIR), 327–32. |

| [Heittola2010b] | Heittola, Toni, Annamaria Mesaros, Antti Eronen, and Tuomas Virtanen. 2010. “Audio Context Recognition Using Audio Event Histograms.” In Proceedings of 2010 European Signal Processing Conference, 1272–76. Aalborg, Denmark. |

| [Heittola2011] | Heittola, Toni, Annamaria Mesaros, Tuomas Virtanen, and Antti Eronen. 2011. “Sound Event Detection in Multisource Environments Using Source Separation.” In Workshop on Machine Listening in Multisource Environments, 36–40. Florence, Italy. |

| [Heittola2013a] | Heittola, Toni, Annamaria Mesaros, Antti Eronen, and Tuomas Virtanen. 2013. “Context-Dependent Sound Event Detection.” EURASIP Journal on Audio, Speech and Music Processing 2013 (1), 13 pages. |

| [Heittola2013b] | Heittola, Toni, Annamaria Mesaros, Tuomas Virtanen, and Moncef Gabbouj. 2013. “Supervised Model Training for Overlapping Sound Events Based on Unsupervised Source Separation.” In Proceedings of the 35th International Conference on Acoustics, Speech, and Signal Processing, 8677–81. Vancouver, Canada. |

| [Heittola2014] | Heittola, Toni, Annamaria Mesaros, Dani Korpi, Antti Eronen, and Tuomas Virtanen. 2014. “Method for Creating Location-Specific Audio Textures.” EURASIP Journal on Audio, Speech and Music Processing 1 (9). |

| [Heittola2018] | Heittola, Toni, Emre Çakır, and Tuomas Virtanen. 2018. “The Machine Learning Approach for Analysis of Sound Scenes and Events.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 13–40. Cham, Switzerland: Springer Verlag. |

| [Hershey2017] | Hershey, S., S. Chaudhuri, D. P. W. Ellis, J. F. Gemmeke, A. Jansen, R. C. Moore, M. Plakal, et al. 2017. “CNN Architectures for Large-Scale Audio Classification.” In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 131–35. |

| [Kim2019] | Kim, T., J. Lee, and J. Nam. 2019. “Comparison and Analysis of SampleCNN Architectures for Audio Classification.” IEEE Journal of Selected Topics in Signal Processing 13 (2): 285–97. |

| [Kobayashi2014] | Kobayashi, T., and J. Ye. 2014. “Acoustic Feature Extraction by Statistics Based Local Binary Pattern for Environmental Sound Classification.” In 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 3052–56. |

| [Lecun1998] | Lecun, Y., L. Bottou, Y. Bengio, and P. Haffner. 1998. “Gradient-Based Learning Applied to Document Recognition.” Proceedings of the IEEE 86 (11): 2278–2324. |

| [Li2016] | Li, Jinyu, Li Deng, Reinhold Haeb-Umbach, and Yifan Gong. 2016. Robust Automatic Speech Recognition. Waltham, MA, USA: Academic Press. |

| [McLachlan2008] | McLachlan, Geoffrey J., and Thriyambakam Krishnan. 2008. The EM Algorithm and Extensions. 2nd ed. Vol. 382. Hoboken, NJ, USA: John Wiley & Sons. |

| [Mesaros2010] | Mesaros, Annamaria, Toni Heittola, Antti Eronen, and Tuomas Virtanen. 2010. “Acoustic Event Detection in Real Life Recordings.” In Proceedings of 2010 European Signal Processing Conference, 1267–71. Aalborg, Denmark. |

| [Mesaros2016] | Mesaros, Annamaria, Toni Heittola, and Tuomas Virtanen. 2016. “TUT Database for Acoustic Scene Classification and Sound Event Detection.” In 24th European Signal Processing Conference 2016 (EUSIPCO 2016), 1128–32. |

| [Mesaros2018] | Mesaros, Annamaria, Toni Heittola, and Dan Ellis. 2018. “Datasets and Evaluation.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 147–79. Cham, Switzerland: Springer Verlag. |

| [Mesaros2018b] | Mesaros, Annamaria, Toni Heittola, and Tuomas Virtanen. 2018. “A Multi-Device Dataset for Urban Acoustic Scene Classification.” In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2018 Workshop (DCASE2018), 9–13. |

| [Mesaros2018c] | Mesaros, Annamaria, Toni Heittola, Emmanouil Benetos, Peter Foster, Mathieu Lagrange, Tuomas Virtanen, and Mark D. Plumbley. 2018. “Detection and Classification of Acoustic Scenes and Events: Outcome of the DCASE 2016 Challenge.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 26 (2): 379–93. |

| [Mesaros2019a] | Mesaros, Annamaria, Aleksandr Diment, Benjamin Elizalde, Toni Heittola, Emmanuel Vincent, Bhiksha Raj, and Tuomas Virtanen. 2019. “Sound Event Detection in the DCASE 2017 Challenge.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 27 (6): 992–1006. |

| [Mesaros2019b] | Mesaros, Annamaria, Toni Heittola, and Tuomas Virtanen. 2019. “Acoustic Scene Classification in DCASE 2019 Challenge: Closed and Open Set Classification and Data Mismatch Setups.” In Proceedings of the Detection and Classification of Acoustic Scenes and Events 2019 Workshop (DCASE2019), 164–68. |

| [Murphy2012] | Murphy, Kevin P. 2012. Machine Learning: A Probabilistic Perspective. Cambridge, MA, USA: The MIT Press. |

| [Ng2001] | Ng, Andrew Y., and Michael I. Jordan. 2002. “On Discriminative Vs. Generative Classifiers: A Comparison of Logistic Regression and Naive Bayes.” In Advances in Neural Information Processing Systems 14, edited by T. G. Dietterich, S. Becker, and Z. Ghahramani, 841–48. MIT Press. |

| [OShaughnessy2000] | O’Shaughnessy, Douglas. 2000. Speech Communication: Human and Machine. 2nd ed. New York, NY, USA: IEEE Press. |

| [Oppenheim1999] | Oppenheim, A. V., R. W. Schafer, and J. R. Buck. 1999. Discrete-Time Signal Processing. Upper Saddle River, NJ, USA: Prentice Hall. |

| [Pearson1893] | Pearson, Karl. 1894. “Contributions to the Mathematical Theory of Evolution.” Philosophical Transactions of the Royal Society of London. A 185: 71–110. |