This page presents a slightly revised version of the chapter "Sounds in Everyday Environments" from my PhD thesis "Computational Audio Content Analysis in Everyday Environments". The chapter explores the diverse acoustic characteristics of everyday settings and lays the groundwork for understanding how computational methods can be applied to analyze and interpret these complex soundscapes.

Publication

Toni Heittola.

Computational Audio Content Analysis in Everyday Environments.

PhD thesis, Tampere University, June 2021.

Abstract

Our everyday environments are full of sounds that play a vital role in providing us with information and allowing us to understand what is happening around us. Humans have formed strong associations between physical events in their environment and the sounds that these events produce. Such associations are described using textual labels, sound events, which allow us to understand, recognize, and interpret the concepts behind sounds. Examples of such sound events are dog barking, person shouting, or car passing by. This thesis deals with computational methods for audio content analysis of everyday environments. Along with the increased usage of digital audio in our everyday life, automatic audio content analysis has become an increasingly sought-after ability. Content analysis enables an in-depth understanding of what was happening in the environment when the audio was captured, and this further facilitates applications that can accurately react to the events in the environment. The methods proposed in this thesis focus on sound event detection, the task of recognizing and temporally locating sound events within an audio signal. They address the development of methods dealing with a large set of sound classes, the detection of multiple sounds, and the evaluation of such methods. The work presented in this thesis focuses on developing methods that allow the detection of multiple overlapping sound events and robust acoustic model training based on mixture audio containing overlapping sounds. Starting with an HMM-based approach for prominent sound event detection, the work advanced by extending it to polyphonic detection using multiple Viterbi iterations or sound source separation. These polyphonic sound event detection systems were based on a collection of generative classifiers producing multiple labels for the same time instance, which doubled or in some cases tripled the detection performance.
As an alternative approach, polyphonic detection was implemented using class-wise activity detectors. The activity of each event class was detected independently, and the class-wise event sequences were merged to produce the polyphonic system output. Polyphonic detection substantially increased the applicability of the methods in everyday environments. For the evaluation of methods, the work proposed a new metric for polyphonic sound event detection that takes polyphony into account. The new metric, a segment-based F-score, provides rigorous definitions for correct and erroneous detections. It is also more suitable for comparing polyphonic annotations and polyphonic system outputs than the previously used metrics, and it has since become one of the standard metrics in the research field. Part of this thesis studies sound events as constituent parts of the acoustic scene, based on the contextual information provided by their co-occurrence. This information was used for both sound event detection and acoustic scene classification. In sound event detection, context information was used to identify the acoustic scene and thereby narrow down the selection of possible sound event classes, which allowed the use of context-dependent acoustic models and event priors. This approach provided a moderate yet consistent performance increase across all tested acoustic scene types and enabled the detection system to be easily expanded to new scenes. In acoustic scene classification, the scenes were identified based on the distinctive, scene-specific sound events detected, with performance comparable to traditional approaches. The fusion of these two approaches showed a significant further increase in performance. The thesis also makes a significant contribution to the development of tools for open research in the field, such as standardized evaluation protocols, and the release of open datasets, benchmark systems, and open-source tools.

@phdthesis{phdthesis,

author = "Heittola, Toni",

title = "Computational Audio Content Analysis in Everyday Environments",

school = "Tampere University",

year = "2021",

month = "6",


}
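The abstract above mentions the segment-based F-score. The following is a minimal sketch of the idea. It assumes that the reference annotation and the system output have already been converted into binary activity matrices on a common segment grid, with rows as segments and columns as sound event classes. Within each segment, a class active in both counts as a true positive, a class active only in the system output counts as a false positive, and a class active only in the reference counts as a false negative. The counts are accumulated over all segments before computing F = 2TP / (2TP + FP + FN). The function name and input layout are illustrative assumptions, not the interface of any published toolbox.

```python
import numpy as np


def segment_based_f_score(reference: np.ndarray, estimated: np.ndarray) -> float:
    """Micro-averaged segment-based F-score (illustrative sketch).

    reference, estimated: binary arrays of shape (n_segments, n_classes),
    where 1 means the class is active somewhere within that segment.
    """
    reference = np.asarray(reference, dtype=bool)
    estimated = np.asarray(estimated, dtype=bool)
    if reference.shape != estimated.shape:
        raise ValueError("reference and estimated must have the same shape")

    # Intermediate statistics accumulated over all segments and classes.
    tp = np.sum(reference & estimated)   # active in both
    fp = np.sum(~reference & estimated)  # active only in the system output
    fn = np.sum(reference & ~estimated)  # active only in the reference

    denominator = 2 * tp + fp + fn
    # Convention chosen for this sketch: 0.0 when nothing is active anywhere.
    return float(2 * tp / denominator) if denominator > 0 else 0.0


# Three segments (e.g. one second each) and two classes, e.g. "car passing by" and "speech".
ref = np.array([[1, 0], [1, 1], [0, 1]])
est = np.array([[1, 0], [1, 0], [1, 1]])
print(segment_based_f_score(ref, est))  # 0.75  (TP = 3, FP = 1, FN = 1)
```

The segment length controls how strict the temporal matching is. One-second segments are a commonly used choice, but the grid here is only an example.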

Sounds in Everyday Environments

Our everyday environments are naturally full of sounds. However, not all of them are equally relevant to the listener. Our auditory perception has evolved to capture meaningful sounds, as this was necessary for finding food, avoiding hazardous situations, and communicating with other humans [Warren1976]. The sounds in our everyday environments can be grouped roughly into three perceptual groups: speech, music, and everyday sounds [Ballas1993, Gygi2007a]. Speech almost always refers to sound that is produced by the human speech production system and carries linguistic content. It is arguably the most important sound type in our everyday environments because of its role in communication and social interaction. Music, on the other hand, is structured sound organized to convey aesthetic intent. Everyday sounds, the third perceptual group, are the most diverse in terms of sound types and include all other sounds in our everyday environments.

Historically, the study of auditory perception has focused mainly on speech and music under tightly controlled experimental conditions. In the last few decades, however, everyday sounds have been increasingly studied. These studies take an ecological approach to auditory perception. They study perception in natural environments and focus on the events that create the sound rather than on the specific psychoacoustics of the sound [Gaver1993a, Gaver1993b]. Speech and music have a strong temporal, spectral, and semantic structure on which auditory perception can rely. In contrast, everyday sounds do not have a predefined or recurring structure like speech and music, and therefore audio containing everyday sounds is often referred to as unstructured audio. For everyday sounds, the meaning of the sound is commonly inferred directly from its auditory properties (nomic mapping). The perception of speech and music, by contrast, relies more on arbitrary, learned associations (symbolic mapping) [Gaver1986]. Sequences of everyday sounds do not follow syntactic rules the way speech and music do, although some short sequences of sounds do carry meaning [Ballas1991]. The main properties of speech, music, and everyday sounds are collected in Table 2.1.

Table 2.1 Comparison of the spectral, temporal, and semantic structure of speech, music, and everyday sounds [Gygi2001].

| | Speech | Music | Everyday sounds |
|---|---|---|---|
| General characteristics | produced by human speech system, analysis typically based on phonemes | produced by musical instruments, analysis typically based on notes | produced by any sound-producing events, analysis typically based on events |
| Spectral structure | mostly harmonic, some inharmonic parts | mostly harmonic, some inharmonic parts | unknown proportion of harmonic to inharmonic parts |
| Temporal structure | more steady-state than dynamic | mix of steady-state and transients, strong periodicity | unknown ratio between steady-state and dynamic, variable periodicity |
| Semantic structure | symbolic mapping, grammatical rules | symbolic mapping, music theory | nomic mapping, no structure or rules, some meaningful sequences exist |

This page goes through the fundamentals of everyday sound perception and focuses particularly on the aspects applicable to computational audio content analysis research. This knowledge can be used in various stages of system development to make informed design choices. In the final system evaluation, it provides insights into which sounds are meaningful in the given context and which confusions are more acceptable than others. Furthermore, knowledge about the human categorization of everyday sounds and about how sounds are organized in taxonomies can be used when designing and collecting audio datasets and creating reference annotations for audio content analysis research. For a comprehensive introduction to everyday sound perception, see [Lemaitre2018, Guastavino2018].

Perception of Auditory Scenes

A sound is produced when an object vibrates and causes the air pressure to oscillate. The vibration is usually triggered by some physical action applied to the object. In the case of multiple simultaneously active sound sources, the air pressure variations caused by these sources are summed up and form an additive mixture signal. The term auditory scene is used when referring to complex auditory environments where sounds are overlapping in time and frequency.
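The additive mixing described above can be sketched in a few lines of NumPy. The sampling rate, the two synthetic sources, and their parameters are arbitrary assumptions chosen for illustration. The sketch also ignores propagation effects such as reverberation, which real recordings include.

```python
import numpy as np

SAMPLE_RATE = 16000  # Hz, an arbitrary choice for this illustration
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE  # time stamps for one second

# Source 1: a steady 440 Hz tone (a harmonic, music-like sound).
tone = 0.5 * np.sin(2 * np.pi * 440.0 * t)

# Source 2: a noise burst between 0.4 s and 0.6 s (an impact-like event).
rng = np.random.default_rng(seed=0)
burst = np.zeros_like(t)
burst_region = (t >= 0.4) & (t < 0.6)
burst[burst_region] = 0.3 * rng.standard_normal(burst_region.sum())

# Pressure variations from simultaneously active sources add up sample by sample.
mixture = tone + burst
```

An analysis system observes only `mixture`. Recovering `tone` and `burst` from it is the problem addressed by auditory scene analysis and sound source separation.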

Auditory System

The air pressure variations reaching the ear are converted into nerve impulses, which are then analyzed in the auditory cortex of the brain. The eardrum first converts the sound into mechanical vibration, and the cochlea then transforms this mechanical energy into nerve impulses. The cochlea breaks the sound into approximately logarithmically spaced frequency bands, and each band produces its own neural response. In effect, the cochlea performs a spectral decomposition of the sound [Pickles2012].
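As a very coarse computational analogue of this spectral decomposition, the sketch below sums FFT power into logarithmically spaced, octave-wide bands. The octave spacing, the 62.5 Hz starting frequency, and the use of a single FFT over the whole signal are simplifying assumptions. Auditory models usually rely on overlapping filters (for example, gammatone filterbanks) applied to short frames.

```python
import numpy as np


def log_band_energies(signal, sample_rate, f_min=62.5, n_bands=7):
    """Sum spectral power into octave-wide bands starting at f_min (Hz)."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    # Octave band edges f_min, 2*f_min, 4*f_min, ... give logarithmic spacing.
    edges = f_min * 2.0 ** np.arange(n_bands + 1)
    energies = np.empty(n_bands)
    for i in range(n_bands):
        in_band = (freqs >= edges[i]) & (freqs < edges[i + 1])
        energies[i] = spectrum[in_band].sum()
    return edges, energies


sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
signal = np.sin(2 * np.pi * 1000.0 * t)  # a 1 kHz pure tone
edges, energies = log_band_energies(signal, sample_rate)
print(edges[np.argmax(energies)])  # lower edge of the most energetic band: 1000.0
```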

Psychoacoustics

Psychoacoustics is a research field combining acoustics and psychology. It has established connections between the acoustic characteristics of the input signal and the subjective properties of the sound as perceived by the auditory system. The most commonly studied properties are pitch, loudness, and timbre. Pitch is related to the fundamental frequency of the sound, whereas loudness is the perceived strength of the sound and is related mainly to its intensity. Timbre is a multidimensional property related to the spectro-temporal content of the sound. It allows sounds to be distinguished from each other even when their pitch and loudness are the same. The main dimensions identified for timbre are the balance of energy in the spectrum (sharpness and brightness), the perception of amplitude modulation in the signal (fluctuation strength and roughness), and the characteristics of the beginning of the sound (onset). Timbre is an important property when identifying sound sources.
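Signal-level correlates are commonly computed for these perceptual properties. The sketch below uses three of them:

- an autocorrelation-based fundamental frequency estimate as a pitch correlate,
- the RMS level in decibels as a rough loudness correlate,
- the spectral centroid as a brightness correlate.

It compares two synthetic tones that have the same harmonics and the same level but a different balance of energy. The estimators, the 0 dB reference (amplitude 1.0), and the 50 to 1000 Hz search range are assumptions made for illustration. None of these measures is a full perceptual model; perceived loudness, for instance, also depends on frequency content.

```python
import numpy as np

SAMPLE_RATE = 16000


def harmonic_tone(f0, amplitudes, n_samples=1600):
    """Sum of harmonics k*f0 (k = 1, 2, ...) with the given amplitudes.

    1600 samples at 16 kHz is 0.1 s; with f0 = 200 Hz this is a whole number of
    periods, so the harmonics fall exactly on FFT bins.
    """
    t = np.arange(n_samples) / SAMPLE_RATE
    return sum(a * np.sin(2 * np.pi * (k + 1) * f0 * t) for k, a in enumerate(amplitudes))


def estimate_f0(x, f_min=50.0, f_max=1000.0):
    """Pitch correlate: lag of the strongest autocorrelation peak within [1/f_max, 1/f_min]."""
    x = x - np.mean(x)
    autocorr = np.correlate(x, x, mode="full")[len(x) - 1:]  # non-negative lags only
    min_lag, max_lag = int(SAMPLE_RATE / f_max), int(SAMPLE_RATE / f_min)
    best_lag = min_lag + int(np.argmax(autocorr[min_lag:max_lag]))
    return SAMPLE_RATE / best_lag


def level_db(x):
    """Loudness correlate: RMS level in dB relative to an amplitude of 1.0."""
    return 20 * np.log10(np.sqrt(np.mean(x ** 2)))


def spectral_centroid(x):
    """Brightness correlate: magnitude-weighted mean frequency in Hz."""
    magnitude = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(len(x), d=1.0 / SAMPLE_RATE)
    return np.sum(freqs * magnitude) / np.sum(magnitude)


dull = harmonic_tone(200.0, [1.0, 0.5, 0.25])    # energy concentrated in low harmonics
bright = harmonic_tone(200.0, [0.25, 0.5, 1.0])  # energy concentrated in high harmonics
for name, x in [("dull", dull), ("bright", bright)]:
    print(name, estimate_f0(x), level_db(x), spectral_centroid(x))
```

Both tones share the same pitch and level correlates and differ only in the centroid. This mirrors the textbook description of timbre as what remains when pitch and loudness are equal.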

Auditory Scene Analysis

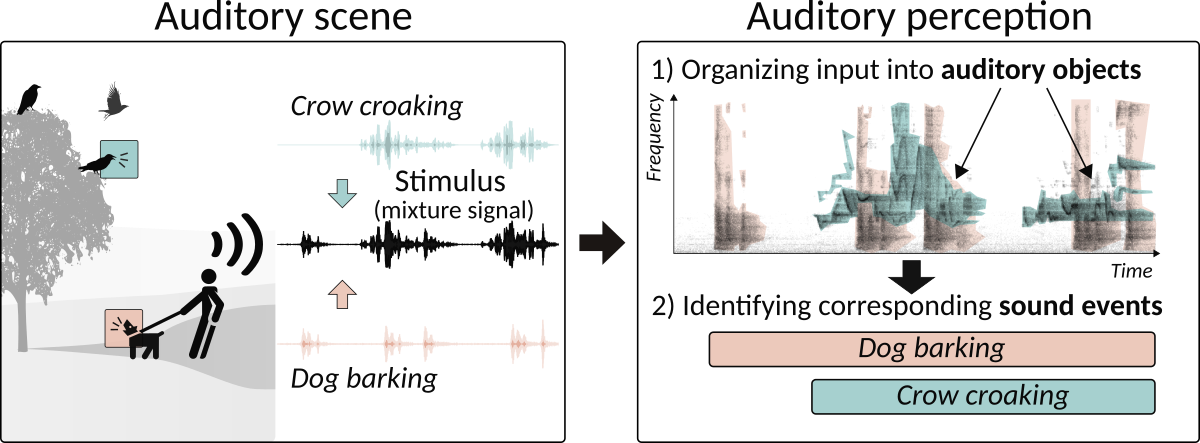

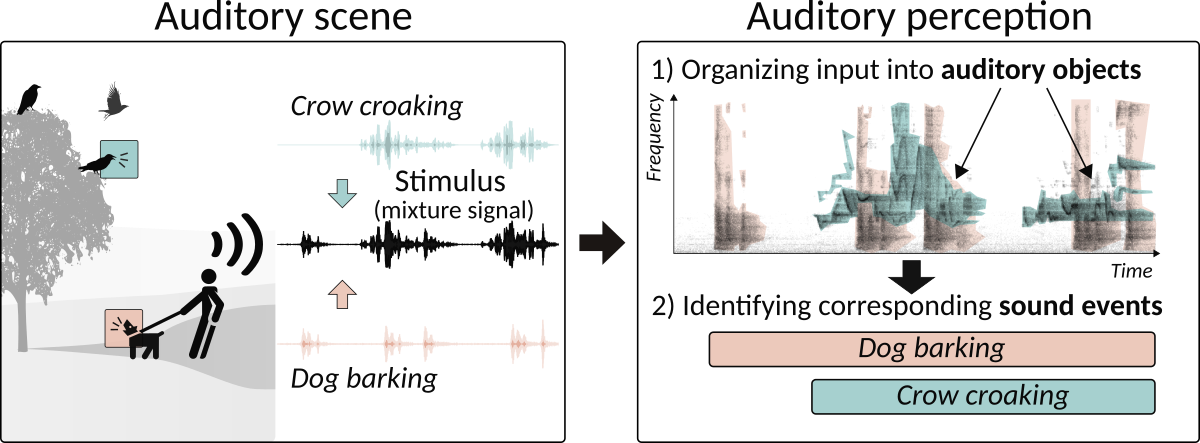

Auditory perception organizes acoustic stimuli from the auditory scene into auditory objects and identifies the corresponding sound events for them. The auditory object is a fundamental and stable unit of perception, acquired through grouping and segregation of spectro-temporal regularities in the auditory scene [Bregman1990, Bizley2013]. An overview of this process is illustrated in Figure 2.1 with two overlapping sound sources.

Organizing Acoustic Stimuli

A widely accepted theory of auditory perceptual organization, auditory scene analysis (ASA) [Bregman1990], suggests that auditory perception organizes acoustic stimuli according to rules originating from Gestalt psychology. Auditory objects are perceived as sensory entities formed through primitive grouping principles based on similarity, continuity, proximity, common fate, closure, and disjoint allocation.

- Similarity: components that share perceptual properties (e.g., pitch, loudness, or timbre) are grouped together.
- Continuity: a sound is assumed to vary only smoothly in its perceptual properties over time. Abrupt changes in these properties are used as grouping cues, indicating that a new sound may have started.
- Proximity: components that are close to each other in frequency or time are grouped together.
- Common fate: correlated changes in the perceptual properties are used for grouping, for example, for components that share a common onset or common frequency modulation.
- Closure: based on prior knowledge of the sound, a sound is assumed to continue, even if another sound temporarily masks it, until there is perceptual evidence that it has stopped.
- Disjoint allocation: a component is associated with only one auditory object at a time.

Continuity and common fate both relate to temporal coherence across or within the perceptual properties. This primitive grouping works in a data-driven, bottom-up manner. In addition, ASA proposes a schema-based grouping that works in a top-down manner. It uses learned patterns and is commonly involved with speech and music. Both grouping types associate sequential and overlapping sound components into an auditory object, and when these auditory objects are linked over time they form an auditory stream. This allows the listener to follow a particular sound source in a complex auditory scene, an ability traditionally referred to in the scientific literature as the cocktail party effect.
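A toy illustration of primitive, bottom-up grouping follows. Spectral components, given here directly as hypothetical frequency and onset pairs rather than extracted from audio, are grouped by the common-fate cue of a shared onset. Each component ends up in exactly one group, mirroring disjoint allocation. The 20 ms onset tolerance and the greedy, onset-ordered grouping are assumptions made for this sketch; ASA is a perceptual theory, not this algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    frequency_hz: float
    onset_s: float


def group_by_common_onset(components, tolerance_s=0.02):
    """Greedily group components whose onsets lie within tolerance_s of the group's first onset.

    Every component is placed in exactly one group (disjoint allocation).
    """
    groups = []
    for comp in sorted(components, key=lambda c: c.onset_s):
        if groups and comp.onset_s - groups[-1][0].onset_s <= tolerance_s:
            groups[-1].append(comp)
        else:
            groups.append([comp])
    return groups


# Hypothetical scene: harmonics of 200 Hz starting at about 0.10 s,
# overlapping with harmonics of 310 Hz starting at about 0.35 s.
components = [
    Component(200.0, 0.100), Component(400.0, 0.105), Component(600.0, 0.110),
    Component(310.0, 0.350), Component(620.0, 0.352),
]
for i, group in enumerate(group_by_common_onset(components)):
    print(i, sorted(c.frequency_hz for c in group))
# 0 [200.0, 400.0, 600.0]
# 1 [310.0, 620.0]
```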

Figure 2.1 An example showing auditory perception in an auditory scene with two overlapping sounds.

Computational Methods

Computational methods inspired by auditory scene analysis and human auditory perception are studied in the research field of Computational Auditory Scene Analysis (CASA) [Cooke2001, Wang2006, Szabo2016]. These methods use perceptually motivated approaches to derive the properties of individual sound sources from a mixture signal. Most CASA studies deal with speech and music and usually make strong assumptions about the characteristics of the input signal. In addition, the target sound source is often assumed to be in the foreground of the auditory scene, and the task is to separate it from the background. Computational methods targeting everyday sounds cannot make such assumptions. Everyday sounds have diverse characteristics and can be either in the foreground or in the background of the auditory scene. Like auditory perceptual organization, sound source separation aims to decompose a mixture signal into individual sound sources, which can then be recognized with sound event detection methods. Sound source separation will be discussed on the page about Computational Audio Content Analysis; the technique was applied in [Heittola2011, Heittola2013a].
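One widely used family of separation techniques factorizes a non-negative magnitude spectrogram V into spectral templates W and time-varying activations H, known as non-negative matrix factorization (NMF). The sketch below applies the standard multiplicative update rules for the squared Euclidean cost to a toy spectrogram. It illustrates the general technique only. The rank, the iteration count, and the toy data are assumptions, and this is not necessarily the configuration or method used in the cited works.

```python
import numpy as np


def nmf(V, rank, n_iter=200, eps=1e-9, seed=0):
    """Factorize non-negative V (freq x time) as W @ H using multiplicative updates.

    The updates do not increase the squared Euclidean distance ||V - W H||^2.
    """
    rng = np.random.default_rng(seed)
    n_freq, n_time = V.shape
    W = rng.random((n_freq, rank)) + eps  # one spectral template per column
    H = rng.random((rank, n_time)) + eps  # one activation curve per row
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy "spectrogram": two sources with fixed spectra and different activity over time.
spectrum_a = np.array([1.0, 0.8, 0.1, 0.0])
spectrum_b = np.array([0.0, 0.1, 0.9, 1.0])
activity_a = np.array([1.0, 1.0, 0.0, 0.0, 1.0, 0.5])
activity_b = np.array([0.0, 1.0, 1.0, 1.0, 0.0, 0.5])
V = np.outer(spectrum_a, activity_a) + np.outer(spectrum_b, activity_b)

W, H = nmf(V, rank=2)
# Each separated component is the part of the mixture explained by one template.
separated = [np.outer(W[:, k], H[k]) for k in range(2)]
print("relative reconstruction error:", np.linalg.norm(V - W @ H) / np.linalg.norm(V))
```

In a detection pipeline, each separated component would then be passed to the sound event classifier instead of the full mixture.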

Perception of Everyday Sounds

Everyday sound perception, or everyday listening, does not focus directly on the properties of the sound itself. Instead, it focuses on the event producing the sound and on the sound source, in order to understand what is happening in the surrounding environment. At the same time, everyday sounds are not listened to actively all the time. They are listened to passively until a sound of interest occurs, after which auditory perception switches into an active listening mode to identify the sound event. Everyday listening then segregates the perceived auditory scene into distinct sound sources and identifies the sound events corresponding to these sources [Gaver1993b]. For example, suppose a car drives along the road and passes the listener. The car first catches the listener’s attention, and perception enters the active listening mode. Perception then identifies the sound source (car) and the physical action causing the sound (driving), and associates the sound with the sound event “car passing by”.
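A crude computational counterpart of this passive-to-active switch is an energy-based trigger. The signal is monitored cheaply, and only frames that rise clearly above the background level would be passed on to a more demanding identification stage. The sketch below is a hypothetical illustration, not a model of auditory attention. The 20 ms frames, the median-based background estimate, and the 6 dB threshold are arbitrary assumptions, and the identification stage itself is omitted.

```python
import numpy as np


def active_frames(signal, sample_rate, frame_s=0.02, threshold_db=6.0):
    """Flag frames whose energy exceeds the median (background) level by threshold_db."""
    frame_len = int(round(frame_s * sample_rate))
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    energy_db = 10 * np.log10(np.mean(frames ** 2, axis=1) + 1e-12)
    background_db = np.median(energy_db)  # crude estimate of the background level
    return energy_db > background_db + threshold_db


sample_rate = 16000
rng = np.random.default_rng(seed=1)
signal = 0.01 * rng.standard_normal(2 * sample_rate)  # two seconds of quiet background
t = np.arange(sample_rate // 2) / sample_rate
# A louder event between 1.0 s and 1.5 s.
signal[sample_rate : sample_rate + len(t)] += 0.5 * np.sin(2 * np.pi * 300.0 * t)

flags = active_frames(signal, sample_rate)
onset_frame = int(np.argmax(flags))  # first frame that would trigger "active listening"
print("event detected from about", onset_frame * 0.02, "s")
```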

For everyday listening, identification of the sound event is essential to gain an understanding of the environment, while for the perception of speech or music the sound source is usually known already or identification has lower importance [Gaver1993b].

Sound identification can be seen as the cognitive act of sound categorization. Through categorization, humans make sense of the environment by organizing it into meaningful categories, essentially grouping similar entities together. This way, humans can handle the variability and complexity of everyday sounds and reduce the perceived complexity of the environment [Guastavino2018]. Categorization also allows humans to hypothesize which event produced a sound even if they have not heard that sound before.

Properties of Everyday Sounds

Humans can perceive properties of the sound-producing object as well as properties of the sound-producing action. In psychomechanics, a variety of experimental studies have focused on the perception of isolated physical properties of sound sources such as material [McAdams2000], shape [Kunkler2000], and size [Grassi2013], and parameters of sound-producing actions [Lemaitre2012].

Perceptual Cues

A deeper understanding of the properties necessary for sound source identification can be gained by deliberately degrading signals in various ways and studying how well test subjects identify the degraded sounds. Experiments in [Gygi2004] showed a reasonable ability to identify everyday sounds in two conditions. In the first, the signals were low-pass, high-pass, or band-pass filtered with varying cutoff or center frequencies. In the second, fine-grained spectral information was removed from the signals. On average, everyday sounds were found to contain more information in higher frequencies than speech. The most important frequency region for identification was found to be 1.2-2.4 kHz, which is comparable to similar studies on speech signals. However, identification performance varied considerably between sound classes. When fine-grained spectral information was removed, identification relied mainly on temporal information. Analysis showed that test subjects used envelope shape, periodicity, and the consistency of temporal changes across frequency as identification cues. Again, performance varied widely across sound classes: half of the classes were identified correctly, but some were not identified at all. It is worth noting that under similar conditions humans achieve near-perfect speech recognition performance [Shannon1995]. These experiments highlight the diversity of everyday sounds. They also show that robust identification of a wide range of everyday sounds essentially requires full frequency information.
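The degradations described above can be mimicked in code. The sketch below applies an idealized, FFT-domain band-pass filter that keeps only the 1.2-2.4 kHz region. It then extracts a frame-wise RMS envelope, the kind of temporal cue listeners relied on when fine spectral detail was removed. The brick-wall filter, the synthetic impact-like test signal, and the 10 ms envelope frames are simplifications chosen for this sketch; [Gygi2004] does not prescribe this processing.

```python
import numpy as np


def band_pass_fft(signal, sample_rate, low_hz, high_hz):
    """Idealized (brick-wall) band-pass: zero all FFT bins outside [low_hz, high_hz].

    FFT-domain filtering is circular and rings in time; acceptable for an illustration.
    """
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))


def rms_envelope(signal, frame_len):
    """Frame-wise RMS amplitude, a coarse temporal envelope."""
    n_frames = len(signal) // frame_len
    frames = signal[: n_frames * frame_len].reshape(n_frames, frame_len)
    return np.sqrt(np.mean(frames ** 2, axis=1))


sample_rate = 16000
rng = np.random.default_rng(seed=2)
noise = rng.standard_normal(sample_rate)                 # one second of broadband noise
decay = np.exp(-np.arange(sample_rate) / (0.1 * sample_rate))
impact_like = noise * decay                              # sharp onset, exponential decay

filtered = band_pass_fft(impact_like, sample_rate, 1200.0, 2400.0)
envelope = rms_envelope(filtered, frame_len=160)         # 10 ms frames
```

Even after most of the spectrum has been removed, the envelope of `filtered` still shows the sharp onset and decay. Temporal cues of this kind are what listeners could fall back on.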

Context

Events in everyday environments do not occur in isolation. Instead, they usually happen in relation to other events and in particular environments [Oliva2007]. This contextual information enables humans to accurately identify sounds that are acoustically similar. For example, under some conditions the noise of a car engine and the purring of a cat can be ambiguous, and contextual information helps to disambiguate between them [Ballas1991, Niessen2008].

The observations about the significance of full frequency information should be taken into account when designing an audio content analysis system for a diverse set of sound classes: such a system should favor full-band audio. Contextual information can be used in automatic sound event detection systems to narrow down the selection of possible sound events and enable more robust detection, similarly to human perception. These aspects will be discussed in the section on contextual information; contextual information was used in [Heittola2013a].
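Narrowing down the event classes with context can be expressed with Bayes' rule. The acoustic likelihoods p(x | event) are weighted by scene-dependent event priors P(event | scene). This assumes that the acoustic evidence depends on the scene only through the event. In the toy sketch below, all class names, likelihood values, and priors are invented for illustration. They show how the same ambiguous acoustic evidence (car engine vs. cat purring) can be resolved differently in two scenes. This is one simple way of using context, not the specific system of [Heittola2013a].

```python
# Hypothetical acoustic likelihoods p(x | event) for one ambiguous, low, steady hum.
likelihoods = {"car engine": 0.40, "cat purring": 0.35, "speech": 0.05}

# Hypothetical scene-dependent priors P(event | scene).
# A prior of 0.0 would remove a class from consideration in that scene entirely.
priors = {
    "street": {"car engine": 0.60, "cat purring": 0.05, "speech": 0.35},
    "home": {"car engine": 0.05, "cat purring": 0.45, "speech": 0.50},
}


def posterior(likelihoods, scene_priors):
    """P(event | x, scene), proportional to p(x | event) * P(event | scene), normalized."""
    unnormalized = {e: likelihoods[e] * scene_priors.get(e, 0.0) for e in likelihoods}
    total = sum(unnormalized.values())
    return {e: v / total for e, v in unnormalized.items()}


for scene in priors:
    post = posterior(likelihoods, priors[scene])
    print(scene, max(post, key=post.get))
# street car engine
# home cat purring
```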

Categorization of Everyday Sounds

Isolated Environmental Sounds

Humans categorize everyday sound events mostly based on the sound source (e.g., door slam) or the action that generated the sound (e.g., squeaking). Only if the sound is unknown do they fall back to describing it based on its acoustic characteristics [Vanderveer1979, Ballas1993]. Two further factors have been found to affect categorization [Gygi2007b]:

- the location (e.g., shop) or context (e.g., cooking) in which the sound was heard,
- the person’s emotional response to the sound (e.g., pleasantness).

Similarity plays an important role in categorization, and several types of similarity have been found to be used in sound categorization [Lemaitre2010]:

- similarity in acoustic properties (e.g., timbre, duration),
- similarity of the sound-producing events,
- similarity in the meaning attributed to the sound events.

Categorization

Various categorization principles operate together, and they flexibly form different types of categories related to the sound source, the action causing the sound, or the context in which the sound is heard. Early theories of the human categorization process proposed that categorization is based on the similarity between internal category representations and the new entity to be categorized. Depending on the situation, a category is represented either by a prototypical example that best represents the whole category [Rosch1978], or by a set of examples of the category previously stored in memory [Smith1981]. A new entity is assigned the category of the most similar example. This results in a flexible categorization process with smooth boundaries between categories. These theories work in a bottom-up manner, proceeding from low-level acoustic properties, via similarity, towards higher cognitive levels. Later theories extended this with a mixture of bottom-up and top-down processing. In this view, data-driven bottom-up processing interacts with a hypothesis-driven top-down process that relies on expectations, prior knowledge, and contextual factors [Humphreys1997]. This type of processing is well suited to everyday sound perception. A person’s prior knowledge about the categories and situational factors are used during identification. If there is no established prior knowledge about the categories, identification is instead done in a data-driven manner by processing acoustic properties.
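The two early theories have direct computational counterparts:

- A prototype model compares a new sound with one representative per category, here the mean feature vector.
- An exemplar model compares the new sound with every stored example and takes the category of the most similar one, which is a nearest-neighbour rule.

The two-dimensional feature vectors and category names below are made up for illustration, and Euclidean distance is assumed as the inverse of similarity. One stored door slam is deliberately atypical, so the two models disagree on the new sound. This shows how the choice of category representation shapes the category boundaries.

```python
import numpy as np

# Hypothetical stored examples: 2-D acoustic feature vectors per category.
examples = {
    "door slam": np.array([[0.9, 0.1], [0.8, 0.2], [0.3, 0.3]]),  # last one is atypical
    "squeaking": np.array([[0.1, 0.9], [0.2, 0.8], [0.2, 0.7]]),
}


def classify_prototype(x):
    """Prototype model: category whose mean feature vector is nearest."""
    prototypes = {c: e.mean(axis=0) for c, e in examples.items()}
    return min(prototypes, key=lambda c: np.linalg.norm(x - prototypes[c]))


def classify_exemplar(x):
    """Exemplar model: category of the single most similar stored example."""
    return min(examples, key=lambda c: np.min(np.linalg.norm(examples[c] - x, axis=1)))


x_new = np.array([0.3, 0.45])
print(classify_prototype(x_new), "|", classify_exemplar(x_new))  # squeaking | door slam
```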

Computational Sound Classification

Knowledge about categorization principles can be utilized when designing computational sound classification systems. The acoustic model in these systems plays the role of the internal category representations in human categorization, and classification is done by matching the unknown sound against this representation. Most computational sound classification systems can be seen to work in a bottom-up manner: acoustic features extracted from an unknown sound are used to infer the class label. However, some systems also use top-down elements, for example, contextual information to guide the classification process. Machine learning will be discussed on the Computational Audio Content Analysis page. The use of contextual information will be discussed in the section on contextual information and was applied in [Heittola2013a].

Organization of Everyday Sounds

Everyday sounds can be organized into taxonomies to assist the categorization process. In these taxonomies, the sounds are organized in a hierarchical structure according to sound sources [Guastavino2007], actions producing the sound [Houix2012], contexts where the sound can be heard [Brown2011], or combinations of these [Gaver1993b, Salamon2014]. The taxonomy proposed in [Gaver1993b] is based on the physical description of the sound production: at the highest level sounds are organized based on materials (vibrating solids, gasses, and liquids), under which sounds are organized based on actions producing the sound (e.g. impacts, explosion, or splash), and at the lower level sounds are organized based on interactions producing the sound (e.g. bouncing and waves). The taxonomy proposed in [Salamon2014] for urban sounds starts with four categories (human, nature, mechanical, and music), and leaf nodes under them are related to either sound sources (e.g. laughter and wind) or sound-producing events (e.g. construction and engine passing).

Ontologies

Everyday sounds can also be organized into ontologies. Unlike in taxonomies, entities in an ontology can have multiple relationships within the structure. The ontology proposed in [Gemmeke2017], the Audio Set Ontology, contains 632 sound event classes in a hierarchy with six categories at the top: human sounds, animal sounds, music, sounds of things, source-ambiguous sounds, and general environment sounds. The authors have also published a large dataset (4971 hours of audio) organized using this ontology.
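Because an ontology allows multiple relationships, a class may have more than one parent, so the hierarchy is a directed acyclic graph rather than a tree. The sketch below stores a small, hypothetical fragment as child-to-parents links and collects all ancestors of a class by depth-first traversal. The class names and links are illustrative only and are not copied from the Audio Set Ontology.

```python
# Hypothetical ontology fragment: each class maps to a list of parent classes.
parents = {
    "human sounds": [],
    "sounds of things": [],
    "speech": ["human sounds"],
    "vehicle": ["sounds of things"],
    "alarm": ["sounds of things"],
    "car horn": ["vehicle", "alarm"],  # two parents: not possible in a strict tree
}


def ancestors(label, parents):
    """All classes reachable from label by following parent links (depth-first)."""
    seen = set()
    stack = list(parents.get(label, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(parents.get(node, []))
    return seen


print(sorted(ancestors("car horn", parents)))  # ['alarm', 'sounds of things', 'vehicle']
```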

Labeling

The spontaneous creation of a textual label for a sound event is two-fold: if the listener recognizes the sound event, it is described by the event producing the sound and properties of this event; if the listener cannot identify the sound event, the description is based on acoustic properties of the signal [Dubois2000]. In perceptual experiments, the process of selecting a label is often simplified by asking the listener to indicate the object (a noun) and action (a verb) causing the sound [Ballas1993, Lemaitre2010].

Computational Sound Classification

Automatic sound classification systems can use the relationships in hierarchical structures such as taxonomies or ontologies in two ways. First, confusions can be allowed under the parent node during the learning process. Second, the parent-child relationships can be used in the classification stage by outputting a common parent node when encountering ambiguous sounds. Taxonomies or ontologies can also make the set of sound classes in an audio content analysis system more consistent, by requiring all classes to come from the same level of the hierarchy. When sound events are annotated for audio content analysis datasets, labels are often chosen following the object-plus-action scheme, as will be discussed in detail separately; this scheme was used in [Mesaros2016, Mesaros2018].
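The second use, outputting a common parent node for ambiguous sounds, can be sketched with a tree taxonomy stored as child-to-parent links. When the top two class scores are too close, the system reports their lowest common ancestor instead of committing to either class. The taxonomy fragment, the scores, and the 0.1 margin are all hypothetical.

```python
# Hypothetical tree taxonomy: child -> parent (None marks the root).
parent = {
    "sound": None,
    "mechanical": "sound",
    "nature": "sound",
    "car passing by": "mechanical",
    "engine idling": "mechanical",
    "wind": "nature",
}


def path_to_root(label):
    """Labels from `label` up to the root, inclusive."""
    path = []
    while label is not None:
        path.append(label)
        label = parent[label]
    return path


def lowest_common_ancestor(a, b):
    """First node on b's path to the root that is also on a's path."""
    ancestors_of_a = set(path_to_root(a))
    for node in path_to_root(b):
        if node in ancestors_of_a:
            return node
    raise ValueError("labels do not share a root")


def decide(scores, margin=0.1):
    """Best class, or the common parent of the top two classes if their scores are too close."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    best, second = ranked[0], ranked[1]
    if scores[best] - scores[second] < margin:
        return lowest_common_ancestor(best, second)
    return best


print(decide({"car passing by": 0.46, "engine idling": 0.42, "wind": 0.12}))  # mechanical
print(decide({"car passing by": 0.70, "engine idling": 0.20, "wind": 0.10}))  # car passing by
```

Backing off to a parent node trades specificity for reliability. The output stays correct at a coarser level instead of risking a confusion between sibling classes.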

References

| [Ballas1991] | Ballas, James A, and Timothy Mullins. 1991. “Effects of Context on the Identification of Everyday Sounds.” Human Performance 4 (3): 199–219. |

| [Ballas1993] | Ballas, James A. 1993. “Common Factors in the Identification of an Assortment of Brief Everyday Sounds.” Journal of Experimental Psychology: Human Perception and Performance 19 (2): 250. |

| [Bizley2013] | Bizley, Jennifer K, and Yale E Cohen. 2013. “The What, Where and How of Auditory-Object Perception.” Nature Reviews Neuroscience 14 (10): 693–707. |

| [Bregman1990] | Bregman, Albert S. 1990. Auditory Scene Analysis. Cambridge, MA, USA: The MIT Press. |

| [Brown2011] | Brown, AL, Jian Kang, and Truls Gjestland. 2011. “Towards Standardization in Soundscape Preference Assessment.” Applied Acoustics 72 (6): 387–92. |

| [Cooke2001] | Cooke, M. P., and D. P. W. Ellis. 2001. “The Auditory Organization of Speech and Other Sources in Listeners and Computational Models.” Speech Communication 35 (3-4): 141–77. |

| [Dubois2000] | Dubois, Danièle. 2000. “Categories as Acts of Meaning: The Case of Categories in Olfaction and Audition.” Cognitive Science Quarterly 1 (1): 35–68. |

| [Gaver1986] | Gaver, William W. 1986. “Auditory Icons: Using Sound in Computer Interfaces.” Human-Computer Interaction 2 (2): 167–77. |

| [Gaver1993a] | Gaver, William W. 1993. “How Do We Hear in the World? Explorations in Ecological Acoustics.” Ecological Psychology 5 (4): 285–313. |

| [Gaver1993b] | Gaver, William W. 1993. “What in the World Do We Hear? An Ecological Approach to Auditory Event Perception.” Ecological Psychology 5 (1): 1–29. |

| [Gemmeke2017] | Gemmeke, Jort F, Daniel PW Ellis, Dylan Freedman, Aren Jansen, Wade Lawrence, R Channing Moore, Manoj Plakal, and Marvin Ritter. 2017. “Audio Set: An Ontology and Human-Labeled Dataset for Audio Events.” In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 776–80. |

| [Grassi2013] | Grassi, Massimo, Massimiliano Pastore, and Guillaume Lemaitre. 2013. “Looking at the World with Your Ears: How Do We Get the Size of an Object from Its Sound?” Acta Psychologica 143 (1): 96–104. |

| [Guastavino2007] | Guastavino, Catherine. 2007. “Categorization of Environmental Sounds.” Canadian Journal of Experimental Psychology 61 (1): 54–63. |

| [Guastavino2018] | Guastavino, Catherine. 2018. “Everyday Sound Categorization.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 183–213. Cham, Switzerland: Springer Verlag. |

| [Gygi2001] | Gygi, Brian. 2001. “Factors in the Identification of Environmental Sounds.” PhD thesis, Indiana University. |

| [Gygi2004] | Gygi, Brian, Gary R Kidd, and Charles S Watson. 2004. “Spectral-Temporal Factors in the Identification of Environmental Sounds.” The Journal of the Acoustical Society of America 115 (3): 1252–65. |

| [Gygi2007a] | Gygi, Brian, and Valeriy Shafiro. 2007. “Environmental Sound Research as It Stands Today.” Proceedings of Meetings on Acoustics 1 (1). |

| [Gygi2007b] | Gygi, Brian, Gary R Kidd, and Charles S Watson. 2007. “Similarity and Categorization of Environmental Sounds.” Perception & Psychophysics 69 (6): 839–55. |

| [Heittola2010] | Heittola, Toni, Annamaria Mesaros, Antti Eronen, and Tuomas Virtanen. 2010. “Audio Context Recognition Using Audio Event Histograms.” In Proceedings of the 2010 European Signal Processing Conference, 1272–76. Aalborg, Denmark. |

| [Heittola2011] | Heittola, Toni, Annamaria Mesaros, Tuomas Virtanen, and Antti Eronen. 2011. “Sound Event Detection in Multisource Environments Using Source Separation.” In Workshop on Machine Listening in Multisource Environments, 36–40. Florence, Italy. |

| [Heittola2013a] | Heittola, Toni, Annamaria Mesaros, Antti Eronen, and Tuomas Virtanen. 2013. “Context-Dependent Sound Event Detection.” EURASIP Journal on Audio, Speech, and Music Processing 2013 (1), 13 pages. |

| [Heittola2013b] | Heittola, Toni, Annamaria Mesaros, Tuomas Virtanen, and Moncef Gabbouj. 2013. “Supervised Model Training for Overlapping Sound Events Based on Unsupervised Source Separation.” In 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 8677–81. Vancouver, Canada. |

| [Houix2012] | Houix, Olivier, Guillaume Lemaitre, Nicolas Misdariis, Patrick Susini, and Isabel Urdapilleta. 2012. “A Lexical Analysis of Environmental Sound Categories.” Journal of Experimental Psychology: Applied 18 (1): 52. |

| [Humphreys1997] | Humphreys, Glyn W., M. Jane Riddoch, and Cathy J. Price. 1997. “Top-down Processes in Object Identification: Evidence from Experimental Psychology, Neuropsychology and Functional Anatomy.” Philosophical Transactions: Biological Sciences 352 (1358): 1275–82. |

| [Kunkler2000] | Kunkler-Peck, Andrew J, and MT Turvey. 2000. “Hearing Shape.” Journal of Experimental Psychology: Human Perception and Performance 26 (1): 279. |

| [Lemaitre2010] | Lemaitre, Guillaume, Olivier Houix, Nicolas Misdariis, and Patrick Susini. 2010. “Listener Expertise and Sound Identification Influence the Categorization of Environmental Sounds.” Journal of Experimental Psychology: Applied 16 (1): 16. |

| [Lemaitre2012] | Lemaitre, Guillaume, and Laurie M Heller. 2012. “Auditory Perception of Material Is Fragile While Action Is Strikingly Robust.” The Journal of the Acoustical Society of America 131 (2): 1337–48. |

| [Lemaitre2018] | Lemaitre, Guillaume, Nicolas Grimault, and Clara Suied. 2018. “Acoustics and Psychoacoustics of Sound Scenes and Events.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 41–67. Cham, Switzerland: Springer Verlag. |

| [McAdams2000] | McAdams, Stephen. 2000. “The Psychomechanics of Real and Simulated Sound Sources.” The Journal of the Acoustical Society of America 107 (5): 2792. |

| [Mesaros2010] | Mesaros, Annamaria, Toni Heittola, Antti Eronen, and Tuomas Virtanen. 2010. “Acoustic Event Detection in Real Life Recordings.” In Proceedings of the 2010 European Signal Processing Conference, 1267–71. Aalborg, Denmark. |

| [Mesaros2016] | Mesaros, Annamaria, Toni Heittola, and Tuomas Virtanen. 2016. “TUT Database for Acoustic Scene Classification and Sound Event Detection.” In 24th European Signal Processing Conference (EUSIPCO 2016), 1128–32. |

| [Mesaros2018] | Mesaros, Annamaria, Toni Heittola, Emmanouil Benetos, Peter Foster, Mathieu Lagrange, Tuomas Virtanen, and Mark D. Plumbley. 2018. “Detection and Classification of Acoustic Scenes and Events: Outcome of the DCASE 2016 Challenge.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 26 (2): 379–93. |

| [Niessen2008] | Niessen, M. E., L. van Maanen, and T. C. Andringa. 2008. “Disambiguating Sounds Through Context.” In 2008 IEEE International Conference on Semantic Computing, 88–95. |

| [Oliva2007] | Oliva, Aude, and Antonio Torralba. 2007. “The Role of Context in Object Recognition.” Trends in Cognitive Sciences 11 (12): 520–27. |

| [Pickles2012] | Pickles, J. O. 2012. An Introduction to the Physiology of Hearing. 4th ed. Bingley, UK: Emerald. |

| [Rosch1978] | Rosch, Eleanor. 1978. “Principles of Categorization.” In Cognition and Categorization, edited by Eleanor Rosch and B. B. Lloyd, 27–48. Hillsdale, NJ: Erlbaum. |

| [Salamon2014] | Salamon, Justin, Christopher Jacoby, and Juan Pablo Bello. 2014. “A Dataset and Taxonomy for Urban Sound Research.” In Proceedings of the 22nd ACM International Conference on Multimedia, 1041–44. |

| [Shannon1995] | Shannon, Robert V, Fan-Gang Zeng, Vivek Kamath, John Wygonski, and Michael Ekelid. 1995. “Speech Recognition with Primarily Temporal Cues.” Science 270 (5234): 303–4. |

| [Smith1981] | Smith, Edward E, and Douglas L Medin. 1981. Categories and Concepts. Vol. 9. Cambridge, MA, USA: Harvard University Press. |

| [Szabo2016] | Szabó, Beáta T., Susan L. Denham, and István Winkler. 2016. “Computational Models of Auditory Scene Analysis: A Review.” Frontiers in Neuroscience 10: 524. |

| [Vanderveer1979] | Vanderveer, Nancy J. 1979. “Ecological Acoustics: Human Perception of Environmental Sounds.” PhD thesis, Cornell University. |

| [Wang2006] | Wang, D., and G. J. Brown. 2006. Computational Auditory Scene Analysis: Principles, Algorithms, and Applications. New York, NY, USA: Wiley-IEEE Press. |

| [Warren1976] | Warren, Richard M. 1976. “Auditory Perception and Speech Evolution.” Annals of the New York Academy of Sciences 280 (1): 708–17. |