This page features a slightly revised version of the Introduction chapter from my PhD thesis, titled Computational Audio Content Analysis in Everyday Environments. The chapter provides an overview of the research motivation and outlines the key challenges in analyzing audio content from real-world settings.

Toni Heittola. Computational Audio Content Analysis in Everyday Environments. PhD thesis, Tampere University, June 2021.

Computational Audio Content Analysis in Everyday Environments

Abstract

Our everyday environments are full of sounds that play a vital role in informing us and helping us understand what is happening around us. Humans have formed strong associations between physical events in their environment and the sounds that these events produce. Such associations are described using textual labels, called sound events, which allow us to understand, recognize, and interpret the concepts behind sounds. Examples of sound events are dog barking, a person shouting, or a car passing by. This thesis deals with computational methods for audio content analysis of everyday environments. Along with the increased use of digital audio in our everyday lives, automatic audio content analysis has become an increasingly sought-after capability. Content analysis enables an in-depth understanding of what was happening in the environment when the audio was captured, which in turn enables applications that react accurately to events in the environment. The methods proposed in this thesis focus on sound event detection, the task of recognizing and temporally locating sound events within an audio signal, and address the development of methods that handle a large set of sound classes, the detection of multiple sounds, and the evaluation of such methods. The work focuses on developing methods that allow the detection of multiple overlapping sound events and robust acoustic model training based on mixture audio containing overlapping sounds. Starting with an approach based on hidden Markov models (HMMs) for prominent sound event detection, the work advanced by extending it to polyphonic detection using multiple Viterbi iterations or sound source separation. These polyphonic sound event detection systems were based on a collection of generative classifiers that produce multiple labels for the same time instance, which doubled, or in some cases tripled, the detection performance.
As an alternative approach, polyphonic detection was implemented using class-wise activity detectors, in which the activity of each event class was detected independently and the class-wise event sequences were merged to produce the polyphonic system output. Polyphonic detection substantially increased the applicability of the methods in everyday environments. For the evaluation of methods, the work proposed a new metric for polyphonic sound event detection that takes polyphony into account. The new metric, a segment-based F-score, provides rigorous definitions of correct and erroneous detections, is more suitable than previously used metrics for comparing polyphonic annotations with polyphonic system output, and has since become one of the standard metrics in the research field. Part of this thesis studies sound events as constituent parts of the acoustic scene, based on the contextual information provided by their co-occurrence. This information was used for both sound event detection and acoustic scene classification. In sound event detection, context information was used to first identify the acoustic scene and then narrow down the set of possible sound event classes, which allowed the use of context-dependent acoustic models and event priors. This approach provided a moderate yet consistent performance increase across all tested acoustic scene types and enabled the detection system to be easily expanded to new scenes. In acoustic scene classification, scenes were identified based on the distinctive, scene-specific sound events detected in them, with performance comparable to traditional approaches, while the fusion of the two approaches yielded a significant further increase in performance. The thesis also includes significant contributions to the development of tools for open research in the field, such as standardized evaluation protocols and the release of open datasets, benchmark systems, and open-source tools.
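To make the segment-based F-score concrete, the sketch below shows one way to compute it. It is a minimal illustration under stated assumptions, not the thesis's reference implementation: events are hypothetical (label, onset, offset) tuples in seconds, segments are one second long, a class counts as active in a segment if any of its events overlaps that segment, and true positives, false positives, and false negatives are pooled over all segments and classes (micro-averaging). For actual evaluations, an established toolbox such as sed_eval is the safer choice.

```python
import math
from typing import List, Set, Tuple

# Hypothetical event representation: (class label, onset in s, offset in s).
Event = Tuple[str, float, float]


def segment_activity(events: List[Event], n_segments: int,
                     segment_length: float) -> List[Set[str]]:
    """Return, for each fixed-length segment, the set of classes active in it."""
    active: List[Set[str]] = [set() for _ in range(n_segments)]
    for label, onset, offset in events:
        first = int(onset // segment_length)
        # Assumption: a class is active in a segment if the event overlaps it at all.
        last = math.ceil(offset / segment_length) - 1
        for k in range(max(first, 0), min(last, n_segments - 1) + 1):
            active[k].add(label)
    return active


def segment_based_f_score(reference: List[Event], estimated: List[Event],
                          duration: float, segment_length: float = 1.0) -> float:
    """Micro-averaged F-score over all (segment, class) decisions."""
    n_segments = math.ceil(duration / segment_length)
    ref = segment_activity(reference, n_segments, segment_length)
    est = segment_activity(estimated, n_segments, segment_length)
    tp = fp = fn = 0
    for r, e in zip(ref, est):
        tp += len(r & e)  # class active in both annotation and system output
        fp += len(e - r)  # class active in system output only
        fn += len(r - e)  # class active in annotation only
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


reference = [("dog", 0.0, 2.0), ("car", 1.0, 3.0)]
estimated = [("dog", 0.0, 1.0), ("car", 1.0, 4.0)]
print(segment_based_f_score(reference, estimated, duration=4.0))  # 0.75
```

Because each segment holds a set of active classes, overlapping events of different classes are scored independently, which is what makes a segment-based comparison natural for polyphonic annotations and outputs.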

Introduction

Acoustic environments surrounding us in our everyday life are full of sounds that provide important information for understanding what is happening around us. Humans have formed tight associations between the events happening around them and the sounds these events produce. These associations can be represented as textual labels, which are used to label individual sound instances as sound events. This thesis deals with computational methods for the analysis of everyday environments. The core methods proposed in the thesis involve detecting large sets of sound events in real-life environments. In natural environments, sound events often occur simultaneously, which increases the complexity of acoustic modeling and makes detection difficult due to interfering sound sources. Furthermore, acoustic modeling cannot make strong assumptions about a sound or its structure, since sound instances of the same sound event class can generally have large intra-class variability.

Along with the increased use of digital audio in our everyday lives, automatic audio content analysis has become an increasingly sought-after capability. Content analysis enables an in-depth understanding of what was happening in the environment when the audio was captured, which in turn enables applications that react accurately to events in the environment. When work on this thesis started, there was very little prior work on computational audio content analysis directed at everyday environments. Research had focused on tightly controlled indoor environments, such as offices or meeting rooms, with a limited set of sound classes. Furthermore, the existing systems were able to detect only the most prominent sound event at each time instance. The use cases for such systems are rather limited: most everyday environments are much more diverse than those studied in these works, and detecting only the most prominent event substantially limits performance.

Audio Content Analysis in Everyday Environments

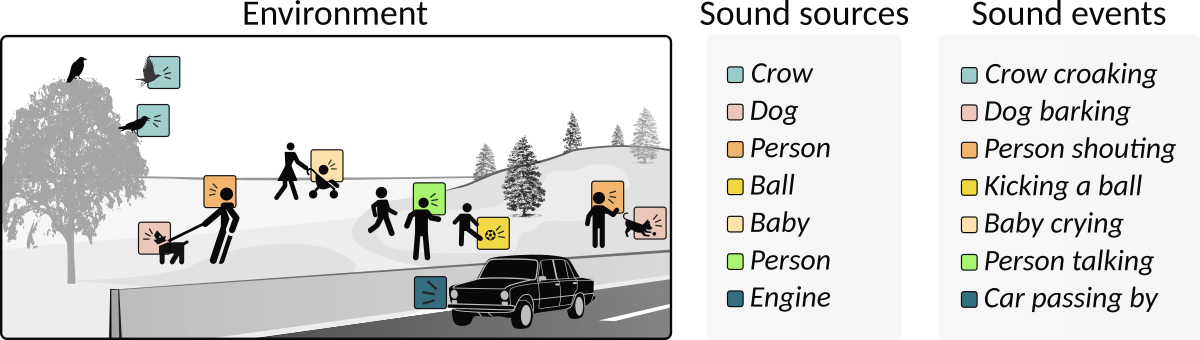

Acoustic environments surrounding us in our daily life represent different acoustic scenes defined by physical and social situations. Examples of acoustic scenes include office, home, busy street, and urban park. Everyday sound is a term used to describe a naturally occurring non-speech, non-music sound in the acoustic environment [Gygi2001]. The terms everyday sounds and environmental sounds are commonly used interchangeably in the literature. A sound source is an object or being that produces a sound through its own action or through an action directed at it. A sound event is a textual label that people would use to describe this sound-producing event; such labels allow people to understand the concepts behind the sounds and associate them with other known events. An example of an acoustic scene is shown in Figure 1.1, along with the active sound sources and the sound events associated with them.

Figure 1.1 Examples of sound sources and corresponding sound events in an urban park acoustic scene.

Machine listening is a research field studying the computational analysis and understanding of audio [Wang2010]. In this thesis, the term audio content analysis is used for approaches that focus specifically on recognizing the sounds in the audio signal. Possible sounds in the audio signal include speech, music, and everyday sounds; however, this thesis focuses only on everyday sounds. An analysis system is said to perform sound event detection (SED) if it provides a textual label and a start and end time for each sound event instance it recognizes.

Sound event detection systems are categorized based on their ability to handle simultaneous sound events. If a system can output only a single sound event at a time, often the most prominent one, it is said to perform monophonic sound event detection, whereas a system capable of outputting multiple simultaneous sound events is said to perform polyphonic sound event detection. In practice, current state-of-the-art polyphonic systems do not model or output multiple simultaneously active instances of the same event class; therefore, polyphony is defined in terms of distinct event classes.

Content analysis systems that output only sound class labels, without temporal activity, are said to perform either tagging or classification, depending on whether they can output multiple classes at a time or only a single class. These systems are closely related to detection, as they can easily be extended to output temporal activity with sufficient time resolution by applying classification to consecutive, overlapping short time segments. Even though SED is defined as detecting sound event instances, current modeling and algorithmic solutions treat the problem as audio tagging with fine temporal resolution, with added temporal modeling of consecutive frames. This makes the distinction between detection and tagging dependent on the application.
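The view of SED as fine-resolution tagging plus temporal modeling can be illustrated with a small post-processing sketch. It assumes some model has already produced frame-wise class probabilities; the function name `frames_to_events`, the 0.5 threshold, and the three-frame median filter are illustrative assumptions, not the methods developed in the thesis. Each class is processed independently, so events of different classes may overlap (polyphonic output), while a single class never yields two overlapping instances, matching the class-based definition of polyphony above.

```python
import numpy as np


def frames_to_events(probabilities, labels, hop_seconds, threshold=0.5,
                     filter_length=3):
    """Turn frame-wise class probabilities (n_frames x n_classes) into events.

    Hypothetical helper: each class is thresholded and smoothed on its own.
    filter_length is assumed to be odd.
    """
    active = np.asarray(probabilities) >= threshold
    n_frames = active.shape[0]
    half = filter_length // 2
    events = []
    for c, label in enumerate(labels):
        # Temporal modeling: a median filter removes isolated spikes and short gaps.
        padded = np.pad(active[:, c].astype(int), half, mode="edge")
        smoothed = np.array([np.median(padded[i:i + filter_length]) > 0.5
                             for i in range(n_frames)], dtype=int)
        # +1 marks an onset frame, -1 marks the first frame after an offset.
        changes = np.diff(np.concatenate(([0], smoothed, [0])))
        onsets = np.flatnonzero(changes == 1)
        offsets = np.flatnonzero(changes == -1)
        for on, off in zip(onsets, offsets):
            events.append((label, float(on * hop_seconds), float(off * hop_seconds)))
    return sorted(events, key=lambda e: (e[1], e[0]))


probs = np.array([[0.9, 0.1], [0.8, 0.1], [0.2, 0.1], [0.9, 0.7],
                  [0.9, 0.8], [0.1, 0.9], [0.1, 0.2], [0.1, 0.1]])
for event in frames_to_events(probs, ["speech", "dog"], hop_seconds=0.1):
    print(event)  # speech spans about 0.0-0.5 s, dog about 0.3-0.6 s (overlapping)
```

The median filter here only stands in for "temporal modeling of consecutive frames"; HMM decoding or another smoothing scheme could take its place.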
Acoustic scene classification (ASC) is a term used for systems that classify an entire recording into one of a set of predefined scene classes, whereas in sound event detection and audio tagging the predefined classes are sound classes.
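The difference between tagging, single-label classification, and ASC comes down to how class scores are turned into an output. The sketch below assumes a model has already produced per-class scores in [0, 1]; the 0.5 threshold, the mean pooling over frames, and all function names are illustrative assumptions rather than choices made in the thesis.

```python
from typing import Dict, List


def tag(scores: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Audio tagging: return every class whose score reaches the threshold."""
    return sorted(label for label, score in scores.items() if score >= threshold)


def classify(scores: Dict[str, float]) -> str:
    """Single-label classification: return only the top-scoring class."""
    return max(scores, key=scores.get)


def classify_scene(frame_scores: List[Dict[str, float]]) -> str:
    """Acoustic scene classification: average frame-wise scene scores over the
    whole recording (an assumed pooling choice), then pick one scene class."""
    classes = frame_scores[0].keys()
    mean = {c: sum(f[c] for f in frame_scores) / len(frame_scores) for c in classes}
    return classify(mean)


clip = {"dog": 0.8, "car": 0.6, "bird": 0.1}
print(tag(clip))       # ['car', 'dog']
print(classify(clip))  # dog
```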

Applications

Audio content analysis is applied in a variety of applications to gain an understanding of the actions happening in the environment. It can be used, for example, in acoustic monitoring, in indexing and searching multimedia data, and in analyzing human activity, and it supports research in neighboring fields such as bioacoustics and robotics.

Acoustic Monitoring

For monitoring applications, audio has many benefits over video: audio capture is often considered less intrusive than video, works equally well in all lighting conditions, does not require a direct line of sight as video capture does, and can easily cover large areas. Furthermore, the computational requirements for analyzing audio are far lower than for video, enabling large-scale deployment of monitoring applications. In surveillance and security applications, sound recognition and sound event detection can be used to monitor the environment for specific sounds and to trigger an alarm once such a sound has been detected [Foggia2016, Crocco2016]. Sounds of interest for these applications include, for example, glass breaking, sirens, gunshots, door slams, and screams. In healthcare applications, the same methods can be used, for example, to analyze cough patterns [Peng2009, Goetze2012] or epileptic seizures [Ahsan2019] over long periods to assist medical care personnel. In urban monitoring, audio content analysis methods can be used to identify sounds such as sirens, drills, and street music in urban environments and to analyze their correlation with noise complaints [Bello2019]. Sound recognition methods can also be used to attribute noise level measurements to the actual sound sources in the environment, enabling more accurate noise measurements [Maijala2018].
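Attributing noise levels to sources involves one detail that is easy to get wrong: decibel levels must be averaged on the energy scale. The sketch below is a hypothetical illustration, not the method of [Maijala2018]. It assumes equal-length measurement segments, each already labeled with its dominant source by some classifier and carrying a measured level in dB, and computes an equivalent level per source class.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def leq_per_source(segments: List[Tuple[str, float]]) -> Dict[str, float]:
    """Equivalent level (dB) per source from (source label, segment level in dB).

    Hypothetical helper: assumes equal-length segments labeled with their
    dominant source. Levels are averaged as energies, not as decibels.
    """
    energies: Dict[str, List[float]] = defaultdict(list)
    for source, level_db in segments:
        energies[source].append(10 ** (level_db / 10))
    return {source: 10 * math.log10(sum(values) / len(values))
            for source, values in energies.items()}


measurements = [("traffic", 60.0), ("traffic", 70.0), ("birds", 45.0)]
print(leq_per_source(measurements))  # traffic about 67.4 dB, birds 45.0 dB
```

Segments at 60 dB and 70 dB combine to about 67.4 dB rather than 65 dB, because the louder segment dominates the energy average.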

Smart Sound Sensors

Many monitoring applications use small wireless devices, sensors, to capture audio. If a sensor also has built-in audio content analysis capabilities, it is referred to as a smart sound sensor. Such sensors are used when the overall system must scale up easily in terms of computational resources and wireless communication. Instead of streaming the captured audio, or acoustic features extracted from it, to an analysis service, smart sensors transmit only information about the content of the captured audio, which substantially lowers the data transmission requirements. Smart sensors are commonly used in smart home [Krstulovic2018] and smart city [Bello2018, Bello2019] applications. In smart home applications, sensors are used to collect data from a home for security purposes or to assist home automation systems. Audio can be used, for example, to detect glass breaking or dog barking to trigger an alarm. In smart city applications, sensors collect a variety of sensory data to help manage city resources, and audio can be included by using sound recognition approaches [Mydlarz2017, Maijala2018].
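The bandwidth argument for smart sensors can be checked with simple arithmetic. The sketch below compares uncompressed audio with a compact content message; the 16 kHz, 16-bit mono format, the JSON message layout, and the helper names are all hypothetical assumptions chosen for illustration, not a format used in the cited systems.

```python
import json
from typing import List


def raw_audio_bytes(seconds: float, sample_rate_hz: int = 16000,
                    bits_per_sample: int = 16, channels: int = 1) -> int:
    """Size of uncompressed PCM audio for the given duration (assumed format)."""
    return int(seconds * sample_rate_hz * bits_per_sample * channels // 8)


def content_message(sensor_id: str, window_start: float, labels: List[str]) -> bytes:
    """Hypothetical compact message a smart sensor could send instead of audio."""
    payload = {"sensor": sensor_id, "t": window_start, "labels": sorted(labels)}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


audio = raw_audio_bytes(10.0)
message = content_message("sensor-01", 0.0, ["glass_break", "dog_bark"])
print(audio, len(message))
```

Under these assumptions, ten seconds of audio occupies 320 000 bytes, whereas the label message is under 100 bytes.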

Content-Based Retrieval

Content-based analysis and search functionality is an important step toward fully utilizing online services that host large repositories of audio and video content. Audio content analysis approaches can be used in these services to enable content-based retrieval of multimedia recordings [Xu2008, Takahashi2018]. In addition to search, content analysis methods can be used to moderate content automatically.
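One simple way to make detector output searchable is an inverted index from sound event labels to the recordings and time spans in which they occur. This is a minimal sketch under assumed data structures, not the approach of [Xu2008] or [Takahashi2018]; the recording identifiers, labels, and helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical detector output: (label, onset in s, offset in s).
Event = Tuple[str, float, float]
Hit = Tuple[str, float, float]  # (recording id, onset, offset)


def build_index(recordings: Dict[str, List[Event]]) -> Dict[str, List[Hit]]:
    """Inverted index from sound event label to where and when it occurs."""
    index: Dict[str, List[Hit]] = defaultdict(list)
    for recording_id, events in recordings.items():
        for label, onset, offset in events:
            index[label].append((recording_id, onset, offset))
    return index


def search(index: Dict[str, List[Hit]], label: str) -> List[Hit]:
    """All occurrences of a label, ordered by recording id and onset."""
    return sorted(index.get(label, []))


index = build_index({
    "clip_a": [("dog_bark", 1.0, 2.5), ("car", 0.0, 4.0)],
    "clip_b": [("dog_bark", 3.0, 3.5)],
})
print(search(index, "dog_bark"))
```

Keeping onset and offset in the index lets a search result jump directly to the matching part of a recording rather than only returning whole files.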

Human Activity Analysis

Human activity is often the main source of sounds in everyday environments, and this is valuable information in many applications. Activities such as brewing coffee, cleaning, cooking, eating, or taking a shower are usually broader concepts than sound events. Audio content analysis can be used to identify and detect these activities, either by detecting individual sound events associated with an activity [Chahuara2016] or by directly detecting activity concepts [Ntalampiras2018].
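The first strategy, inferring an activity from the sound events detected during it, can be sketched with a simple rule. This is only an illustration under hypothetical assumptions: the activity-to-event mapping, the event names, and the `min_matches` parameter are invented for the example, and the cited work [Chahuara2016] compares learned sequential and non-sequential models rather than a fixed rule like this.

```python
from typing import Dict, Optional, Set

# Hypothetical mapping from activities to sound events that often accompany them.
ACTIVITY_EVENTS: Dict[str, Set[str]] = {
    "brewing coffee": {"coffee_grinder", "water_running", "kettle_boiling"},
    "taking a shower": {"water_running", "door", "hair_dryer"},
}


def infer_activity(detected: Set[str],
                   activity_events: Dict[str, Set[str]] = ACTIVITY_EVENTS,
                   min_matches: int = 2) -> Optional[str]:
    """Return the activity sharing the most detected events, if enough match."""
    best, best_count = None, 0
    for activity, events in activity_events.items():
        count = len(detected & events)
        if count > best_count:
            best, best_count = activity, count
    return best if best_count >= min_matches else None


print(infer_activity({"water_running", "coffee_grinder"}))  # brewing coffee
print(infer_activity({"water_running"}))                    # None
```

Requiring more than one matching event reflects that a single sound, such as running water, is shared by several activities and is ambiguous on its own.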

Other Research Fields

Audio content analysis can be used in other research fields to facilitate analysis of, or interaction with, the environment. Bioacoustic research increasingly utilizes audio content analysis methods [Stowell2018]. These methods can be used in wildlife population monitoring [Stowell2017] and in animal species identification based on vocalizations, and the analysis results can be further utilized in assessing the biodiversity of environments [Gasc2013]. In farm monitoring applications, audio content analysis can be used for assessing animal stress levels [Lee2015, Du2020] and detecting symptoms of diseases [Carroll2014]. In robotics, audio content analysis methods provide important information about the acoustic environment and the actions in it. Social robots, such as home service robots, can use sound event recognition to facilitate enhanced human-robot interaction [Stiefelhagen2007, Janvier2012, Do2016].

References

| [Ahsan2019] | Ahsan, Istiaq M. N., C. Kertesz, A. Mesaros, T. Heittola, A. Knight, and T. Virtanen. 2019. “Audio-Based Epileptic Seizure Detection.” In 27th European Signal Processing Conference (EUSIPCO), 1–5. |

| [Bello2018] | Bello, Juan Pablo, Charlie Mydlarz, and Justin Salamon. 2018. “Sound Analysis in Smart Cities.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 373–97. Cham, Switzerland: Springer Verlag. |

| [Bello2019] | Bello, Juan P., Claudio Silva, Oded Nov, R. Luke Dubois, Anish Arora, Justin Salamon, Charles Mydlarz, and Harish Doraiswamy. 2019. “SONYC: A System for Monitoring, Analyzing, and Mitigating Urban Noise Pollution.” Communications of the ACM 62 (2): 68–77. |

| [Carroll2014] | Carroll, B. T., D. V. Anderson, W. Daley, S. Harbert, D. F. Britton, and M. W. Jackwood. 2014. “Detecting Symptoms of Diseases in Poultry Through Audio Signal Processing.” In 2014 IEEE Global Conference on Signal and Information Processing (GlobalSIP), 1132–35. |

| [Chahuara2016] | Chahuara, Pedro, Anthony Fleury, François Portet, and Michel Vacher. 2016. “On-Line Human Activity Recognition from Audio and Home Automation Sensors: Comparison of Sequential and Non-Sequential Models in Realistic Smart Homes.” Journal of Ambient Intelligence and Smart Environments 8 (4): 399–422. |

| [Crocco2016] | Crocco, Marco, Marco Cristani, Andrea Trucco, and Vittorio Murino. 2016. “Audio Surveillance: A Systematic Review.” ACM Computing Surveys 48 (4). |

| [Do2016] | Do, H. M., W. Sheng, M. Liu, and Senlin Zhang. 2016. “Context-Aware Sound Event Recognition for Home Service Robots.” In 2016 IEEE International Conference on Automation Science and Engineering (CASE), 739–44. |

| [Du2020] | Du, Xiaodong, Lenn Carpentier, Guanghui Teng, Mulin Liu, Chaoyuan Wang, and Tomas Norton. 2020. “Assessment of Laying Hens’ Thermal Comfort Using Sound Technology.” Sensors 20 (2): 473. |

| [Foggia2016] | Foggia, P., N. Petkov, A. Saggese, N. Strisciuglio, and M. Vento. 2016. “Audio Surveillance of Roads: A System for Detecting Anomalous Sounds.” IEEE Transactions on Intelligent Transportation Systems 17 (1): 279–88. |

| [Gasc2013] | Gasc, A., J. Sueur, F. Jiguet, V. Devictor, P. Grandcolas, C. Burrow, M. Depraetere, and S. Pavoine. 2013. “Assessing Biodiversity with Sound: Do Acoustic Diversity Indices Reflect Phylogenetic and Functional Diversities of Bird Communities?” Ecological Indicators 25: 279–87. |

| [Goetze2012] | Goetze, Stefan, Jens Schroder, Stephan Gerlach, Danilo Hollosi, Jens-E Appell, and Frank Wallhoff. 2012. “Acoustic Monitoring and Localization for Social Care.” Journal of Computing Science and Engineering 6 (1): 40–50. |

| [Gygi2001] | Gygi, Brian. 2001. “Factors in the Identification of Environmental Sounds.” PhD thesis, Indiana University. |

| [Janvier2012] | Janvier, M., X. Alameda-Pineda, L. Girin, and R. Horaud. 2012. “Sound-Event Recognition with a Companion Humanoid.” In 2012 12th IEEE-RAS International Conference on Humanoid Robots (Humanoids 2012), 104–11. |

| [Krstulovic2018] | Krstulović, Sacha. 2018. “Audio Event Recognition in the Smart Home.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 335–71. Cham, Switzerland: Springer Verlag. |

| [Lee2015] | Lee, Jonguk, Byeongjoon Noh, Suin Jang, Daihee Park, Yongwha Chung, and Hong-Hee Chang. 2015. “Stress Detection and Classification of Laying Hens by Sound Analysis.” Asian-Australasian Journal of Animal Sciences 28 (4): 592. |

| [Maijala2018] | Maijala, Panu, Shuyang Zhao, Toni Heittola, and Tuomas Virtanen. 2018. “Environmental Noise Monitoring Using Source Classification in Sensors.” Applied Acoustics 129 (6): 258–67. |

| [Mydlarz2017] | Mydlarz, Charlie, Justin Salamon, and Juan Pablo Bello. 2017. “The Implementation of Low-Cost Urban Acoustic Monitoring Devices.” Applied Acoustics 117 (February): 207–18. |

| [Ntalampiras2018] | Ntalampiras, Stavros, and Ilyas Potamitis. 2018. “Transfer Learning for Improved Audio-Based Human Activity Recognition.” Biosensors 8 (3): 60. |

| [Peng2009] | Peng, Ya-Ti, Ching-Yung Lin, Ming-Ting Sun, and Kun-Cheng Tsai. 2009. “Healthcare Audio Event Classification Using Hidden Markov Models and Hierarchical Hidden Markov Models.” In 2009 IEEE International Conference on Multimedia and Expo, 1218–21. |

| [Stiefelhagen2007] | Stiefelhagen, Rainer, Keni Bernardin, Rachel Bowers, R. Travis Rose, Martial Michel, and John S. Garofolo. 2007. “The CLEAR 2007 Evaluation.” In Multimodal Technologies for Perception of Humans, International Evaluation Workshops CLEAR 2007 and RT 2007, edited by Rainer Stiefelhagen, Rachel Bowers, and Jonathan G. Fiscus, 4625:3–34. Lecture Notes in Computer Science. Cham, Switzerland: Springer Verlag. |

| [Stowell2017] | Stowell, D., E. Benetos, and L. F. Gill. 2017. “On-Bird Sound Recordings: Automatic Acoustic Recognition of Activities and Contexts.” IEEE/ACM Transactions on Audio, Speech, and Language Processing 25 (6): 1193–1206. |

| [Stowell2018] | Stowell, Dan. 2018. “Computational Bioacoustic Scene Analysis.” In Computational Analysis of Sound Scenes and Events, edited by Tuomas Virtanen, Mark D. Plumbley, and Dan Ellis, 303–33. Cham, Switzerland: Springer Verlag. |

| [Takahashi2018] | Takahashi, N., M. Gygli, and L. Van Gool. 2018. “AENet: Learning Deep Audio Features for Video Analysis.” IEEE Transactions on Multimedia 20 (3): 513–24. |

| [Wang2010] | Wang, Wenwu. 2010. Machine Audition: Principles, Algorithms and Systems. Hershey, PA, USA: IGI Global. |

| [Xu2008] | Xu, Min, Changsheng Xu, Lingyu Duan, Jesse S. Jin, and Suhuai Luo. 2008. “Audio Keywords Generation for Sports Video Analysis.” ACM Trans. Multimedia Comput. Commun. Appl. 4 (2). |